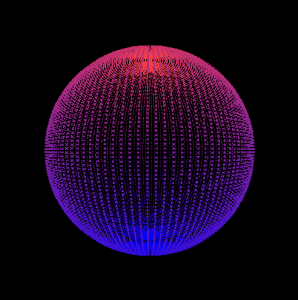

Simon: This week, with some help, I got FMOD working in the Discover system. The simulation now plays music and has a basic working spectrum visualizer.

Tim: I looked at the code for the texture packs.

Chelsi: I got video footage and started compiling the clips we will alternate through in the background video.

Accomplishments: This week we got the music analysis to work in real time. Also, we now have video footage to play in the background of the simulation. The model is simple now but can be made more complex later.

Problems: Everything went pretty smoothly this week. Learning textures in OpenGL is still challenging, but otherwise almost everything we want to do has been implemented successfully.

Schedule: We are on schedule now that the music analyzer is working. Until the class is over, we will keep refining what we have to make it fancier.

Next week: Put the video into the simulation and add more detail to the visualizer.