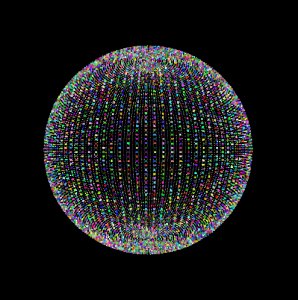

1. For our project we developed a music visualizer for the song ‘Hack’ by Sam Armus. The experience is immersive, taking advantage of virtual reality to surround the user with the visualizations. The program combines recorded video with a basic spectrum analyzer generated from any music file the user chooses. The video files are played using the video object code provided by Kevin. The music analysis is done using Fmod. The visuals are generated from the spectrum data and drawn using OpenGL. The rest of the program is based on the default Discover system code.

The visualizer takes advantage of the Discover system's design to create a 180-degree viewing field. In the center of the screen, columns of squares represent the different frequencies; the longer the column, the more prominent that frequency is in the music.
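The mapping from spectrum data to column lengths can be sketched roughly as follows. This is a minimal illustration, not the code used in the project: the function name `columnHeights`, the assumption that magnitudes lie between 0 and 1, and the choice to take the peak value of each group of frequency bins are all hypothetical. In the real program the spectrum array would come from Fmod and the squares would be drawn with OpenGL; here the spectrum is just a plain vector.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not from the project): converts spectrum magnitudes,
// assumed to lie in [0, 1], into the number of squares to stack per column.
std::vector<int> columnHeights(const std::vector<float>& spectrum,
                               std::size_t numColumns,
                               int maxSquares) {
    assert(maxSquares >= 0);
    std::vector<int> heights(numColumns, 0);
    if (spectrum.empty() || numColumns == 0) {
        return heights;  // nothing to analyze: every column stays empty
    }

    for (std::size_t c = 0; c < numColumns; ++c) {
        // Each column covers a contiguous slice of frequency bins.
        std::size_t begin = c * spectrum.size() / numColumns;
        std::size_t end = (c + 1) * spectrum.size() / numColumns;
        if (end <= begin) {
            end = begin + 1;  // more columns than bins: use a single bin
        }

        // Use the loudest bin in the slice as the column's level.
        float peak = 0.0f;
        for (std::size_t i = begin; i < end; ++i) {
            peak = std::max(peak, spectrum[i]);
        }

        // Clamp out-of-range values, then round to a whole number of squares.
        peak = std::min(1.0f, std::max(0.0f, peak));
        heights[c] = static_cast<int>(peak * maxSquares + 0.5f);
    }
    return heights;
}
```

Calling `columnHeights(spectrum, 4, 10)` each frame would give four column lengths of at most ten squares each; a drawing routine could then loop over the columns and draw that many squares in each one.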

In addition to the spectrum, we created a custom video for the track we selected, making this project more specific to a certain song. The video is a compilation of live footage from shows at Moogfest 2014. We played around with the coloring of the video, inverting the spectrum at certain points to create a more immersive environment for the users.

2. Simon’s role on the project was to code the program, including the graphical elements. This involved learning the basics of OpenGL and adapting some Fmod tutorial code to work with the rest of our program. He also helped come up with concepts for what the program would do near the start of the project.

Tim mainly worked on finding different aspects of the song to analyze. He found a candidate analysis program, and while it offered a lot of options, in the end it wasn’t exactly what we were looking for. Other than this, he helped brainstorm ideas for the visualization at the beginning of the project.

Chelsi shot live footage for the video and compiled it into the background of the visualizer. The video was created with iMovie, using the filters and transitions within the program to create something that captured the feeling we were trying to get across. The video is approximately 7:30 long and is separate from the spectrum and music tracking. The video loops at the end, allowing the user to experience the visualizer for as long as they’d like.

3. We are happy with how the project turned out. Many elements of its design turned out to be more complicated than we had hoped, but we had planned for this and had many simpler ideas to fall back on. OpenGL and the music analysis were the trickiest aspects to implement successfully. We think the end result was not too tricky to design but is still effective in achieving our original goal: to make a cool virtual reality music visualizer.

In addition, we think it’s great that we got to incorporate multiple aspects within the visualizer, including the song, spectrum, and video. We are extremely happy with how the video turned out, because that was something we were very unsure of at the start. Overall, the final product exceeded all of our expectations and we are proud of the end result.

4. Learning how to code with OpenGL was tricky at first, especially because it was hard to test the code at home. We couldn’t find a good C++ development tool to use on a home computer, and even with one that works, the code has to be tested in the Discover system itself to know whether it is effective. The music analysis also took a long time to figure out, but we found an effective method by the end of the project.

We also had a very difficult time trying to understand Sonic Visualiser, the program we were originally going to use to analyze the music. We think it is designed for more than what we needed, although it has a lot of really interesting features that we could have used. However, the program is aimed at someone with more background in this area, and Fmod worked very well as a replacement.

5. The final project did not have as much interactivity as the initial idea, but otherwise we think it remained relatively faithful. We did not have enough time or knowledge to implement music that changes as the user interacts with the virtual scene; additionally, without the project files for the music we used, this would have been even more difficult.

One addition we would have liked to make is letting the user move around in the space, with different locations causing different parts of the song to become more prominent. We also would have liked a greater variety of shapes within the spectrum, but we are happy to at least have color responses within it.

We also had some trouble getting started: none of us had ever worked on a project like this, and we were hesitant to begin without knowing much about the process. In the end we were all able to find a part in the project and put everything together successfully.

6. With more time, we think making the environment more interactive would be exciting. Additionally, adding other types of visualizers and including more kinds of recorded video and music would give the program much more variety. We would also have liked to use some of the information we could get from Sonic Visualiser, but that would significantly change the way the visualizer was set up. Another plan would be adding more interactivity through movement, with the audio changing as a result of that movement. There could also be some change that happens in response to a gesture or hand wave.

Overall, having more time to pay attention to details and polish the visualizer would have been beneficial. We built our schedule around creating a simple version first and adapting it as time allowed, so there is still the possibility to improve it even after our final presentation.