For a complete list of publications, visit my Google Scholar page at: http://scholar.google.com/citations?user=SpLMYBMAAAAJ&hl=en

Additional information can be found on my ResearchGate profile at: https://www.researchgate.net/profile/Kevin_Ponto/

Selected Publications

IEEEVR 2019

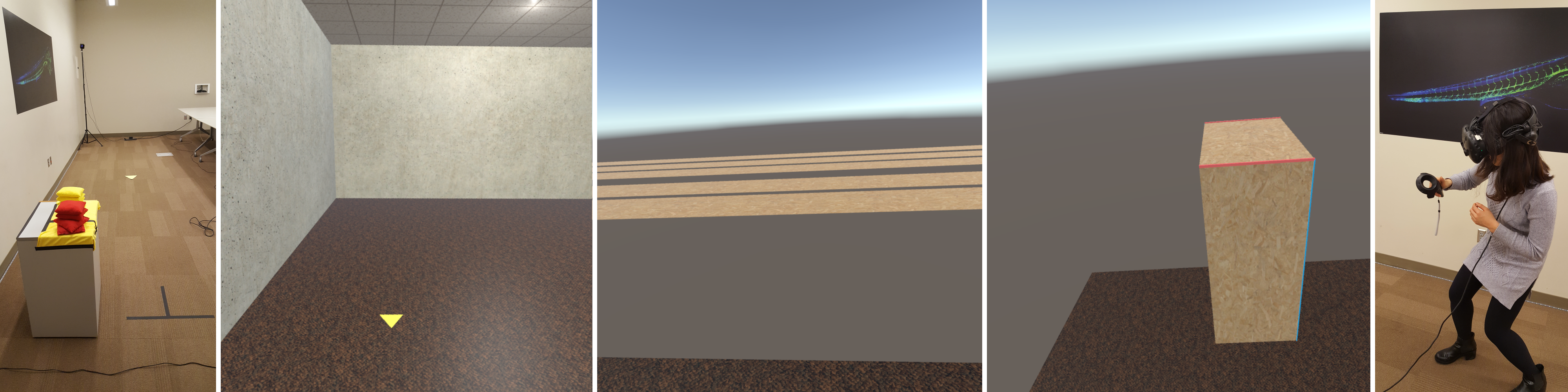

Viewers of virtual reality appear to have an incorrect sense of space when performing blind directed-action tasks, such as blind walking or blind throwing. It has been shown that various manipulations can influence this incorrect sense of space, and that the degree of misperception varies by person. It follows that one could measure the degree of misperception an individual experiences and generate some manipulation to correct for it, though it is not clear that correct behavior in a specific blind directed action task leads to correct behavior in all tasks in general. In this work, we evaluate the effectiveness of correcting perceived distance in virtual reality by first measuring individual perceived distance through blind throwing, then manipulating sense of space using a vertex shader to make things appear more or less distant, to a degree personalized to the individual’s perceived distance. Two variants of the manipulation are explored. The effects of these personalized manipulations are first evaluated when performing the same blind throwing task used to calibrate the manipulation. Then, in order to observe the effects of the manipulation on dissimilar tasks, participants perform two perceptual matching tasks which allow full visual feedback as objects, or the participants themselves, move through space.

VAR4Good Workshop in IEEEVR 2018

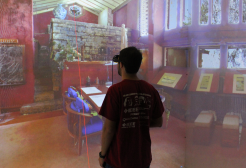

Managing one’s own healthcare has been progressively shifting from hospitals and healthcare facilities to one’s own day-to-day living environment. Due to this change, studying how people care for themselves becomes more challenging, as visiting people in their living environments is intrusive and often logistically difficult. LiDAR scanning technology allows for highly detailed capture and generation of 3D models. With proper rendering software, virtual reality (VR) technology enables the display of LiDAR models in a manner that provides immersion and a sense of presence, as though one were situated in the scanned model. This document describes the combination of LiDAR and VR technology to enable the study of health in the home. A research project studying how home context affects diabetic patients’ ability to manage their health information in the home is presented as an example. The document finishes by discussing other potential health care applications that could use similar methodologies to improve health in the home for additional chronically ill populations.

IEEEVR

The alignment of virtual and real coordinate spaces is a general problem in virtual reality research, as misalignments may influence experiments that depend on correct representation or registration of objects in space. This work proposes an automated alignment and correction for the HTC Vive tracking system by using three Vive Trackers arranged to describe the desired axis of origin in the real space. The proposed technique should facilitate the alignment of real and virtual scenes, and automatic correction of a source of error in the Vive tracking system shown to cause misalignments on the order of tens of centimeters. An initial proof-of-concept simulation on recorded data demonstrates a significant reduction of error.
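
As a rough illustration of how three tracked positions can describe an origin and axes, the sketch below builds an orthonormal frame from three points and re-expresses a tracked position in that frame. It is a generic construction, not the paper's algorithm: the tracker roles (one at the origin, one along the x-axis, one elsewhere in the x-z plane) and the function names `frame_from_trackers` and `to_real_frame` are assumptions made for this example.

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v):
    n = math.sqrt(dot(v, v))
    if n == 0.0:
        raise ValueError("degenerate tracker layout (zero-length vector)")
    return (v[0] / n, v[1] / n, v[2] / n)


def frame_from_trackers(origin, on_x, in_plane):
    """Return right-handed unit axes (x, y, z) from three tracker positions.

    Assumed roles (hypothetical): `origin` marks the desired origin,
    `on_x` lies along the desired x-axis, `in_plane` is any third,
    non-collinear point; it only fixes the orientation of the frame.
    """
    x = normalize(sub(on_x, origin))
    # z is perpendicular to the plane spanned by the three trackers.
    z = normalize(cross(x, sub(in_plane, origin)))
    # y completes the right-handed frame (x cross y == z).
    y = cross(z, x)
    return x, y, z


def to_real_frame(point, origin, axes):
    """Express a tracked point in the frame defined by `origin` and `axes`."""
    d = sub(point, origin)
    return tuple(dot(d, axis) for axis in axes)
```

Collinear trackers make the cross product vanish, so `normalize` raises rather than returning an arbitrary frame.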

Simulating the Experience of Home Environments

ICVR

Growing evidence indicates that transitioning patients are often unprepared for the self-management role they must assume when they return home. Over the past twenty-five years, LiDAR scanning has emerged as a technology that allows for the rapid acquisition of three-dimensional data of real-world environments, while new virtual reality (VR) technology allows users to experience simulated environments. However, combining these two technologies can be difficult, as previous approaches to interactively rendering large point clouds have generally created a trade-off between interactivity and quality. For instance, many techniques used in commercially available software sub-sample data during interaction, only showing a high-quality render when the viewpoint is kept static. Unfortunately, for displays in which viewpoints are rarely static, such as virtual reality systems, these methods are not useful. This paper presents a novel approach to the quality-interactivity trade-off through a progressive feedback-driven rendering algorithm. This technique uses reprojections of past views to accelerate the reconstruction of the current view and can be used to extend existing point cloud viewing algorithms. The presented method is tested against previous methods, demonstrating marked improvements in both rendering quality and interactivity. This algorithm and rendering application could serve as a tool to enable virtual rehabilitation within 3D models of one’s own home from a remote location.

Evaluating Perceived Distance Measures in Room-Scale Spaces using Consumer-Grade Head Mounted Displays

3D User Interfaces (3DUI), 2017 IEEE Symposium on,

Distance misperception (sometimes called distance compression) in immersive virtual environments is an active area of study, and the recent availability of consumer-grade display and tracking technologies raises new questions. This work explores the plausibility of measuring misperceptions within the small tracking volumes of consumer-grade technology, whether measures practical within this space are directly comparable, and whether contemporary displays induce distance misperceptions.

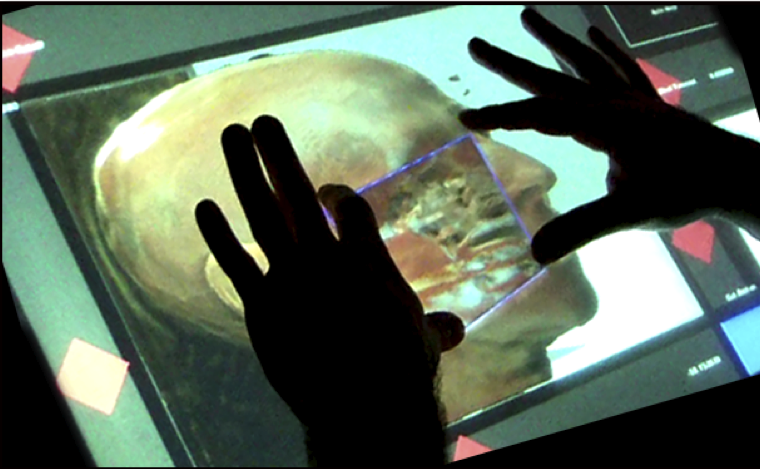

Uni-CAVE: A Unity3D Plugin for Non-head Mounted VR Display Systems

IEEEVR

2015. In Print.

Unity3D has become a popular, freely available 3D game engine for design and construction of virtual environments. Unfortunately, the few options that currently exist for adapting Unity3D to distributed immersive tiled or projection-based VR display systems rely on closed commercial products. Uni-CAVE aims to solve this problem by creating a freely-available and easy to use Unity3D extension package for cluster-based VR display systems. This extension provides support for head and device tracking, stereo rendering and display synchronization. Furthermore, Uni-CAVE enables configuration within the Unity environment enabling researchers to get quickly up and running.

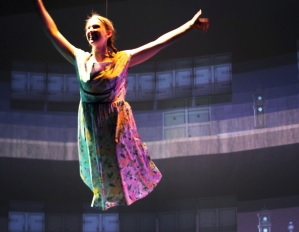

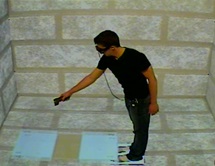

Designing Extreme 3D User Interfaces for Augmented Live Performances

3D User Interfaces (3DUI), 2016 IEEE Symposium on,

This paper presents a proof-of-concept system that enables the integrated virtual and physical traversal of a space through locomotion, automatic treadmill, and stage based flying system. The automatic treadmill enables the user to walk or run without manual intervention while the flying system enables the user to control their height above the stage using a gesture-based control scheme. The system is showcased through a live performance event that demonstrates the ability to put the actor in active control of the performance. This approach enables a new performance methodology with exciting new options for theatrical storytelling, educational training, and interactive entertainment.

SafeHOME: Promoting Safe Transitions to the Home

Studies in health technology and informatics

v. 220 p. 51 2016

This paper introduces the SafeHOME Simulator system, a set of immersive Virtual Reality Training tools and display systems to train patients in safe discharge procedures in captured environments of their actual houses. The aim is to lower patient readmission by significantly improving discharge planning and training. The SafeHOME Simulator is a project currently under review.

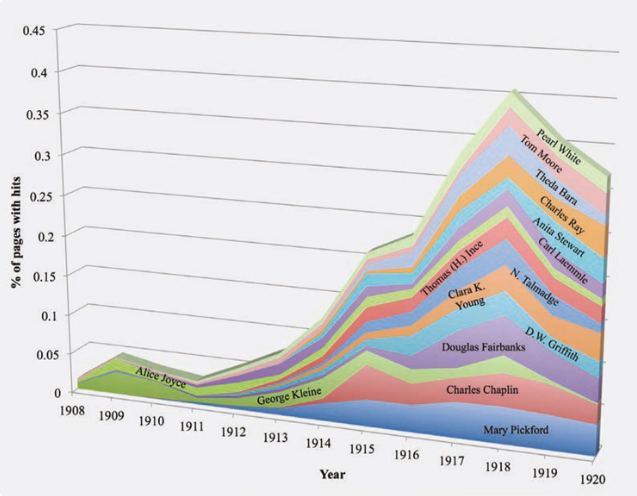

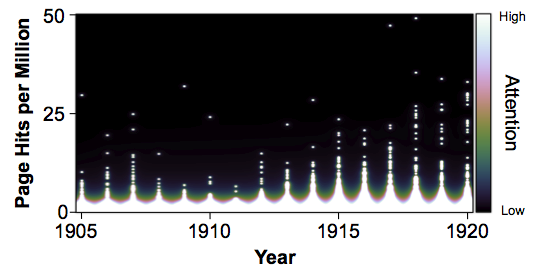

Who’s Trending in 1910s American Cinema?: Exploring ECHO and MHDL at Scale with Arclight

The Moving Image

v. 16 n. 1 p. 57-81 2016

Over the last decade, digital humanities scholars have challenged a diverse set of academic disciplines to reevaluate traditional canons and histories through the use of computational methods. Whether we choose to call such approaches “distant reading” (as Franco Moretti does), “macroanalysis” (as per Matthew Jockers), or simply “text mining,” the basic intervention of these digital methods remains the same: presenting opportunities to harness the power of scale, shifting our attention away from a small number of canonical texts and …

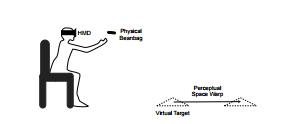

Perceptual Space Warping: Preliminary Exploration

IEEEVR

2016

Distance has been shown to be incorrectly estimated in virtual environments relative to the same estimation tasks in a real environment. This work describes a preliminary exploration of Perceptual Space Warping, which influences perceived distance in virtual environments by using a vertex shader to warp geometry. Empirical tests demonstrate significant effects, but of smaller magnitude than expected.

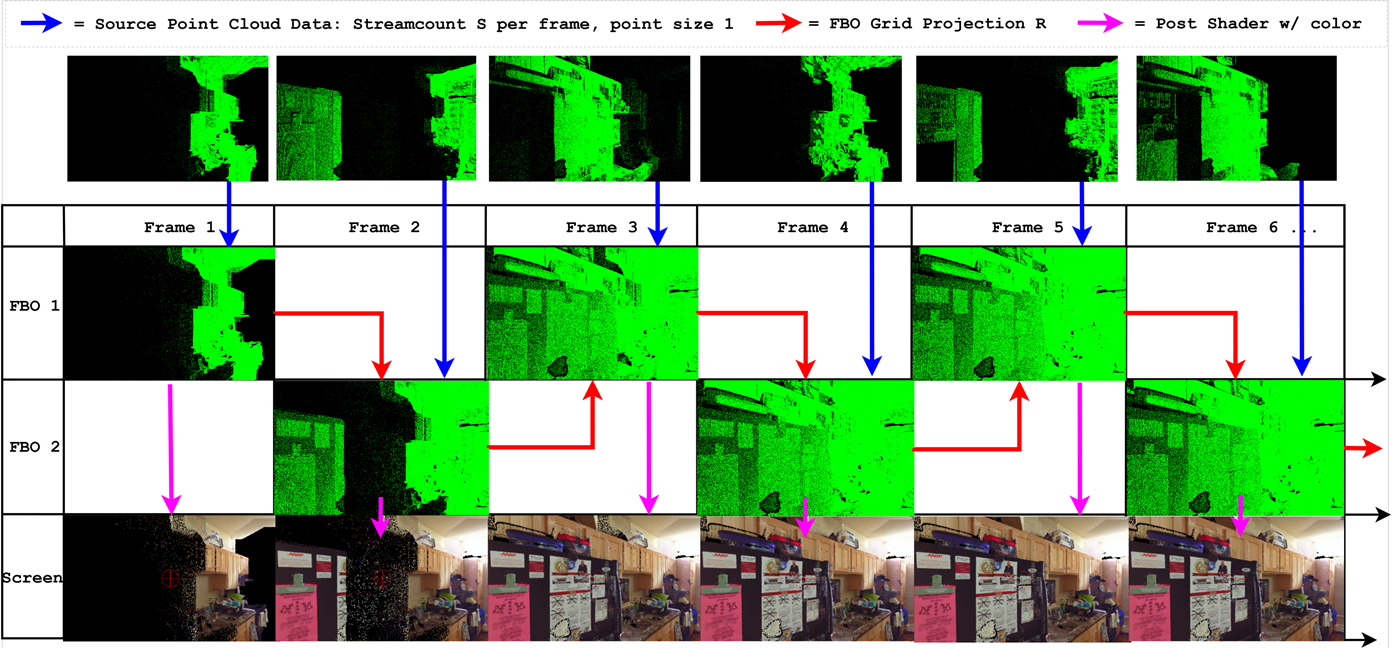

Progressive Feedback Point Cloud Rendering for Virtual Reality Display

IEEEVR

2016

Previous approaches to rendering large point clouds on immersive displays have generally created a trade-off between interactivity and quality. While these approaches have been quite successful for desktop environments when interaction is limited, virtual reality systems are continuously interactive, which forces users to suffer through either low frame rates or low image quality. This paper presents a novel approach to this problem through a progressive feedback-driven rendering algorithm. This algorithm uses reprojections of past views to accelerate the reconstruction of the current view. The presented method is tested against previous methods, showing improvements in both rendering quality and interactivity.

Transient Motion Groups for Interactive Visualization of Time-Varying Point Clouds

IEEE Aerospace

DOI

10.1007/s10055-014-0254-0.

Physical simulations provide a rich source of time-variant three-dimensional data. Unfortunately, the data generated from these types of simulation are often large in size and are thereby only experienced through pre-rendered movies from a fixed viewpoint. Rendering of large point cloud data sets is well understood; however, the data requirements for rendering a sequence of such data sets grow linearly with the number of frames in the sequence. Both GPU memory and upload speed are limiting factors for interactive playback speeds. While previous techniques have shown the ability to reduce the storage sizes, decompression for these methods is shown to be too time- and computation-intensive for interactive playback. This article presents a compression method which detects and describes group motion within the point clouds over the temporal domain. High compression rates are achieved through careful re-ordering of the points within the clouds and the implicit group movement information. The presented data structures enable efficient storage, fast decompression speeds and high rendering performance. We test our method on four different data sets, confirming that it is able to reduce storage requirements and increase playback performance while maintaining data integrity, unlike existing methods, which either only reduce file sizes or lose data integrity.

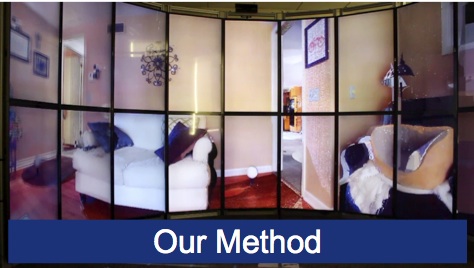

Experiencing Interior Environments: New Approaches for the Immersive Display of Large-Scale Point Cloud Data

IEEEVR

2015. In Print.

This document introduces a new application for rendering massive LiDAR point cloud data sets of interior environments within high-resolution immersive VR display systems. Overall contributions are: to create an application which is able to visualize large-scale point clouds at interactive rates in immersive display environments, to develop a flexible pipeline for processing LiDAR data sets that allows display of both minimally processed and more rigorously processed point clouds, and to provide visualization mechanisms that produce accurate rendering of interior environments to better understand physical aspects of interior spaces. The work introduces three problems with producing accurate immersive rendering of LiDAR point cloud data sets of interiors and presents solutions to these problems. Rendering performance is compared between the developed application and a previous immersive LiDAR viewer.

The Living Environments Laboratory

IEEEVR

2015. In Print.

To accelerate the design of home care technologies, the Living Environments Laboratory uses a 6-sided CAVE and other visualization spaces to re-create every home environment on earth. We employ a LiDAR to capture actual home environments; the point clouds are then processed to enable the virtual renditions of these spaces to be experienced in CAVE and head-mounted display systems. Our basic research focus is on improving the virtual reality experience through better rendering techniques, natural interfaces (e.g. spoken, gesture) and more precise calibration of displays. Finally, the LEL has demonstrated a deep commitment to outreach in a variety of ways, such as through dance performances, art installations, and public events which have showcased the lab to over 3,500 citizen visitors in the three years since the lab has been open.

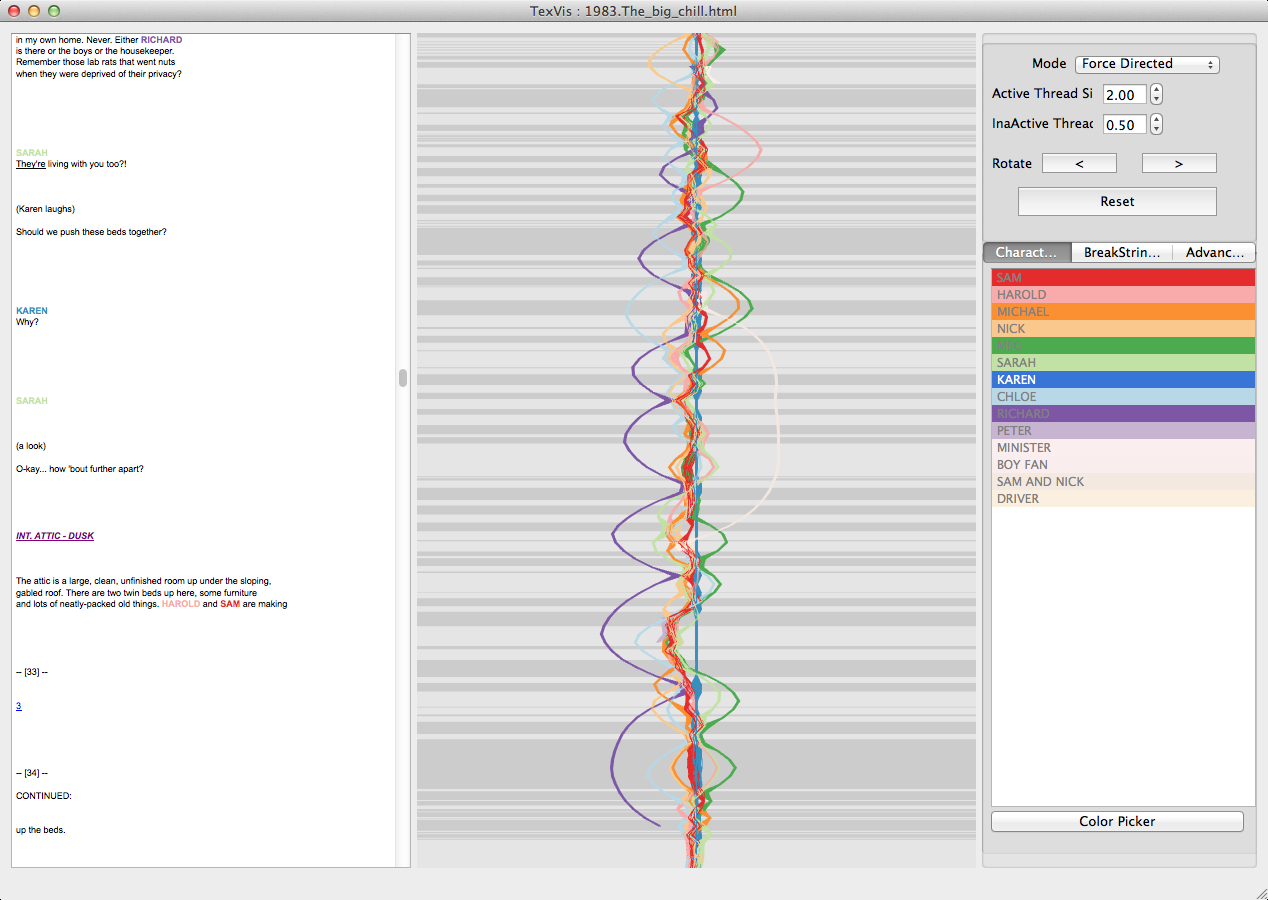

Visualizing and Analyzing the Hollywood Screenplay with ScripThreads

Digital Humanities Quarterly

2014. Volume 8 Number 4

Of all narrative textual forms, the motion picture screenplay may be the most perfectly pre-disposed for computational analysis. Screenplays contain capitalized character names, indented dialogue, and other formatting conventions that enable an algorithmic approach to analyzing and visualizing film narratives. In this article, the authors introduce their new tool, ScripThreads, which parses screenplays, outputs statistical values which can be analyzed, and offers four different types of visualization, each with its own utility. The visualizations represent character interactions across time as a single 3D or 2D graph. The authors model the utility of the tool for the close analysis of a single film (Lawrence Kasdan’s Grand Canyon [1991]). They also model how the tool can be used for “distant reading” by identifying patterns of character presence across a dataset of 674 screenplays.

DSCVR: designing a commodity hybrid virtual reality system

Virtual Reality

DOI

10.1007/s10055-014-0254-0.

This paper presents the design considerations, specifications, and lessons learned while building DSCVR, a commodity hybrid reality environment. Consumer technology has enabled a reduced cost for both 3D tracking and screens, enabling a new means for the creation of immersive display environments. However, this technology also presents many challenges, which need to be designed for and around. We compare the DSCVR System to other existing VR environments to analyze the trade-offs being made.

and Response to Critiques of Big Humanities Data Research

IEEE Big Humanities Data workshop

In Print.

Search has been unfairly maligned within digital humanities big data research. While many digital tools lack a wide audience due to the uncertainty of researchers regarding their operation and/or skepticism towards their utility, search offers functions already familiar and potentially transparent to a range of users. To adapt search to the scale of Big Data, we offer Scaled Entity Search (SES). Designed as an interpretive method to accompany an under-construction application that allows users to search hundreds or thousands of entities across a corpus simultaneously, in so doing restoring the context lost in keyword searching, SES balances critical reflection on the entities, corpus, and digital with an appreciation of how all of these factors interact to shape both our results and our future questions. Using examples from film and broadcasting history, we demonstrate the process and value of SES as performed over a corpus of 1.3 million pages of media industry documents.

Hierarchical Plane Extraction (HPE): An Efficient Method For Extraction Of Planes From Large Pointcloud Datasets

ACADIA 2014

In Print.

Light Detection And Ranging (LiDAR) scanners have enabled the high fidelity capture of physical environments. These devices output highly accurate pointcloud datasets which often comprise hundreds of millions to several billions of data points. Unfortunately, these pointcloud datasets are not well suited for traditional modeling and viewing applications. It is therefore important to create simplified polygonal models which maintain the original photographic information through the use of color textures. One such approach is to use RANSAC plane detection to find features such as walls, floors and ceilings. Unfortunately, as shown in this paper, while standard RANSAC works well for smaller data sets, it fails when datasets become large. We present a novel method for finding feature planes in large datasets. Our method uses a hierarchical approach which breaks the dataset into subcomponents that can be processed more efficiently. These subsets are then reconfigured to find larger subcomponents, until final candidate points can be found. The original data set is then used along with the candidate points to generate final planar textual information. The Hierarchical Plane Extraction (HPE) method is able to achieve results in a fraction of the time of the standard RANSAC approach with generally higher quality results.
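
For readers unfamiliar with the baseline mentioned above, the following is a minimal sketch of standard RANSAC plane detection, the approach the abstract says breaks down on large data sets. It is not the Hierarchical Plane Extraction method itself; the function names, the default threshold and iteration count, and the fixed seed are illustrative assumptions.

```python
import math
import random


def plane_from_points(p, q, r):
    """Return (unit_normal, d) with normal . x + d = 0, or None if collinear."""
    u = (q[0] - p[0], q[1] - p[1], q[2] - p[2])
    v = (r[0] - p[0], r[1] - p[1], r[2] - p[2])
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    length = math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
    if length < 1e-12:
        return None  # the three samples do not define a plane
    n = (n[0] / length, n[1] / length, n[2] / length)
    d = -(n[0] * p[0] + n[1] * p[1] + n[2] * p[2])
    return n, d


def ransac_plane(points, threshold=0.01, iterations=200, seed=0):
    """Fit one dominant plane; returns (plane, inliers).

    Each iteration scans every point, which is why cost grows quickly
    with data set size. All parameter defaults here are assumptions.
    """
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        p, q, r = rng.sample(points, 3)
        plane = plane_from_points(p, q, r)
        if plane is None:
            continue
        n, d = plane
        # Inliers are points within `threshold` of the candidate plane.
        inliers = [pt for pt in points
                   if abs(n[0] * pt[0] + n[1] * pt[1] + n[2] * pt[2] + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers
```

Because every candidate plane is scored against the full point set, the work per iteration is linear in the number of points, which hints at why a hierarchical decomposition into smaller subsets can be attractive.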

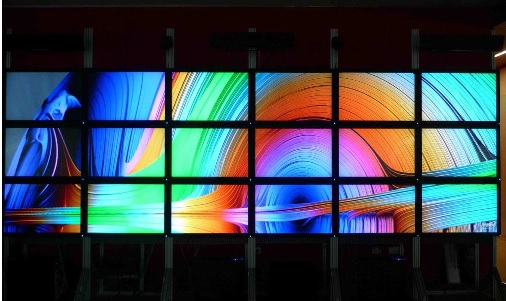

Assessing exertions: How an increased level of immersion unwittingly leads to more natural behavior

Virtual Reality (VR), 2014 IEEE,

107-108

This paper utilizes muscle exertions as a means to affect and study the behavior of participants in a virtual environment. Participants performed a simple lifting task both physically, using an actual weight, and virtually. In the virtual environment, participants were presented with two different virtual presentation methods: one in which the weights were shown as a 3D model (the Immersive Visuals scenario) and one in which the weights were shown as a simple line (the bland scenario). In the virtual scenarios, the object is only lifted when the participant’s muscle activity, measured by surface EMG, exceeds a calibrated minimum level as described in previous literature. While participants were able to perceive the difference between various weights both physically and virtually, we found no significant differences in the perceived efforts between the presentation methods. However, while the participants subjectively indicated that their effort was the same for each of these presentation methods, we found significant differences in the muscle activity between the two virtual presentation methods. For all primary mover muscle groups and weights, the more immersive virtual presentation method led to exertions that were much closer to the exertions used for the physical weights.

Poster: Applying Kanban to healthcare via immersive 3D virtual reality

3D User Interfaces (3DUI), 2014 IEEE Symposium on

149-150

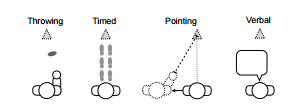

This project aims to enable the study of group process in simulated environments that are often inaccessible to physically study. We choose to study the inventory management technique known as Kanban in a simulated operating room. A multi-viewer immersive 3D VR is used to simulate the scenario. Fourteen participants tested the 15-minute activity in the environment. Participants interacted with the system in pairs, each participant given their own unique viewpoint. We found that iVR is an acceptable tool to learn and apply Kanban. This project opens opportunities for other engineering techniques to be simulated before their application in industry.

Human Factors: The Journal of the Human Factors and Ergonomics Society

0018720814523067

Objective: In this study, we compared how users locate physical and equivalent three-dimensional images of virtual objects in a cave automatic virtual environment (CAVE) using the hand to examine how human performance (accuracy, time, and approach) is affected by object size, location, and distance.

Background: Virtual reality (VR) offers the promise to flexibly simulate arbitrary environments for studying human performance. Previously, VR researchers primarily considered differences between virtual and physical distance estimation rather than reaching for close-up objects.

Method: Fourteen participants completed manual targeting tasks that involved reaching for corners on equivalent physical and virtual boxes of three different sizes. Predicted errors were calculated from a geometric model based on user interpupillary distance, eye location, distance from the eyes to the projector screen, and object.

Results: Users were 1.64 times less accurate (p < .001) and spent 1.49 times more time (p = .01) targeting virtual versus physical box corners using the hands. Predicted virtual targeting errors were on average 1.53 times (p < .05) greater than the observed errors for farther virtual targets but not significantly different for close-up virtual targets.

Conclusion: Target size, location, and distance, in addition to binocular disparity, affected virtual object targeting inaccuracy. Observed virtual box inaccuracy was less than predicted for farther locations, suggesting possible influence of cues other than binocular vision.

Application: Human physical interaction with objects in VR for simulation, training, and prototyping involving reaching and manually handling virtual objects in a CAVE is more accurate than predicted when locating farther objects.

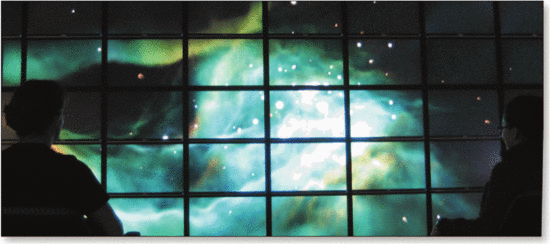

RSVP: Ridiculously Scalable Video Playback on Clustered Tiled Displays

Multimedia (ISM), 2013 IEEE International Symposium on

pp. 9-16, 9-11 Dec. 2013

This paper introduces a distributed approach for playback of video content at resolutions of 4K (digital cinema) and well beyond. This approach is designed for scalable, high-resolution, multi-tile display environments, which are controlled by a cluster of machines, with each node driving one or multiple displays. A preparatory tiling pass separates the original video into a user definable n-by-m array of equally sized video tiles, each of which is individually compressed. By only reading and rendering the video tiles that correspond to a given node’s viewpoint, the computation power required for video playback can be distributed over multiple machines, resulting in a highly scalable video playback system. This approach exploits the computational parallelism of the display cluster while only using minimal network resources in order to maintain software-level synchronization of the video playback. While network constraints limit the maximum resolution of other high-resolution video playback approaches, this algorithm is able to scale to video at resolutions of tens of millions of pixels and beyond. Furthermore the system allows for flexible control of the video characteristics, allowing content to be interactively reorganized while maintaining smooth playback. This approach scales well for concurrent playback of multiple videos and does not require any specialized video decoding hardware to achieve ultra-high resolution video playback.
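
The tile-selection idea described above can be sketched in a few lines. The example below is an illustrative assumption about how a node might decide which tiles of an n-by-m grid overlap its viewport; it is not code from the RSVP system, and the function name `tiles_for_viewport` and its argument layout are hypothetical.

```python
def tiles_for_viewport(video_w, video_h, cols, rows, viewport):
    """List the (row, col) tiles a display node needs to read.

    `viewport` is (x0, y0, x1, y1) in video pixel coordinates, with the
    right and bottom edges exclusive. Tiles are equally sized, so the
    video dimensions are assumed to divide evenly by the grid size.
    """
    if video_w % cols or video_h % rows:
        raise ValueError("video size must divide evenly into the tile grid")
    tile_w = video_w // cols
    tile_h = video_h // rows

    x0, y0, x1, y1 = viewport
    # Clamp the viewport to the video bounds before mapping to tiles.
    x0, y0 = max(0, x0), max(0, y0)
    x1, y1 = min(video_w, x1), min(video_h, y1)
    if x1 <= x0 or y1 <= y0:
        return []  # this node sees none of the video

    first_col, last_col = x0 // tile_w, (x1 - 1) // tile_w
    first_row, last_row = y0 // tile_h, (y1 - 1) // tile_h
    return [(r, c)
            for r in range(first_row, last_row + 1)
            for c in range(first_col, last_col + 1)]
```

A node whose viewport lines up with the tile grid reads exactly one tile, while a viewport that straddles tile boundaries reads every tile it touches; in either case the decoding work stays proportional to what the node displays rather than to the full video resolution.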

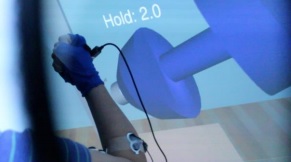

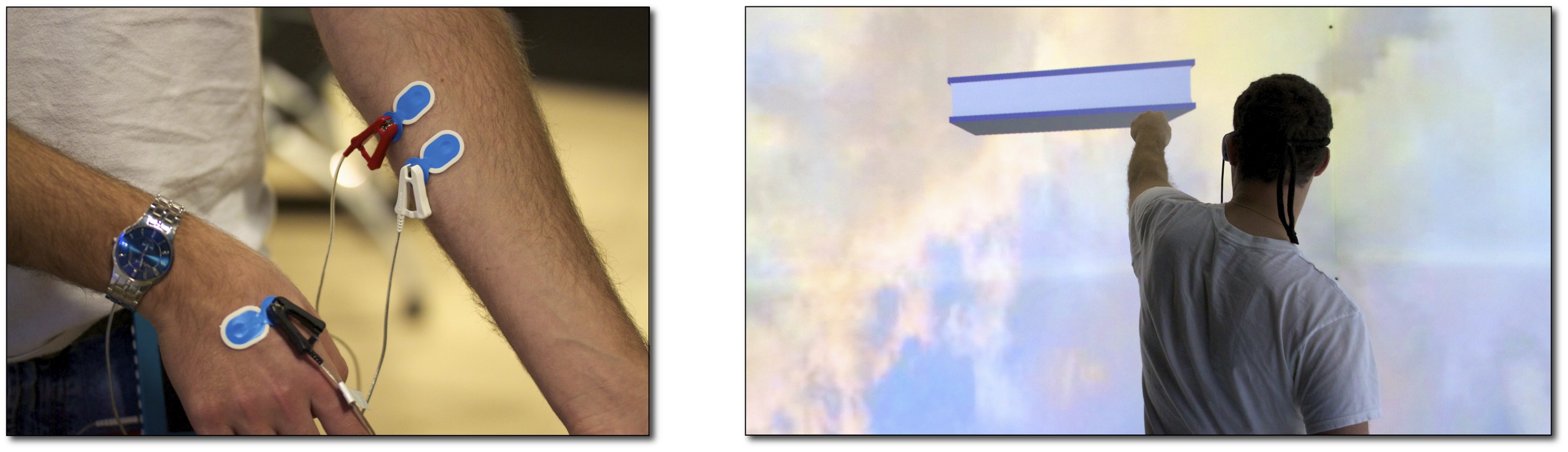

Virtual Exertions: Physical Interactions in a Virtual Reality CAVE for Simulating Forceful Tasks

Proceedings of the Human Factors and Ergonomics Society Annual Meeting

v. 57, n. 1, pp. 967-971, 2013

This paper introduces the concept of virtual exertions, which utilizes real-time feedback from electromyograms (EMG), combined with tracked body movements, to simulate forceful exertions (e.g. lifting, pushing, and pulling) against projections of virtual reality objects. The user acts as if there is a real object and moves and contracts the same muscles normally used for the desired activities to suggest exerting forces against virtual objects actually viewed in their own hands as they are grasped and moved. In order to create virtual exertions, EMG muscle activity is monitored during rehearsed co-contractions of agonist/antagonist muscles used for specific exertions, and contraction patterns and levels are combined with tracked motion of the user’s body and hands for identifying when the participant is exerting sufficient force to displace the intended object. Continuous 3D visual feedback to the participant displays mechanical work against virtual objects with simulated inertial properties. A pilot study, where four participants performed both actual and virtual dumbbell lifting tasks, observed that ratings of perceived exertions (RPE), biceps EMG recruitment, and localized muscle fatigue (mean power frequency) were consistent with the actual task. Biceps and triceps EMG co-contractions were proportionally greater for the virtual case.

Perceptual calibration for immersive display environments

Visualization and Computer Graphics, IEEE Transactions on

19 (4), 691-700

The perception of objects, depth, and distance has been repeatedly shown to be divergent between virtual and physical environments. We hypothesize that many of these discrepancies stem from incorrect geometric viewing parameters, specifically that physical measurements of eye position are insufficiently precise to provide proper viewing parameters. In this paper, we introduce a perceptual calibration procedure derived from geometric models. While most research has used geometric models to predict perceptual errors, we instead use these models inversely to determine perceptually correct viewing parameters. We study the advantages of these new psychophysically determined viewing parameters compared to the commonly used measured viewing parameters in an experiment with 20 subjects. The perceptually calibrated viewing parameters for the subjects generally produced new virtual eye positions that were wider and deeper than standard practices would estimate. Our study shows that perceptually calibrated viewing parameters can significantly improve depth acuity, distance estimation, and the perception of shape.

Poster: Say it to see it: A speech based immersive model retrieval system

3D User Interfaces (3DUI), 2013 IEEE Symposium on,

181-182

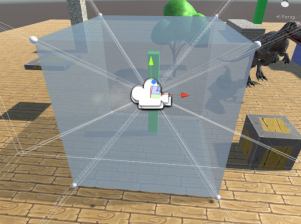

Traditionally, 3D models used within a virtual reality application must reside locally on the system in order to be properly loaded and displayed. These models are often manually created using traditional 3D design applications such as Autodesk products or Trimble SketchUp. Within the past five to 10 years, web-based repositories of 3D models, such as the Trimble 3D Warehouse, have become available for sharing 3D models with other users around the world. PC speech recognition capabilities have also become much more commonplace and accessible to developers, particularly with the advent of the Microsoft Kinect. We’ve developed a method to perform a search of an online model repository, the Trimble 3D Warehouse, from within our fully immersive CAVE by using speech. Search results are displayed within the CAVE, and the user can select a model he or she wishes to download and see drop into the CAVE. The newly acquired model can then be instantly manipulated and placed within the user’s current scene at a desired location.

SculptUp: A rapid, immersive 3D modeling environment

3D User Interfaces (3DUI), 2013 IEEE Symposium on,

199-200

Modeling complex objects and effects in mainstream graphics applications is not an easy task. Typically it takes a user months or years to adapt to the comprehensive interfaces in order to make compelling computer graphics. Consequently, there is an imposing barrier to entry for novice users to fully utilize three-dimensional graphics as an artistic medium. We present SculptUp, an immersive modeling system that makes generating complex CGI easier and faster via an interaction paradigm that resembles real-world sculpting and painting.

Visual analytics of inherently noisy crowdsourced data on ultra high resolution displays

Aerospace Conference, 2013 IEEE,

1-8

The increasing prevalence of distributed human microtasking, crowdsourcing, has followed the exponential increase in data collection capabilities. The large scale and distributed nature of these microtasks produce overwhelming amounts of information that is inherently noisy due to the nature of human input. Furthermore, these inputs create a constantly changing dataset with additional information added on a daily basis. Methods to quickly visualize, filter, and understand this information over temporal and geospatial constraints are key to the success of crowdsourcing. This paper presents novel methods to visually analyze geospatial data collected through crowdsourcing on top of remote sensing satellite imagery. An ultra high resolution tiled display system is used to explore the relationship between human and satellite remote sensing data at scale. A case study is provided that evaluates the presented technique in the context of an archaeological field expedition. A team in the field communicated in real-time with and was guided by researchers in the remote visual analytics laboratory, swiftly sifting through incoming crowdsourced data to identify target locations that were identified as viable archaeological sites.

Cultivating Imagination: Development and Pilot Test of a Therapeutic Use of an Immersive Virtual Reality CAVE

AMIA Annual Symposium Proceedings 2013,

135

As informatics applications grow from being data collection tools to platforms for action, the boundary between what constitutes informatics applications and therapeutic interventions begins to blur. Emerging computer-driven technologies such as virtual reality (VR) and mHealth apps may serve as clinical interventions. As part of a larger project intended to provide complements to cognitive behavioral approaches to health behavior change, an interactive scenario was designed to permit unstructured play inside an immersive 6-sided VR CAVE. In this pilot study we examined the technical and functional performance of the CAVE scenario and human tolerance of immersive CAVE experiences, and explored human imagination and the manner in which activity in the CAVE scenarios varied by an individual’s level of imagination. Nine adult volunteers participated in a pilot-and-feasibility study. Participants tolerated 15-minute-long exposure to the scenarios, and navigated through the virtual world. Relationships between personal characteristics and behaviors are reported and explored.

Online real-time presentation of virtual experiences for external viewers

Proceedings of the 18th ACM symposium on Virtual reality software and technology

45-52

Externally observing the experience of a participant in a virtual environment is generally accomplished by viewing an egocentric perspective. Monitoring this view can often be difficult for others to watch due to unwanted camera motions that appear unnatural and unmotivated. We present a novel method for reducing the unnaturalness of these camera motions by minimizing camera movement while maintaining the context of the participant’s observations. For each time-step, we compare the parts of the scene viewed by the virtual participant to the parts of the scene viewed by the camera. Based on the similarity of these two viewpoints we next determine how the camera should be adjusted. We present two means of adjustment, one which continuously adjusts the camera and a second which attempts to stop camera movement when possible. Empirical evaluation shows that our method can produce paths that have substantially shorter travel distances, are easier to watch and maintain the original observations of the participant’s virtual experience.

Envisioning the future of home care: applications of immersive virtual reality.

Studies in health technology and informatics 192,

599-602

Accelerating the design of technologies to support health in the home requires (1) better understanding of how the household context shapes consumer health behaviors and (2) the opportunity to afford engineers, designers, and health professionals the chance to systematically study the home environment. We developed the Living Environments Laboratory (LEL) with a fully immersive, six-sided virtual reality CAVE to enable recreation of a broad range of household environments. We have successfully developed a virtual apartment, including a kitchen, living space, and bathroom. Over 2000 people have visited the LEL CAVE. Participants use an electronic wand to activate common household affordances such as opening a refrigerator door or lifting a cup. Challenges currently being explored include creating natural gestures to interface with virtual objects, developing robust, simple procedures to capture actual living environments and render them in a 3D visualization, and devising systematic, stable terminologies to characterize home environments.

Effective replays and summarization of virtual experiences

Visualization and Computer Graphics, IEEE Transactions on

18 (4), 607-616

Direct replay of the experience of a user in a virtual environment is difficult for others to watch due to unnatural camera motions. We present methods for replaying and summarizing these egocentric experiences that effectively communicate the user’s observations while reducing unwanted camera movements. Our approach summarizes the viewpoint path as a concise sequence of viewpoints that cover the same parts of the scene. The core of our approach is a novel content-dependent metric that can be used to identify similarities between viewpoints. This enables viewpoints to be grouped by similar contextual view information and provides a means to generate novel viewpoints that can encapsulate a series of views. These resulting encapsulated viewpoints are used to synthesize new camera paths that convey the content of the original viewer’s experience. Projecting the initial movement of the user back on the scene can be used to convey the details of their observations, and the extracted viewpoints can serve as bookmarks for control or analysis. Finally, we present performance analysis along with two forms of validation to test whether the extracted viewpoints are representative of the viewer’s original observations and to test the overall effectiveness of the presented replay methods.

Virtual Exertions: a user interface combining visual information

3D User Interfaces (3DUI), 2012 IEEE Symposium on,

85-88

Virtual Reality environments have the ability to present users with rich visual representations of simulated environments. However, means to interact with these types of illusions are generally unnatural in the sense that they do not match the methods humans use to grasp and move objects in the physical world. We demonstrate a system that enables users to interact with virtual objects with natural body movements by combining visual information, kinesthetics and biofeedback from electromyograms (EMG). Our method allows virtual objects to be grasped, moved and dropped through muscle exertion classification based on physical world masses. We show that users can consistently reproduce these calibrated exertions, allowing them to interface with objects in a novel way.
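One way to picture exertion classification calibrated against physical masses is the minimal sketch below. It assumes that exertion is summarized as the RMS amplitude of an EMG window and that a virtual object can be lifted once the current exertion reaches the level calibrated for the nearest equal-or-heavier physical mass. These choices, and the names `rms`, `calibrate`, and `can_lift`, are illustrative assumptions rather than the method used in the paper.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of one window of EMG samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def calibrate(recordings):
    """Map each calibrated mass (kg) to the mean RMS over its recorded windows.

    recordings: dict of mass_kg -> list of EMG sample windows captured
    while the user held a physical object of that mass (hypothetical format).
    """
    return {
        mass: sum(rms(w) for w in windows) / len(windows)
        for mass, windows in recordings.items()
    }


def can_lift(calibration, object_mass_kg, current_window):
    """True if the current exertion reaches the level calibrated for the object.

    Uses the lightest calibrated mass that is at least the object's mass,
    falling back to the heaviest calibrated mass if none is heavy enough.
    """
    candidates = [m for m in calibration if m >= object_mass_kg]
    reference = min(candidates) if candidates else max(calibration)
    return rms(current_window) >= calibration[reference]
```

In this sketch a heavier virtual object simply demands a stronger contraction before it responds, so the required effort tracks the calibrated physical masses.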

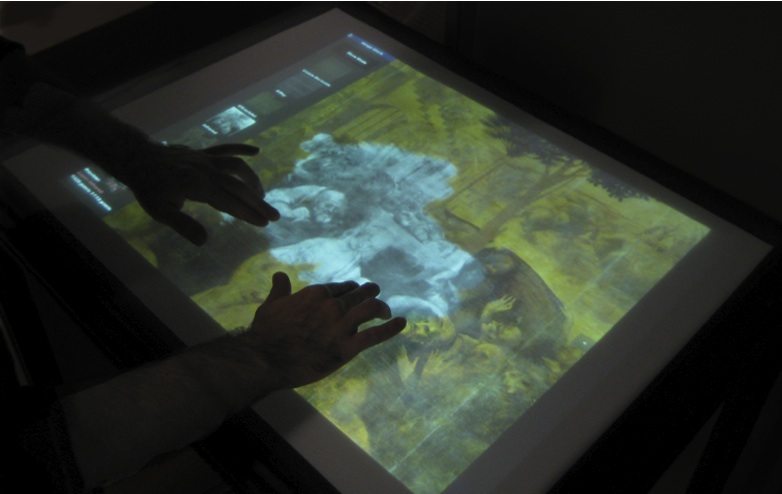

CGLXTouch: A multi-user multi-touch approach for ultra-high-resolution collaborative workspaces

Future Generation Computer Systems

27 (6), 649-656

This paper presents an approach for empowering collaborative workspaces through ultra-high resolution tiled display environments concurrently interfaced with multiple multi-touch devices. Multi-touch table devices are supported along with portable multi-touch tablet and phone devices, which can be added to and removed from the system on the fly. Events from these devices are tagged with a device identifier and are synchronized with the distributed display environment, enabling multi-user support. As many portable devices are not equipped to render content directly, a remotely rendered scene is streamed in. The presented approach scales for large numbers of devices, providing access to a multitude of hands-on techniques for collaborative data analysis.
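The idea of tagging events with a device identifier can be sketched as follows. The `TouchEvent` record, its fields, and the helpers `merge_streams` and `group_by_device` are hypothetical names chosen for illustration; they are not part of CGLX or of the system described in the paper.

```python
from dataclasses import dataclass
import heapq


@dataclass(frozen=True)
class TouchEvent:
    """One touch sample, tagged with the device that produced it."""
    timestamp: float
    device_id: str
    x: float
    y: float


def merge_streams(*streams):
    """Merge per-device event lists (each already time-ordered) into one stream."""
    return list(heapq.merge(*streams, key=lambda e: e.timestamp))


def group_by_device(events):
    """Split a merged stream back into per-device lists, preserving order."""
    grouped = {}
    for event in events:
        grouped.setdefault(event.device_id, []).append(event)
    return grouped
```

Because every event carries its `device_id`, a device can join or leave without disturbing the others' event streams, and simultaneous touches from different users remain distinguishable after merging.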

System for inspection of large high-resolution radiography datasets

Aerospace Conference, 2011 IEEE,

1-9

High-resolution image collections pose unique challenges to analysts tasked with managing the associated data assets and deriving new information from them. While significant progress has been made towards rapid automated filtering, alignment, segmentation, characterization, and feature identification from image collections, the extraction of new insights still strongly depends on human intervention. In real-time capture and immediate-mode analysis environments where image data has to be continuously and interactively processed, a broad set of challenges in the image-driven verification and analysis cycle have to be addressed. A framework for interactive and intuitive inspection of large, high-resolution image data sets is presented, leveraging the strength of the human visual system for large-scale image processing. A case study is provided for an X-ray radiography system, covering the scanner-to-screen data management and representation pipeline, resulting in a visual analytics environment enabling analytical reasoning by means of interactive and intuitive visualization.

Interactive image fusion in distributed visualization environments

Aerospace Conference, 2011 IEEE,

1-7

This paper presents an immediate-mode, integrated approach to image fusion and visualization of multi-band satellite data, drawing from the computational resources of networked, high-resolution, tiled display environments. The presented workflow enables researchers to intuitively and interactively experiment with all tunable parameters, exposed through external devices such as MIDI controllers, to rapidly modify the image processing pipeline and resulting visuals. This presented approach demonstrates significant savings across a set of case studies, with respect to overall data footprint, processing and analysis time complexity.

The future of the CAVE

Central European Journal of Engineering 1 (1),

16-37

The CAVE, a walk-in virtual reality environment typically consisting of 4–6 3 m-by-3 m sides of a room made of rear-projected screens, was first conceived and built in 1991. In the nearly two decades since its conception, the supporting technology has improved so that current CAVEs are much brighter, at much higher resolution, and have dramatically improved graphics performance. However, rear-projection-based CAVEs typically must be housed in a 10 m-by-10 m-by-10 m room (allowing space behind the screen walls for the projectors), which limits their deployment to large spaces. The CAVE of the future will be made of tessellated panel displays, eliminating the projection distance, but the implementation of such displays is challenging. Early multi-tile, panel-based, virtual-reality displays have been designed, prototyped, and built for the King Abdullah University of Science and Technology (KAUST) in Saudi Arabia by researchers at the University of California, San Diego, and the University of Illinois at Chicago. New means of image generation and control are considered key contributions to the future viability of the CAVE as a virtual-reality device.

Giga-stack: A method for visualizing giga-pixel layered imagery on massively tiled displays

Future Generation Computer Systems 26 (5),

693-700

In this paper, we present a technique for the interactive visualization and interrogation of multi-dimensional giga-pixel imagery. Co-registered image layers representing discrete spectral wavelengths or temporal information can be seamlessly displayed and fused. Users can freely pan and zoom, while swiftly transitioning through data layers, enabling intuitive analysis of massive multi-spectral or time-varying records. A data resource aware display paradigm is introduced which progressively and adaptively loads data from remote network attached storage devices. The technique is specifically designed to work with scalable, high-resolution, massively tiled display environments. By displaying hundreds of mega-pixels worth of visual information all at once, several users can simultaneously compare and contrast complex data layers in a collaborative environment.

DIGI-vis: Distributed interactive geospatial information visualization

Aerospace Conference, 2010 IEEE,

1-7

Geospatial information systems provide an abundance of information for researchers and scientists. Unfortunately, this type of data can usually only be analyzed a few megapixels at a time, giving researchers a very narrow view into these voluminous data sets. We propose a distributed data gathering and visualization system that allows researchers to view these data at hundreds of megapixels simultaneously. This system allows scientists to view real-time geospatial information at unprecedented levels, expediting analysis, interrogation, and discovery.

Wipe-Off: an intuitive interface for exploring ultra-large multi-spectral data sets for cultural heritage diagnostics

Computer Graphics Forum (2009),

v. 28, n. 8, pp. 2291-2301.

A visual analytics technique for the intuitive, hands-on analysis of massive, multi-dimensional and multi-variate data is presented. This multi-touch-based technique introduces a set of metaphors such as wiping, scratching, sandblasting, squeezing and drilling, which allow for rapid analysis of global and local characteristics in the data set, accounting for factors such as gesture size, pressure and speed. A case study is provided for the analysis of multi-spectral image data of cultural artefacts. By aligning multi-spectral layers in a stack, users can apply different multi-touch metaphors to investigate features across different wavelengths. With this technique, flexibly definable regions can be interrogated concurrently without affecting surrounding data.

VideoBlaster: a distributed, low-network bandwidth method for multimedia playback on tiled display systems

Multimedia, 2009. ISM’09. 11th IEEE International Symposium on,

201-206

Tiled display environments present opportune workspaces for displaying multimedia content. Oftentimes, displaying this kind of audio/visual information requires a substantial amount of network bandwidth. In this paper we present a method for distributing the decoding over the entire display environment, allowing for substantially less network overhead. This method also allows for interactive configuration of the visual workspace. The method described scales independently of video frame size and produces lower latency compared to streaming approaches.

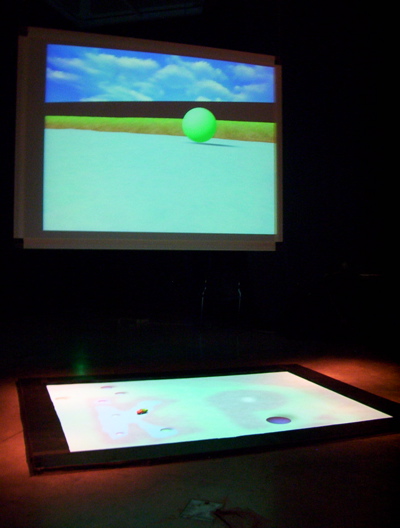

Tangled reality

Virtual Reality 12 (1),

37-45

Leonardo da Vinci was a strong advocate for using sketches to stimulate the human imagination. Sketching is often considered to be integral to the process of design, providing an open workspace for ideas. For these same reasons, children use sketching as a simple way to express visual ideas. By merging the abstraction of human drawings and the freedom of virtual reality with the tangibility of physical tokens, Tangled Reality creates a rich mixed reality workspace. Tangled Reality allows users to build virtual environments based on simple colored sketches and traverse them using physical vehicles overlaid with virtual imagery. This setup allows the user to “build” and “experience” mixed reality simulations without ever touching a standard computer interface.

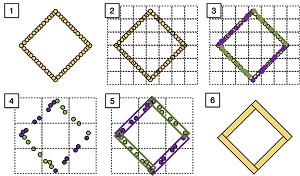

Virtual Bounds: a teleoperated mixed reality

Virtual Reality 10 (1),

41-47

This paper introduces a mixed reality workspace that allows users to combine physical and computer-generated artifacts, and to control and simulate them within one fused world. All interactions are captured, monitored, modeled and represented with pseudo-real world physics. The objective of the presented research is to create a novel system in which the virtual and physical world would have a symbiotic relationship. In this type of system, virtual objects can impose forces on the physical world and physical world objects can impose forces on the virtual world. Virtual Bounds is an exploratory study allowing a physical probe to navigate a virtual world while observing constraints, forces, and interactions from both worlds. This scenario provides the user with the ability to create a virtual environment and to learn to operate real-life probes through its virtual terrain.