Accomplishments:

This week, as a group, we really started getting into our project.

– We tested the Emotive system and software, which was a lot of fun. We also started to become more familiar with the software by reading its instructions.

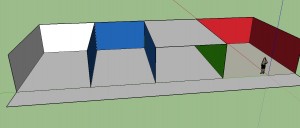

– We tried to finalize the number of rooms and make color decisions:

– Furniture or none? We’ll test both on ourselves first. We’re leaning towards neutral furniture such as in a cruise ship cabin.

– Furniture will be the same color as the walls.

– We’ll design 4 rooms off of a central room and allow the participant to walk between the rooms at will. We’ll measure how much time they spend in each room.

– White, red, blue, and green will be the colors we test. We discussed trying yellow to cover the primary colors, but decided it was too unusual a room color at the saturation we wish to test.

– Using SketchUp, we made our sample room.

– We made an appointment with a research methods professor for next week to get help drafting the right survey questions, and reached out to the emeritus professor of the class “Color Theory: Environmental Context.”

– We came up with some ideas to solidify the subject’s interactive activity while in the environment:

– Tell participants the task at hand in advance

– “Which hotel room would you most enjoy having for your vacation?”

– Integrate controller training in lobby

– Participants will state their preference verbally, by room number

– They will also offer an adjective for their favorite room

– We will use a standard prompt if the participant is indecisive

– Finally, we’ll compare the results with a paper survey we give to people, showing color pictures of the rooms

We hope to find out: does being in the immersive environment change people’s preferences for room color?
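
Since we plan to measure how much time participants spend in each room, here is a rough sketch of how that tally might work. It assumes the environment can log a timestamp each time a participant enters a room. The log format, the room names, and the `dwell_times` function are all hypothetical, just to illustrate the idea.

```python
from collections import defaultdict

def dwell_times(events, session_end):
    """Sum the seconds spent in each room.

    events: list of (timestamp_seconds, room_name) tuples, one per room
            entry, sorted by time (hypothetical log format).
    session_end: timestamp (in seconds) at which the session ended.
    """
    totals = defaultdict(float)
    # Each stay lasts until the next room entry; the last stay lasts
    # until the end of the session.
    next_events = events[1:] + [(session_end, None)]
    for (start, room), (end, _) in zip(events, next_events):
        totals[room] += end - start
    return dict(totals)

# Made-up example: a participant starts in the lobby and visits two rooms.
log = [(0, "lobby"), (30, "red"), (75, "lobby"), (80, "blue"), (140, "lobby")]
times = dwell_times(log, session_end=150)
```

Repeat visits to the same room are added together, so the result is one total per room for each participant.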

Next Week’s Plans:

– Testing the Emotive system – what does it add to our study? How would we make it work with test subjects?

– Complete a draft of the experiment design

– Complete a draft of the survey questions

– Work on the interactive activity of the subject while in the environment

Note: post written by Soheila Mohamadi