Kinects are generally used to obtain depth maps of an environment: they project an infrared speckle pattern and read its reflection to measure depth, and they capture a color image much like a video camera. This data is used to analyze the position and movement of the human body, thus giving a virtual reality gaming environment. Beyond this traditional use, a Kinect can also be used to obtain 3D point clouds, much like a LiDAR scanner. The striking difference between the two is that a Kinect can be moved in any direction and still continue to capture point cloud data, while the LiDAR scanner has to be kept stationary during its operation. The point cloud data can be processed simultaneously or subsequently to enhance it, reconstruct missing points and texturize it, and it can also serve as a map for SLAM (Simultaneous Localization and Mapping). The Point Cloud Library (PCL) and C++ will be used for almost the entire process; OpenCV and OpenNI are the next most likely frameworks.

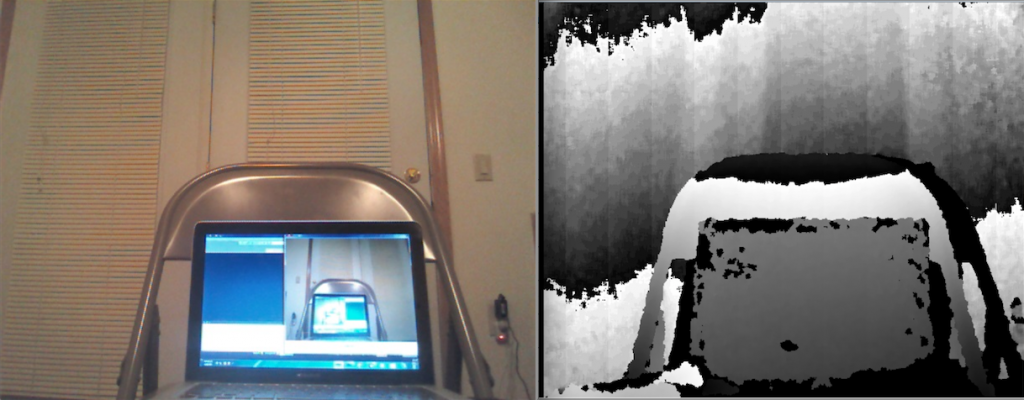

To get this project kicked off, I got Windows, Visual Studio and the Kinect SDK installed on my Mac (it took much longer than I thought). With a little help from the Kinect SDK sample apps, I was able to obtain the color and depth maps of my laptop inside my laptop inside my laptop inside...

Voilà!

From here, the next step would be to obtain a continuous point cloud from the Kinect, which would get us something like a 3D image. And from there, it all looks exciting.
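As a rough idea of what that next step involves, here is a minimal sketch of turning a single depth map into a point cloud by back-projecting each pixel through a pinhole camera model. It is not the Kinect SDK's or PCL's API: the `Point3` and `Intrinsics` structs and the `depthToCloud` function are hypothetical names for illustration, and it assumes the depth image is row-major, stores millimetres, and uses 0 for pixels with no reading. The intrinsics would have to come from calibration or from the SDK.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One 3D point in the depth camera's frame, in metres.
struct Point3 {
    float x, y, z;
};

// Pinhole intrinsics of the depth camera (hypothetical struct).
// Real values come from calibration or the SDK, not from this sketch.
struct Intrinsics {
    float fx, fy;  // focal lengths in pixels
    float cx, cy;  // principal point in pixels
};

// Back-project a row-major depth image into a list of 3D points.
// Assumption: depth is in millimetres and 0 means "no reading".
std::vector<Point3> depthToCloud(const std::vector<std::uint16_t>& depth_mm,
                                 std::size_t width, std::size_t height,
                                 const Intrinsics& k) {
    assert(depth_mm.size() == width * height);

    std::vector<Point3> cloud;
    cloud.reserve(depth_mm.size());

    for (std::size_t v = 0; v < height; ++v) {
        for (std::size_t u = 0; u < width; ++u) {
            const std::uint16_t d = depth_mm[v * width + u];
            if (d == 0) continue;  // skip pixels without a depth reading

            const float z = static_cast<float>(d) / 1000.0f;  // mm -> m
            // Pinhole model: x = (u - cx) * z / fx, y = (v - cy) * z / fy
            cloud.push_back({(static_cast<float>(u) - k.cx) * z / k.fx,
                             (static_cast<float>(v) - k.cy) * z / k.fy,
                             z});
        }
    }
    return cloud;
}
```

Calling `depthToCloud` on every incoming depth frame gives one cloud per frame; stitching those into a single continuous cloud while the Kinect moves is a separate registration problem, which is where PCL should come in.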

Naveen