What started as a project to reconstruct point cloud data using the Kinect Fusion SDK together with PCL ran into limitations, which led me to take a detour into capturing time-varying datasets before finally landing back at the Kinect Fusion SDK.

There was a very steep initial learning curve with respect to setting up the drivers and the software. My MacBook could not support the Kinect drivers because of its low-end graphics card, so I had to use Dr. Ponto’s Alienware laptop, which has a pretty fast NVIDIA GTX780 graphics card. Compiling PCL and its dependencies, OpenNI and PrimeSense, was the next step, and these had a few issues of their own when interacting with the Windows drivers. This initial phase was very frustrating, as I had not really coded much on Windows and had to figure out how to set up the hardware, drivers and software. It was almost mid-March by the time I had the entire setup running without crashing midway.

Although it was a late start, once the drivers and the software were set up, everything became exciting and fast. I was able to obtain datasets automatically using the OpenNI Grabber interface. I just had to specify the time interval between successive captures, and the program saved them as PCD files (color and depth). It wasn’t long before I had 400 PCDs of a candle burning down, captured 1 second apart, which give a realistic 3D rendering of the scene. The viewer program was quite similar: it took the number of PCD files and the time interval between displays as arguments and visualized these 3D datasets.
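To make the capture idea concrete, here is a minimal sketch of the interval logic in Python. It is not my actual capture program (that was built on the OpenNI Grabber in PCL); the `IntervalSaver` class, its `on_frame` method and the `frame_0000.pcd` naming scheme are all hypothetical names made up for illustration. The assumption is that the sensor delivers frames much faster than the desired interval, so the callback only has to decide which frames to keep.

```python
class IntervalSaver:
    """Keeps roughly one frame per `interval_s` seconds from a faster stream.

    Hypothetical helper for illustration only; it records which file names
    would be written instead of touching the disk or a real sensor.
    """

    def __init__(self, interval_s, prefix="frame"):
        self.interval_s = interval_s
        self.prefix = prefix
        self.last_saved = None   # timestamp of the last kept frame
        self.saved = []          # list of (file name, timestamp)

    def on_frame(self, timestamp_s):
        """Called once per incoming frame; returns a file name or None."""
        too_soon = (
            self.last_saved is not None
            and timestamp_s - self.last_saved < self.interval_s
        )
        if too_soon:
            return None
        name = f"{self.prefix}_{len(self.saved):04d}.pcd"
        self.last_saved = timestamp_s
        self.saved.append((name, timestamp_s))
        return name


# Simulate 5 seconds of a 30 Hz stream, keeping one frame per second.
saver = IntervalSaver(interval_s=1.0, prefix="candle")
for i in range(150):
    saver.on_frame(i / 30.0)
kept_names = [name for name, _ in saver.saved]
```

A viewer can then walk through the numbered files in order and pause for the chosen interval between frames. Note that this sketch measures the interval from the last kept frame, so with an irregular frame rate the spacing can end up slightly longer than requested.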

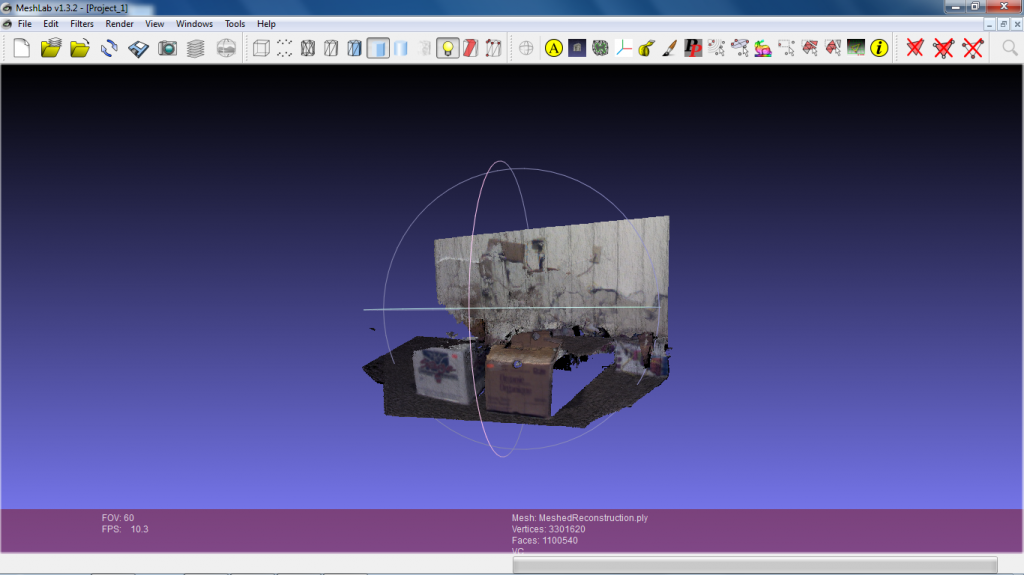

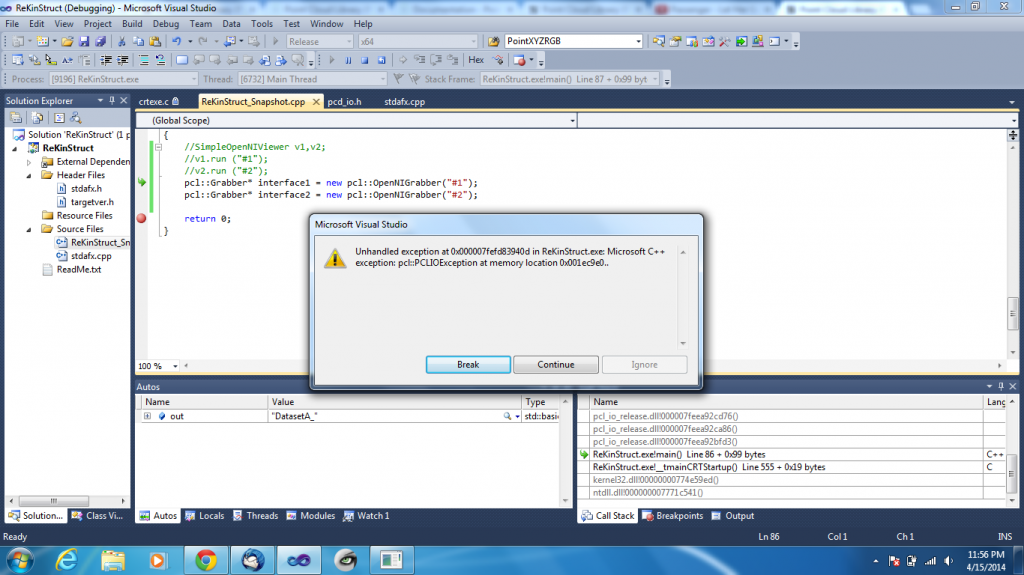

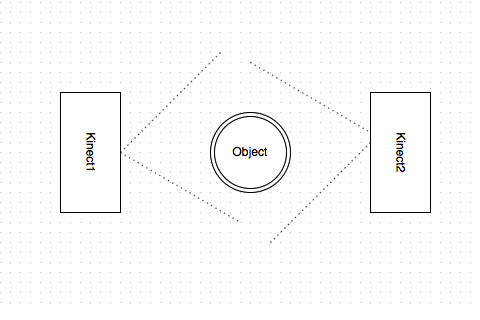

Further on, I also tried learning the Windows SDK that is provided with the Kinect. The Kinect Fusion Basics sample is a beautifully written piece of code that produces PLY files as you scan with the Kinect. PCL offers options to convert these into PCDs, which was the desired final format. I also tried running multiple Kinects simultaneously to obtain data that would fill in the shadow points of a single Kinect, but I was not able to debug an error while using the Windows SDK’s Multi Static Cameras option. Given more time, or as future work, I believe using multiple Kinects to obtain PCD files would be a good area to explore. Processing the obtained PCD files, for example hole filling and reconstruction, would also be a good topic for the future.
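For readers curious about what the PLY to PCD conversion involves, below is a rough Python sketch that copies only the vertex positions from a PLY into an ASCII PCD. This is not the PCL converter I used. The function name `ply_to_pcd` is hypothetical, and the sketch assumes an ASCII PLY whose vertex element comes first and has `x`, `y` and `z` properties; binary PLYs, colors and faces would need a real parser such as the one in PCL.

```python
def ply_to_pcd(ply_text):
    """Convert the vertex positions of an ASCII PLY string to ASCII PCD text."""
    lines = ply_text.splitlines()
    if not lines or lines[0].strip() != "ply":
        raise ValueError("not a PLY file")

    n_vertices, props, in_vertex, body_start = 0, [], False, None
    for i, line in enumerate(lines):
        tokens = line.split()
        if not tokens:
            continue
        if tokens[0] == "format" and tokens[1] != "ascii":
            raise ValueError("only ASCII PLY is handled in this sketch")
        if tokens[0] == "element":
            in_vertex = tokens[1] == "vertex"
            if in_vertex:
                n_vertices = int(tokens[2])
        elif tokens[0] == "property" and in_vertex:
            props.append(tokens[-1])      # property name is the last token
        elif tokens[0] == "end_header":
            body_start = i + 1
            break
    if body_start is None:
        raise ValueError("PLY header has no end_header line")

    # Column positions of x, y, z within each vertex row.
    ix, iy, iz = (props.index(axis) for axis in ("x", "y", "z"))
    points = []
    for line in lines[body_start:body_start + n_vertices]:
        values = line.split()
        points.append((float(values[ix]), float(values[iy]), float(values[iz])))

    header = [
        "# .PCD v0.7 - Point Cloud Data file format",
        "VERSION 0.7",
        "FIELDS x y z",
        "SIZE 4 4 4",
        "TYPE F F F",
        "COUNT 1 1 1",
        f"WIDTH {len(points)}",
        "HEIGHT 1",                        # unorganized cloud
        "VIEWPOINT 0 0 0 1 0 0 0",
        f"POINTS {len(points)}",
        "DATA ascii",
    ]
    rows = [f"{x} {y} {z}" for x, y, z in points]
    return "\n".join(header + rows) + "\n"
```

Writing `HEIGHT 1` marks the result as an unorganized cloud, which fits a scanned mesh whose vertices have no image-like row and column layout.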

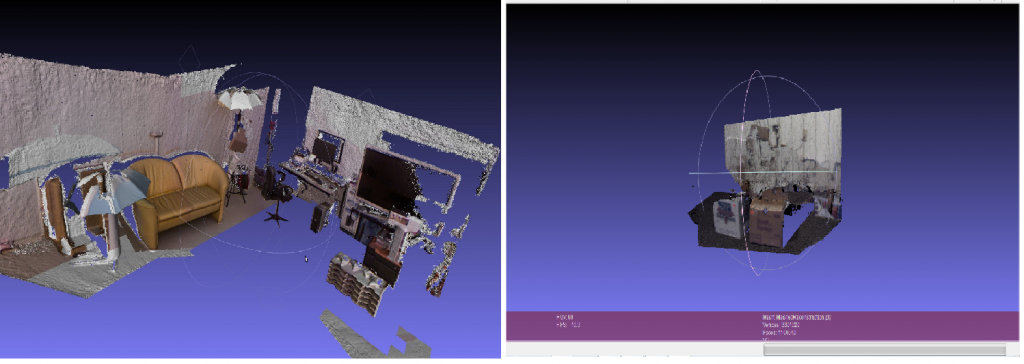

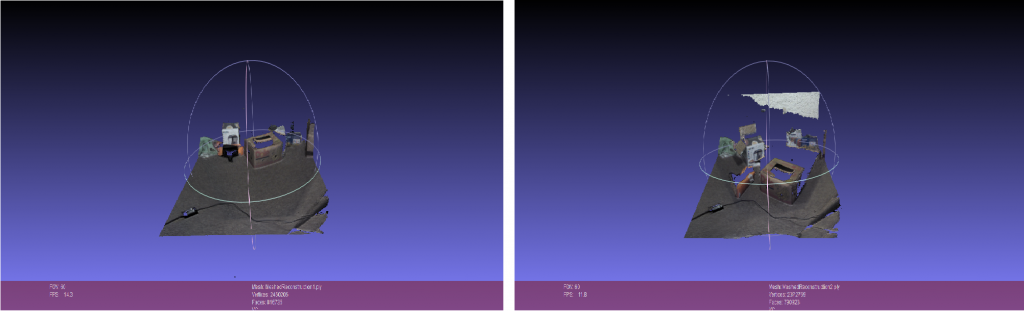

Here is a comparison of the image used on pointclouds.org (the one I put up in my second post as a target) against the image that I obtained. Both are screenshots of PLYs.

Left: Mesh from pointclouds.org; Right: Mesh obtained by me

The datasets can be found at:

Candle: https://uwmadison.box.com/s/j1zheh8b46fbxjs079xs

Walking Person: https://uwmadison.box.com/s/lxkr7a7io5rbz84xy4uw

Office: https://uwmadison.box.com/s/8mfccacpewkptymicx67

All in all, I am happy with the progress of the work. If the drivers had not been such a big hindrance, I would have had a better start at the beginning of the semester. Nevertheless, it was a great learning experience and an interesting area of study.