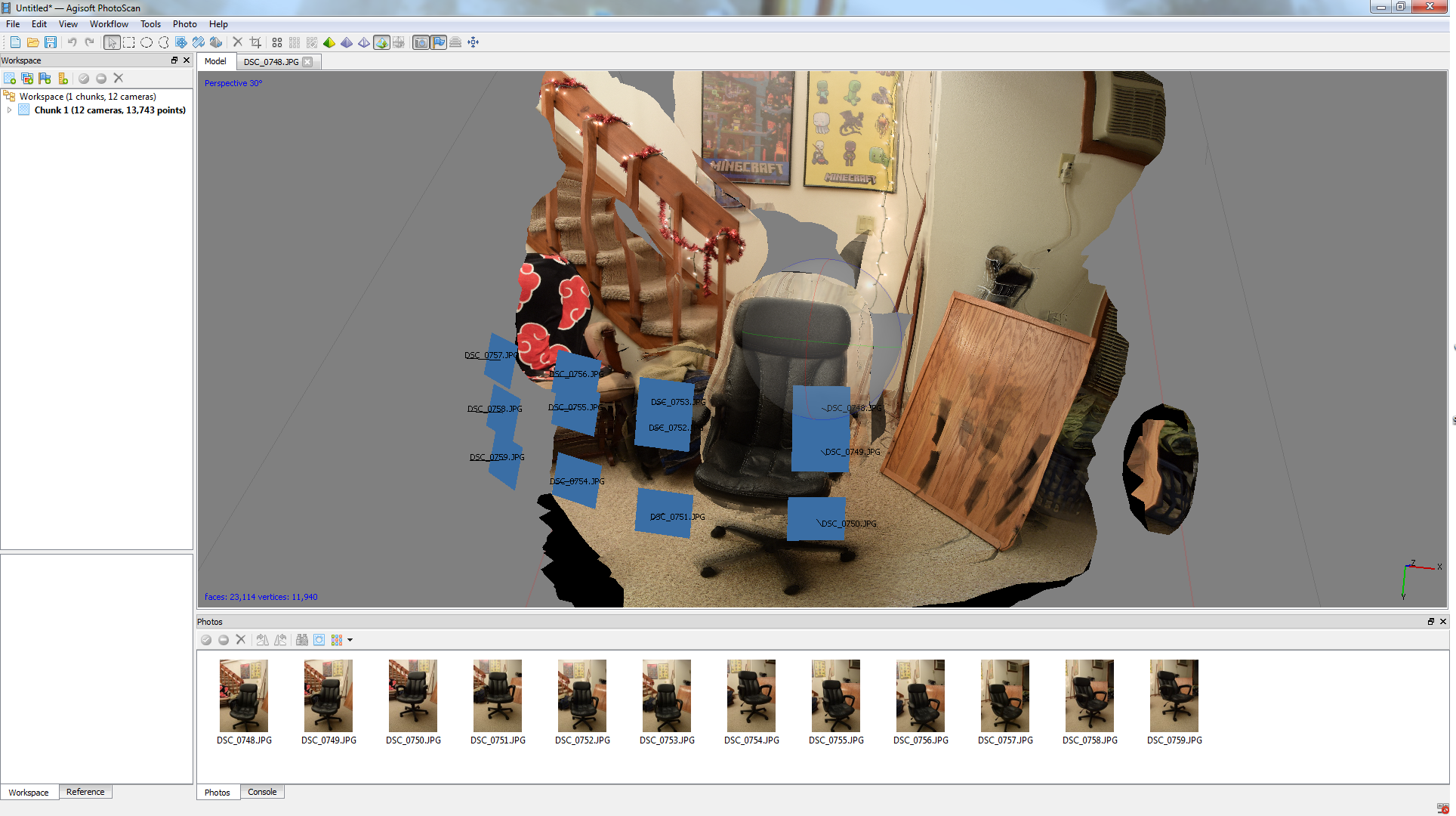

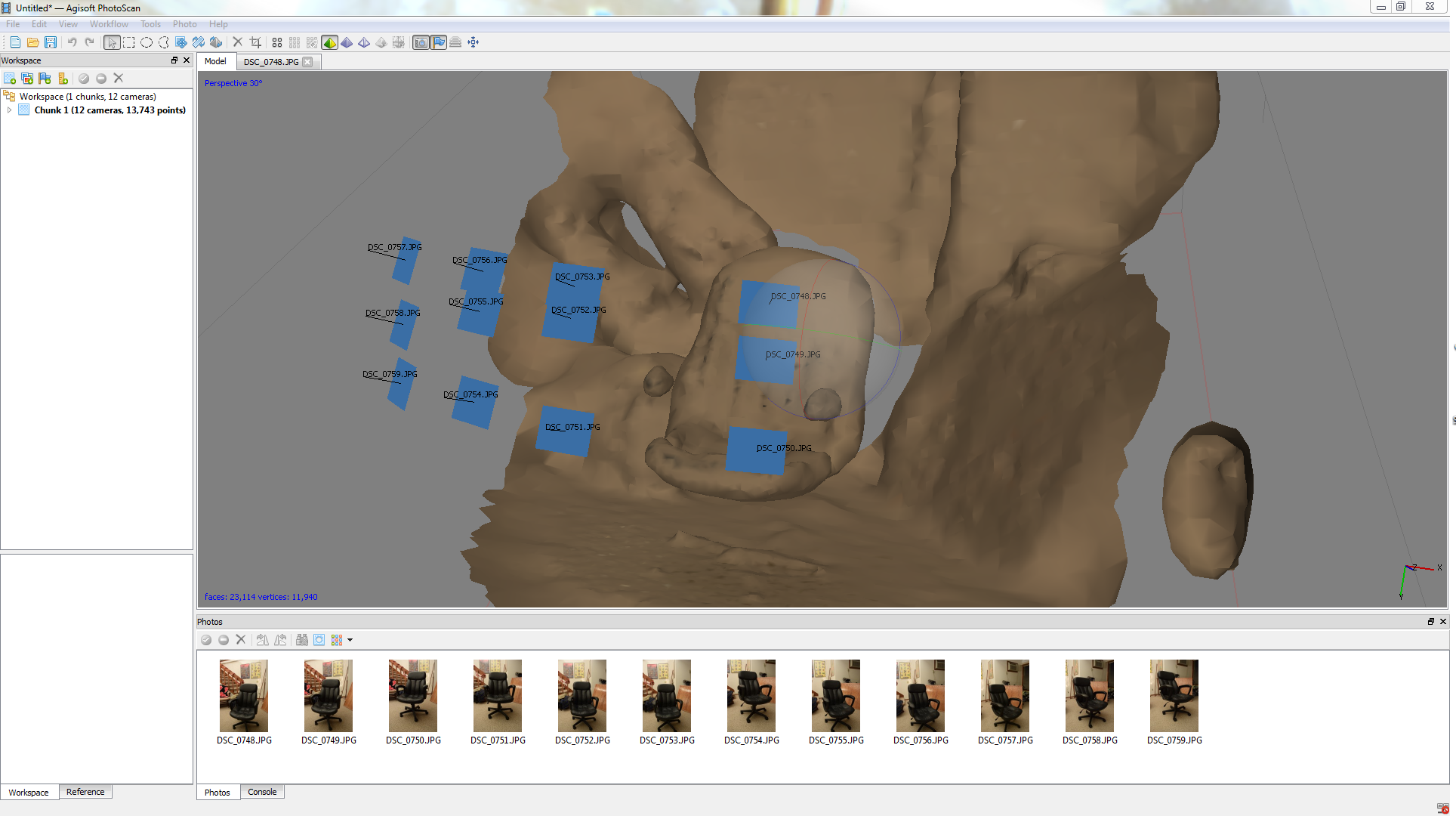

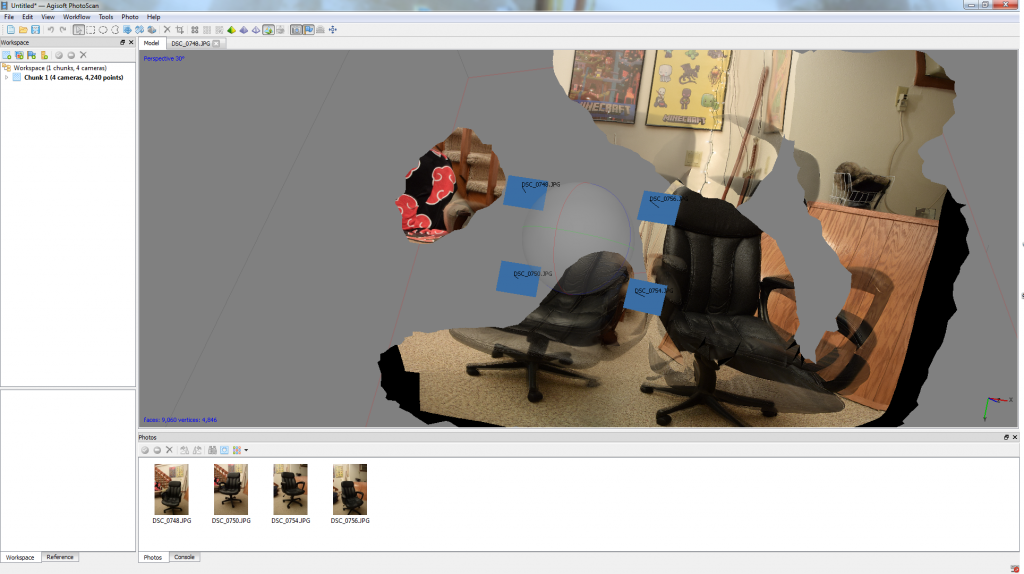

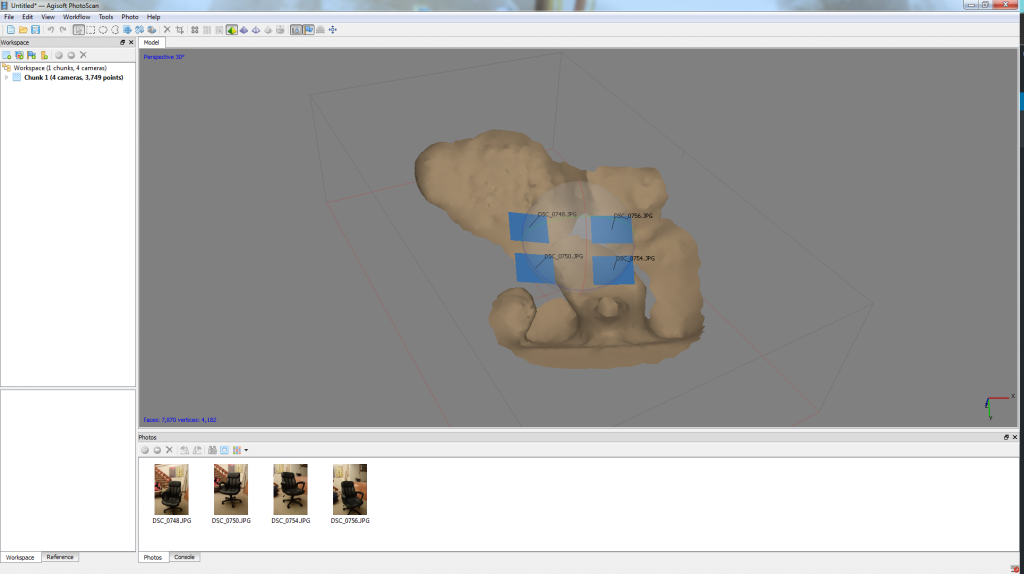

We wanted to examine how the sparseness of camera angles affects the resulting model. The goal was to simulate the camera configurations we might have available for the high-speed setup. Since a 360° model would require many more cameras than we have, we decided to target only one side of the subject to maximize overlap between images. The best results came from closely spaced camera angles at multiple elevations, but even these did not preserve much detail in the final model. This is most evident in the untextured base model; once textured, the models look deceptively better. Dense point clouds are also very computationally intensive to generate: for a 3D model with 9,060 faces, the dense point cloud has over 10,000,000 points. We also found that separating the subject from the background was difficult with so few angles.
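To put the point-cloud size in perspective, the short Python sketch below works through the counts reported above: roughly 1,100 dense points per final mesh face. The per-point storage layout (float32 position, float32 normal, 8-bit RGB color) is an assumption chosen for illustration, not the format of any particular photogrammetry tool, so the memory figure is only a rough order of magnitude.

```python
# Rough scale of the dense point cloud, using the counts reported above.
FACES = 9_060
DENSE_POINTS = 10_000_000  # "over 10,000,000", so this is a lower bound

# ASSUMED per-point layout for illustration only:
# float32 x, y, z (12 bytes) + float32 normal nx, ny, nz (12 bytes) + uint8 R, G, B (3 bytes).
BYTES_PER_POINT = 3 * 4 + 3 * 4 + 3 * 1


def points_per_face(points: int, faces: int) -> float:
    """Average number of dense points per face of the final mesh."""
    return points / faces


def cloud_size_mb(points: int, bytes_per_point: int = BYTES_PER_POINT) -> float:
    """Uncompressed size in megabytes under the assumed per-point layout."""
    return points * bytes_per_point / 1e6


if __name__ == "__main__":
    print(f"~{points_per_face(DENSE_POINTS, FACES):.0f} dense points per mesh face")
    print(f"~{cloud_size_mb(DENSE_POINTS):.0f} MB uncompressed (assumed layout)")
```

Under these assumptions the dense cloud is about 270 MB before any meshing, even though the final mesh has fewer than ten thousand faces.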

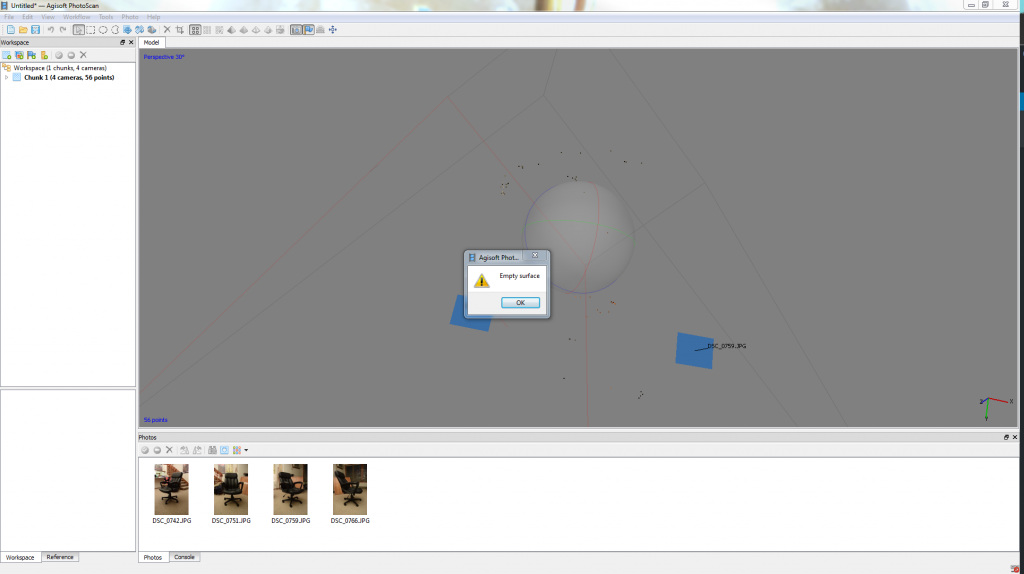

With 4 images taken from the same elevation, we obtained only 56 correlated points and no surface could be formed.

These experiments call into question the feasibility of high-speed capture with any significant detail. Even with high-resolution (14 MP), well-exposed source images, the models retained little detail. High-speed capture would produce much lower-resolution images under more difficult exposure conditions, so we suspect that models produced that way would resolve very little detail, if any.
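One way to quantify the resolution concern: at the same field of view and aspect ratio, the linear size of the surface patch each pixel covers scales with the square root of the megapixel ratio. The sketch below compares the 14 MP source images against a few high-speed sensor resolutions; those high-speed values are hypothetical placeholders, not specifications of any camera we have.

```python
import math

SOURCE_MP = 14.0  # resolution of the still images used in these experiments


def linear_sampling_ratio(source_mp: float, target_mp: float) -> float:
    """Factor by which each pixel's footprint grows per axis when going from
    source_mp to target_mp, assuming the same field of view and aspect ratio."""
    return math.sqrt(source_mp / target_mp)


# HYPOTHETICAL high-speed sensor resolutions, for illustration only.
for hs_mp in (4.0, 1.0, 0.5):
    r = linear_sampling_ratio(SOURCE_MP, hs_mp)
    print(f"{hs_mp:>4} MP: each pixel spans ~{r:.1f}x more surface per axis than at 14 MP")
```

For example, a 1 MP sensor would sample the surface about 3.7 times more coarsely along each axis, before accounting for the additional noise from short exposures.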

We would like to meet with Kevin to discuss possible alternative project goals.