First, I’d like to apologize for not posting more frequently. I’ve run into some architectural issues that I’m trying to figure out before I move forward and start coding.

When a solution has to interact with several frameworks and platforms, things can get complicated quickly. Let’s break things down and look at the options we have for each.

- Backend Framework
- Game Engine
  - Unity Engine
  - Unreal Engine
- Input Solution
  - Text-to-speech
  - Voice Messages
  - Sending emoji (pictures)

Thankfully, we can take one factor out of the equation right from the start. I will be implementing everything in the Unity game engine. I will also be targeting the HTC Vive because of its great resolution and natural controllers.

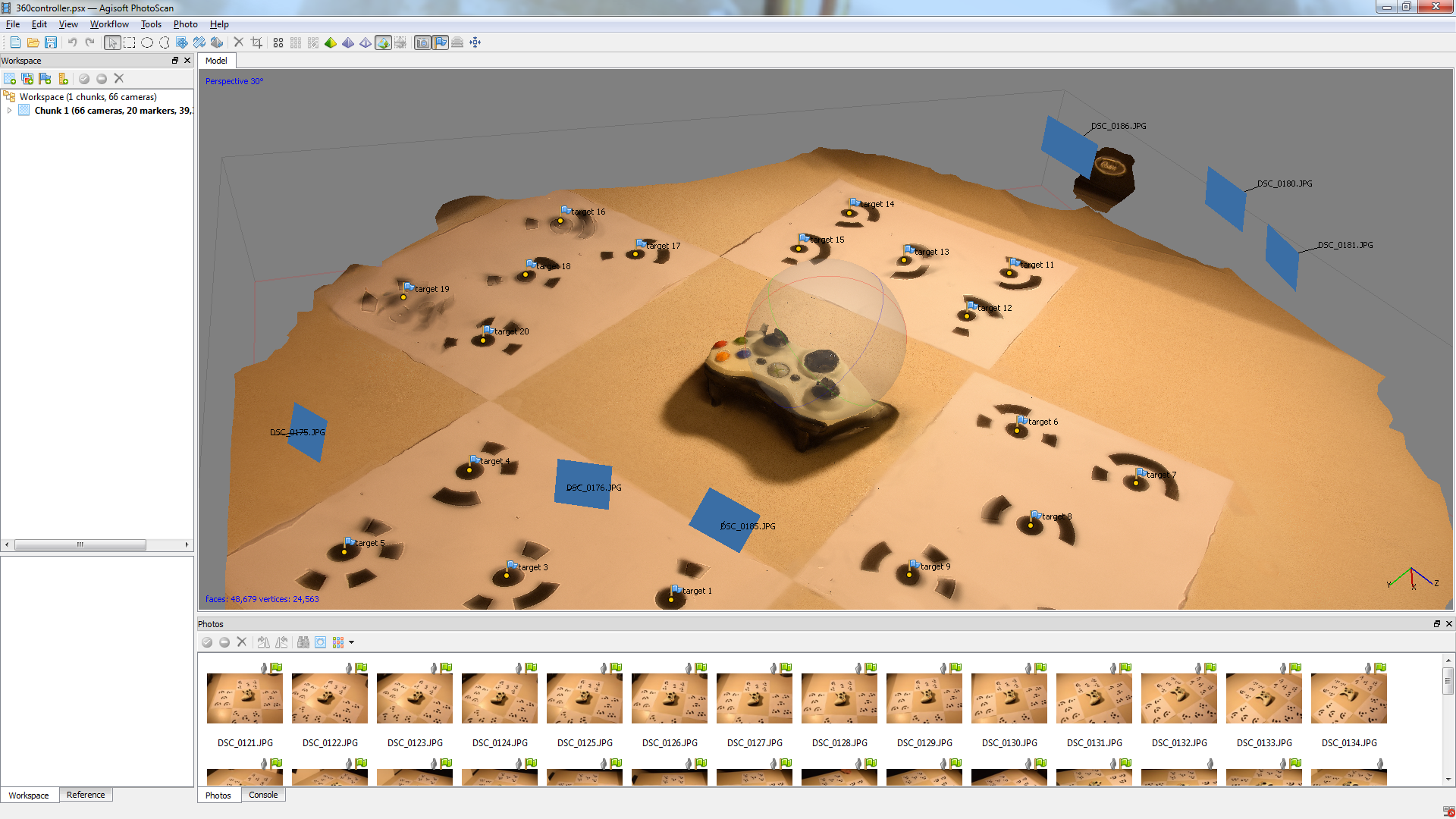

Originally, I had wanted to build a system that tested a variety of chatting methods in a lightweight way in a virtual environment. For the sake of time and scope over the semester, I will focus on sending emoji and other images, mostly because emoji is an emerging form of communication and I think its effects in a VR setting might prove to be quite interesting.

When I was researching what was possible with emoji and a lightweight, over-the-internet P2P solution, I ran into a couple of issues. Apple’s emoji are currently the most ubiquitous and up to date of the emoji typefaces. Emoji standards and definitions are set by the Unicode Consortium; companies and organizations then create their own fonts based on those standards. If I were to continue using Apple Color Emoji, my best option would be to use exported PNG versions, since emoji sent as text are drawn with whatever emoji font each computer has and would look different on each one. I could also use EmojiOne, an open-source emoji font. It will come down to whether sending text over a chat library is easier than sending images. I could also simply send a code between the users and map that code to a corresponding PNG when it reaches the second user. All are viable options at this point.
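To make the third option concrete, here is a minimal sketch of the "send a code, look up a PNG" idea. Everything in it is an assumption for illustration: the function names, the JSON message shape, and the `emoji_png` folder are hypothetical, and it assumes the PNG files are named after the emoji's lowercase hex code points (joined with hyphens for multi-code-point emoji). It is written in Python only to show the logic; the real version would live in whatever chat library and engine I end up using.

```python
import json

HEX_DIGITS = set("0123456789abcdef")


def emoji_to_code(emoji: str) -> str:
    """Turn an emoji into a hyphen-joined string of lowercase hex code points."""
    return "-".join(f"{ord(ch):x}" for ch in emoji)


def encode_message(emoji: str) -> str:
    """Sender side: wrap the code in a small JSON payload (hypothetical format)."""
    return json.dumps({"type": "emoji", "code": emoji_to_code(emoji)})


def decode_message(payload: str, asset_dir: str = "emoji_png") -> str:
    """Receiver side: map the received code to a local PNG path.

    Assumes both users ship the same PNG set, so the image looks
    identical on each machine regardless of installed fonts.
    """
    message = json.loads(payload)
    if message.get("type") != "emoji":
        raise ValueError("not an emoji message")
    code = message.get("code", "")
    parts = code.split("-")
    # Only accept hex code points so a bad payload cannot build an odd path.
    if not all(part and set(part) <= HEX_DIGITS for part in parts):
        raise ValueError(f"invalid emoji code: {code!r}")
    return f"{asset_dir}/{code}.png"


if __name__ == "__main__":
    payload = encode_message("\U0001F600")  # grinning face
    print(payload)
    print(decode_message(payload))
```

The appeal of this approach is that only a few bytes of text cross the network, while the artwork itself stays local to each user.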

This coming week I will be building a web-based version to test these different methods.

Until next time,

Tyler