Progress

This week I started building the Unity project for the Landolt C test. I also kept learning about optometry so I can better understand the principles behind what I’m doing. On Tuesday I looked more closely at some of the studies mentioned in Varadharajan, which discusses how to assess and build a new logMAR chart. It isn’t directly pertinent to the Landolt C format, but it includes small details about assessing visual acuity tests that will be useful for validating the test once it’s complete. I’m also getting a better sense of how to navigate the optometry literature as I keep looking for material more closely related to the Landolt C.

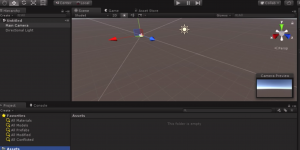

Progress on the test itself is going smoothly. On Tuesday I used Photoshop to produce images for the Landolt C and E tests. I then imported them into Unity and applied them as textures on a viewing plane. For now the structure of the test in Unity remains fairly simple: a single camera points at a viewing plane with the texture on it.
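To make the plane show a C of a known acuity, its size has to be tied to the viewing distance. The sketch below assumes the standard Landolt C proportions, where stroke width and gap width are each one fifth of the outer diameter. It also uses the usual definition MAR = 10^logMAR arcminutes. The function name `landolt_c_size` is hypothetical. The sketch further assumes that the C in the texture fills the plane edge to edge (the 5 x 5 grid), so the plane’s side length equals the C’s outer diameter.

```python
import math


def landolt_c_size(distance_m: float, logmar: float) -> float:
    """Outer diameter (metres) of a Landolt C at a given logMAR and distance.

    Assumes standard proportions: stroke width = gap width = 1/5 of the outer
    diameter, with the gap subtending MAR = 10 ** logmar arcminutes.
    """
    mar_arcmin = 10 ** logmar                   # minimum angle of resolution
    gap_rad = math.radians(mar_arcmin / 60.0)   # arcmin -> degrees -> radians
    gap_m = 2.0 * distance_m * math.tan(gap_rad / 2.0)
    return 5.0 * gap_m                          # outer diameter = 5 gap widths


# A logMAR 0.0 (20/20) C viewed from 6 m
print(f"{landolt_c_size(6.0, 0.0) * 1000:.2f} mm")  # prints "8.73 mm"
```

If one Unity unit is treated as one metre, the returned value would be the plane’s side length for that distance.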

By the end of Friday, I had completed the test’s functionality for the on-screen viewing environment, which essentially reproduces a basic version of the FrACT application. Unlike FrACT, I’m using a light grey C on an all-black background, since in theory this eliminates the issue of lighting the scene.

As it stands now, the test does the following:

- Presents a Landolt C to the user

- Reads a directional input

- Records the actual rotational position of the C

- Records the user’s choice for rotational position of the C

- Rotates and repositions the C for the next trial
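The trial steps above can be sketched as a simple loop. This is not the Unity code, which isn’t shown here. The four orientation names, the `Trial` record, `run_trials` and the random position range are all hypothetical. `get_response` stands in for whatever reads the directional input.

```python
import random
from dataclasses import dataclass
from typing import Callable

# Hypothetical gap orientations, in degrees counter-clockwise from "right".
ORIENTATIONS = {"right": 0, "up": 90, "left": 180, "down": 270}


@dataclass
class Trial:
    actual: str      # where the gap really was
    response: str    # the direction the user chose
    position: tuple  # where the C was placed (hypothetical normalized coords)

    @property
    def correct(self) -> bool:
        return self.actual == self.response


def run_trials(n: int, get_response: Callable[[str, tuple], str],
               rng: random.Random = None) -> list:
    rng = rng or random.Random()
    log = []
    for _ in range(n):
        actual = rng.choice(list(ORIENTATIONS))                 # rotate the C
        position = (rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0))  # reposition it
        response = get_response(actual, position)               # read directional input
        log.append(Trial(actual, response, position))           # record actual and chosen
    return log


# A simulated observer who always answers correctly
trials = run_trials(10, lambda actual, position: actual, random.Random(0))
print(sum(t.correct for t in trials))  # prints 10
```

Storing the actual and chosen orientations together in each record makes it easy to score the run afterwards. It also keeps the raw data available for a later acuity estimate.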

Struggles

I spent a fair amount of time fighting with the low-resolution C texture on the viewing plane. Strange pixels kept appearing around the edge of the C, even after I adjusted the usual suspects (max texture size, filter mode bilinear -> point, shader type). The issue turned out to be the texture image itself. Because it was based on a 5 x 5 grid, its dimensions were not a power of two. Changing the texture’s non-power-of-two setting to ‘None’ in Unity fixed the pixel noise around the C.
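Unity’s texture importer can rescale non-power-of-two images instead of keeping them at their original size, for example rescaling to the nearest power of two. The sketch below shows why that hurts a grid-based C: after rescaling, a grid cell no longer spans a whole number of pixels. The helper names and the 50 x 50 px example size are hypothetical. The tie-breaking in `nearest_power_of_two` is illustrative and is not claimed to match Unity’s exact rounding rule.

```python
def is_power_of_two(n: int) -> bool:
    """True if n is 1, 2, 4, 8, ..."""
    return n > 0 and (n & (n - 1)) == 0


def nearest_power_of_two(n: int) -> int:
    """Nearest power of two to n (ties go to the larger one); illustrative only."""
    if n < 1:
        raise ValueError("size must be a positive integer")
    lower = 1 << (n.bit_length() - 1)  # largest power of two <= n
    if lower == n:
        return n
    upper = lower << 1                 # smallest power of two > n
    return lower if n - lower < upper - n else upper


def pixels_per_cell_after_rescale(image_px: int, cells: int = 5) -> float:
    """Pixels per grid cell if the image were rescaled to the nearest power of two."""
    return nearest_power_of_two(image_px) / cells


# A hypothetical 50 x 50 px texture (10 px per cell) on a 5 x 5 grid
print(is_power_of_two(50))                # prints False
print(pixels_per_cell_after_rescale(50))  # prints 12.8
```

With 12.8 pixels per cell, the C’s edges no longer fall on pixel boundaries, so resampling has to blend pixels along them. That is plausibly where the edge noise came from. Setting the option to ‘None’ keeps the image at its original size, so each cell stays a whole number of pixels wide.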

Aside from some short code debugging, the only other problem I’ve noticed appears at great distances, where the C starts to render oddly on a pixel-by-pixel basis. Without anti-aliasing, something in the rendering pipeline appears to change the dimensions of the C. This will definitely affect test results, because at great distances the C becomes easier to read in some positions than in others. For now I don’t know how to solve this. I’m hoping that the distances actually needed to measure visual acuity, combined with the resolution of the HMDs, will make this a non-issue.
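One plausible explanation for the distance problem is that the gap and stroke of the C shrink below a single pixel. Whether they then rasterize as 0 or 1 pixel wide depends on exactly where they land. The sketch below estimates the gap width in pixels from logMAR and a display’s pixels per degree. The function names and the 15 pixels-per-degree figure are illustrative assumptions, not measurements of any particular monitor or headset.

```python
import math


def gap_pixels(logmar: float, pixels_per_degree: float) -> float:
    """Width of the Landolt C gap in display pixels.

    The gap subtends MAR = 10 ** logmar arcminutes (1/60 degree each).
    """
    mar_degrees = (10 ** logmar) / 60.0
    return mar_degrees * pixels_per_degree


def smallest_logmar_for_gap(pixels_per_degree: float, min_gap_px: float = 1.0) -> float:
    """Smallest logMAR whose gap still spans at least min_gap_px pixels."""
    mar_arcmin = 60.0 * min_gap_px / pixels_per_degree
    return math.log10(mar_arcmin)


ppd = 15.0  # hypothetical display resolution, pixels per degree
print(f"{gap_pixels(0.0, ppd):.2f} px")       # prints "0.25 px"
print(f"{smallest_logmar_for_gap(ppd):.2f}")  # prints "0.60"
```

Under that assumption, a logMAR 0.0 (20/20) gap would cover only a quarter of a pixel. Gaps would not reach a full pixel until about logMAR 0.6, so display resolution rather than the observer could end up limiting the test.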

Next Week

Next week I first plan to get the test working in a virtual environment on a headset. I’m most comfortable with the HTC system, so I’ll probably use that. Once I’ve adjusted the code for input from the Vive controller, I’ll start on the next form of the test.
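Once the Vive controller is wired in, its directional input has to be reduced to one of the C’s orientations. The sketch below assumes the input arrives as a 2D trackpad position with x and y in [-1, 1] and uses four orientations. The function name, the input range and the 0.3 dead zone are assumptions, not details of the SteamVR or Unity input APIs.

```python
import math

DIRECTIONS = ["right", "up", "left", "down"]  # counter-clockwise from +x


def trackpad_to_direction(x: float, y: float, dead_zone: float = 0.3):
    """Map a hypothetical trackpad position to the nearest of four directions.

    Returns None for touches inside the dead zone around the centre.
    """
    if math.hypot(x, y) < dead_zone:
        return None
    angle = math.degrees(math.atan2(y, x)) % 360.0  # 0..360, CCW from right
    index = int(((angle + 45.0) % 360.0) // 90.0)   # 90-degree sector centred on each direction
    return DIRECTIONS[index]


print(trackpad_to_direction(0.0, 0.9))   # prints up
print(trackpad_to_direction(-0.8, 0.2))  # prints left
print(trackpad_to_direction(0.1, 0.1))   # prints None
```

The pad is split into 90-degree sectors centred on each direction, so a slightly off-axis press still maps to the nearest orientation. The dead zone ignores accidental touches near the centre.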

The current test uses a plane to display the C and lets the user move their head. We also wanted to try a version where the Landolt C is ‘pasted’ directly onto the HMD display itself. Because the C then stays fixed relative to the user’s eyes, this eliminates the effect of head motion.
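The difference between the two versions comes down to which frame the C is fixed in. The sketch below is a top-down, yaw-only simplification with hypothetical helper names; real HMD poses also include pitch and roll. It shows that a world-locked C shifts in the head’s frame as the head turns. A C re-placed at a fixed head-frame offset every frame stays put. In Unity, parenting the object to the camera is one common way to get this head-locked behaviour. Whether the ‘pasted’ version will be built that way is an assumption.

```python
import math


def world_to_head(point, head_pos, head_yaw_deg):
    """Express a world-space point (x, z) in head coordinates (right, forward).

    Yaw-only, top-down simplification: at yaw 0 the head faces +z, and positive
    yaw turns it toward +x.
    """
    yaw = math.radians(head_yaw_deg)
    dx, dz = point[0] - head_pos[0], point[1] - head_pos[1]
    right = dx * math.cos(yaw) - dz * math.sin(yaw)
    forward = dx * math.sin(yaw) + dz * math.cos(yaw)
    return (right, forward)


def head_to_world(offset, head_pos, head_yaw_deg):
    """Inverse of world_to_head: place a head-frame offset into world space."""
    yaw = math.radians(head_yaw_deg)
    right, forward = offset
    x = head_pos[0] + right * math.cos(yaw) + forward * math.sin(yaw)
    z = head_pos[1] - right * math.sin(yaw) + forward * math.cos(yaw)
    return (x, z)


head_pos = (0.0, 0.0)
world_locked_c = (0.0, 2.0)  # plane fixed 2 m in front of the starting pose
head_offset = (0.0, 2.0)     # head-locked C: always 2 m straight ahead
start = world_to_head(world_locked_c, head_pos, 0.0)

for yaw in (0.0, 10.0, 30.0):
    world_view = world_to_head(world_locked_c, head_pos, yaw)
    # The head-locked C is re-placed from the current head pose each frame.
    head_view = world_to_head(head_to_world(head_offset, head_pos, yaw), head_pos, yaw)
    print(f"yaw {yaw:4.0f}: world-locked C shifted {math.dist(world_view, start):.3f} m, "
          f"head-locked C shifted {math.dist(head_view, head_offset):.3f} m")
```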