1) Describe your final project.

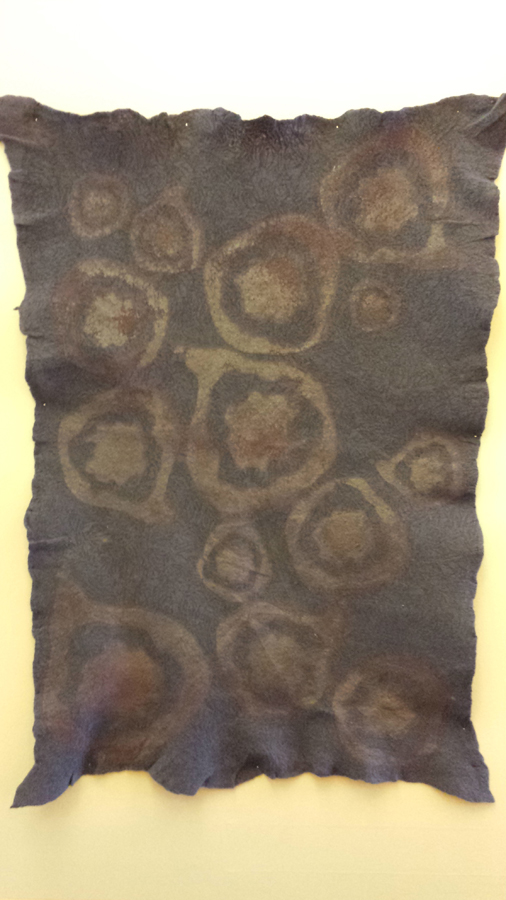

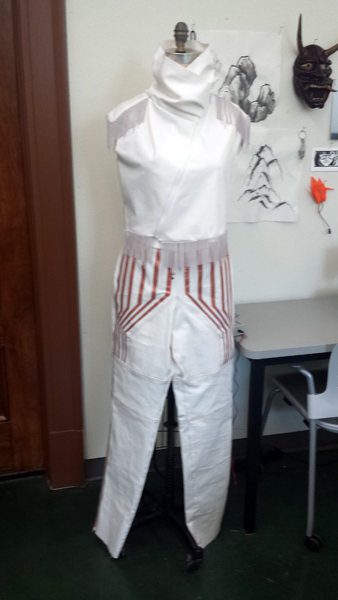

My final project is a white & copper vest and jeans outfit titled “Switch”. (Bonus points if you get the movie reference!) It is incomplete at this point, but the finished piece will have a series of integrated tilt sensors, formed by copper beads on the vest’s fringe trim that make contact with copper taffeta strips appliquéd to the pants. Activating these sensors will trigger LED lights in the vest, so the wearer’s movement creates an organically generated pattern of light.

At this moment, the vest and pants are about 85% complete from a base sewing perspective. I’ve completed a successful wiring test and have some basic code written, which I will modify once all of the wiring is in place.
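To make the sensor-to-LED idea concrete, here is a rough sketch of the logic in Python. This is not my actual project code: it is a simulation that runs on a laptop, and the names (`ContactSwitch`, `step`) and the numbers (three reads to debounce, a fade of 64 per frame) are assumptions picked for illustration. The idea is that a swinging bead makes a jittery contact, so a contact only counts once it has read the same way a few times in a row, and each new contact lights one LED that then fades out.

```python
from dataclasses import dataclass

DEBOUNCE_READS = 3   # assumed: identical reads needed before a change counts
FADE_STEP = 64       # assumed: brightness lost per frame (0-255 scale)


@dataclass
class ContactSwitch:
    """One bead-and-strip contact, debounced in software."""
    stable: bool = False      # last accepted state (True = bead touching strip)
    _candidate: bool = False  # state we are currently counting toward
    _count: int = 0           # consecutive reads of the candidate state

    def update(self, raw: bool) -> bool:
        """Feed one raw reading; return True only when a new contact is accepted."""
        if raw == self.stable:
            self._count = 0
            return False
        if raw == self._candidate:
            self._count += 1
        else:
            self._candidate = raw
            self._count = 1
        if self._count >= DEBOUNCE_READS:
            self.stable = raw
            self._count = 0
            return raw  # True for open-to-closed, False for closed-to-open
        return False


def step(levels, switches, readings):
    """Advance one frame: fade every LED, then light the LED for each new contact."""
    levels = [max(0, v - FADE_STEP) for v in levels]
    for i, (switch, raw) in enumerate(zip(switches, readings)):
        if switch.update(raw):
            levels[i % len(levels)] = 255  # hypothetical mapping: switch i to LED i
    return levels
```

On the real garment, the `readings` list would come from the microcontroller’s input pins and `levels` would be written out to the addressable LED strip; both of those parts depend on the final wiring, so they are left out here.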

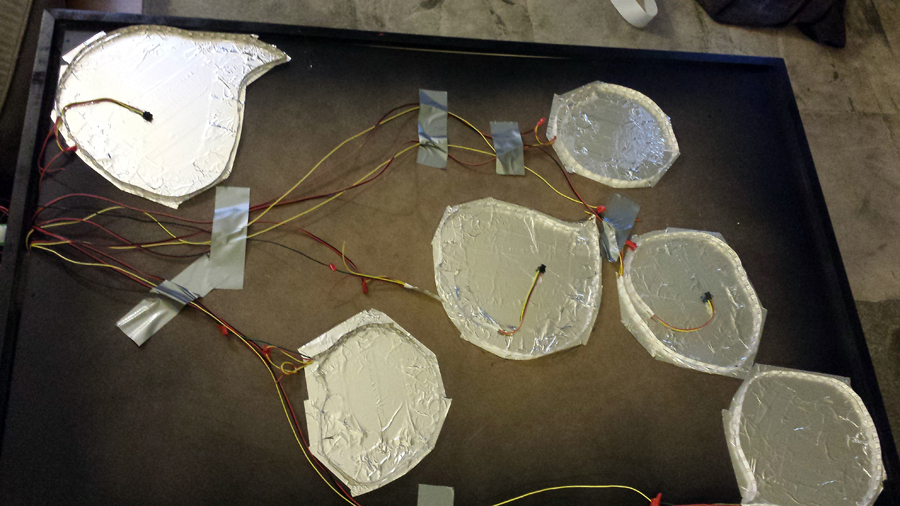

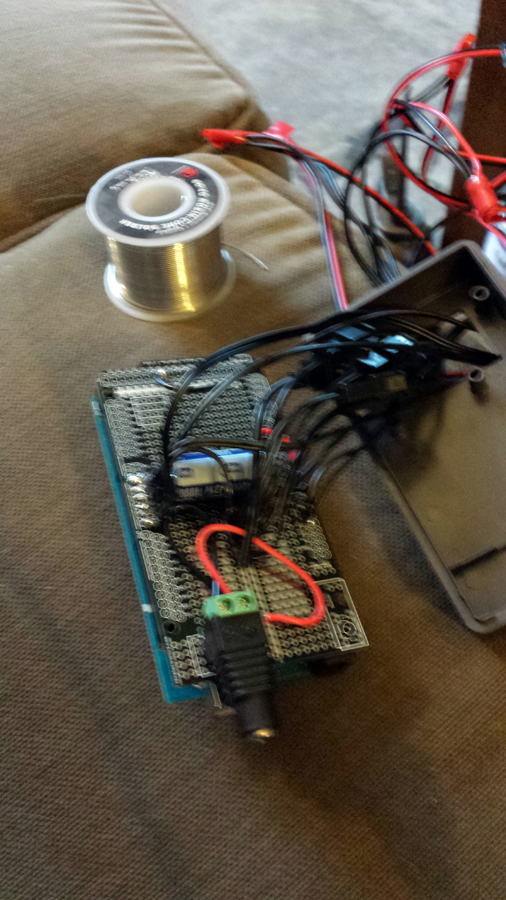

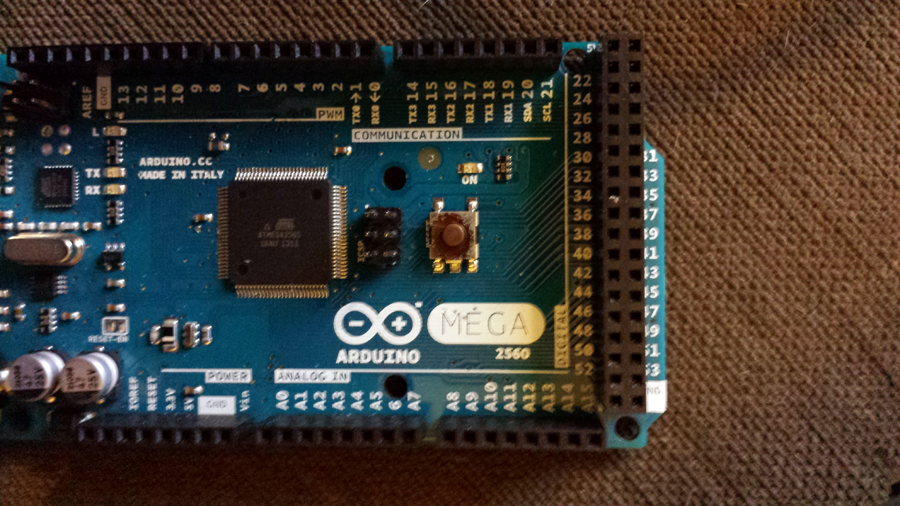

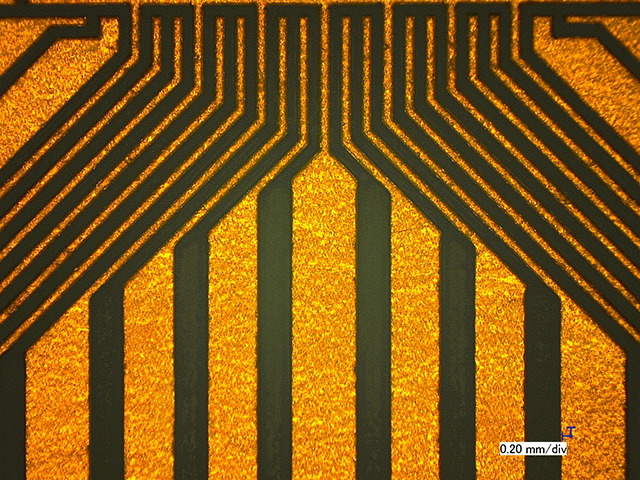

Tilt Switch Test

2) Describe your overall feelings about the project. Are you happy, content, frustrated, etc. with the results?

While I’m disappointed not to be further along on my final piece, I am very excited about its potential. I think it will be a great conversation starter, as well as a foundational jumping-off point for my work going forward.

3) What were some of the largest hurdles you encountered over the semester and how did you approach these challenges?

It seems like everything I attempted this semester ended up being a hurdle, often one that I didn’t clear. The amount of “failure” I experienced in my experimentation was frustrating, but I feel like I’ve learned a huge amount this semester. I learned a lot about the usefulness and limitations of new materials (copper taffeta & addressable LED strips = awesome! muscle wires = disappointing) that I will be able to apply to my work going forward.

The single most important thing I learned this semester is that I need to rethink my entire approach to projects, from a timeline and process perspective. I’m a very fast and capable seamstress, and I plan my project timelines accordingly. Normally, I’m able to produce a large quantity of work in a semester without a lot of trial and error. Adding electronics has changed everything. I now need to build in time for experimentation and, especially, failure. Accepting that things will not always (if ever) work as anticipated the first time through has been a huge mental hurdle for me. Having learned this lesson, I can now plan my work more realistically to ensure a more favorable outcome.

4) If you had more time, what would you do next on your project?

Obviously, with more time I would finish my current project. Completing it is my plan for the summer. Overall, I feel that with more time I could have carried the projects I attempted through to their full potential, rather than being stymied at the last minute by unexpected roadblocks.