-Andrew Chase and Bryce Sprecher

Progress

In the last week, Bryce and I tried constructing 3D models with Agisoft Photoscan to familiarize ourselves with the software. All in all, we were successful, and we found out what works well and what doesn’t. Here are our results:


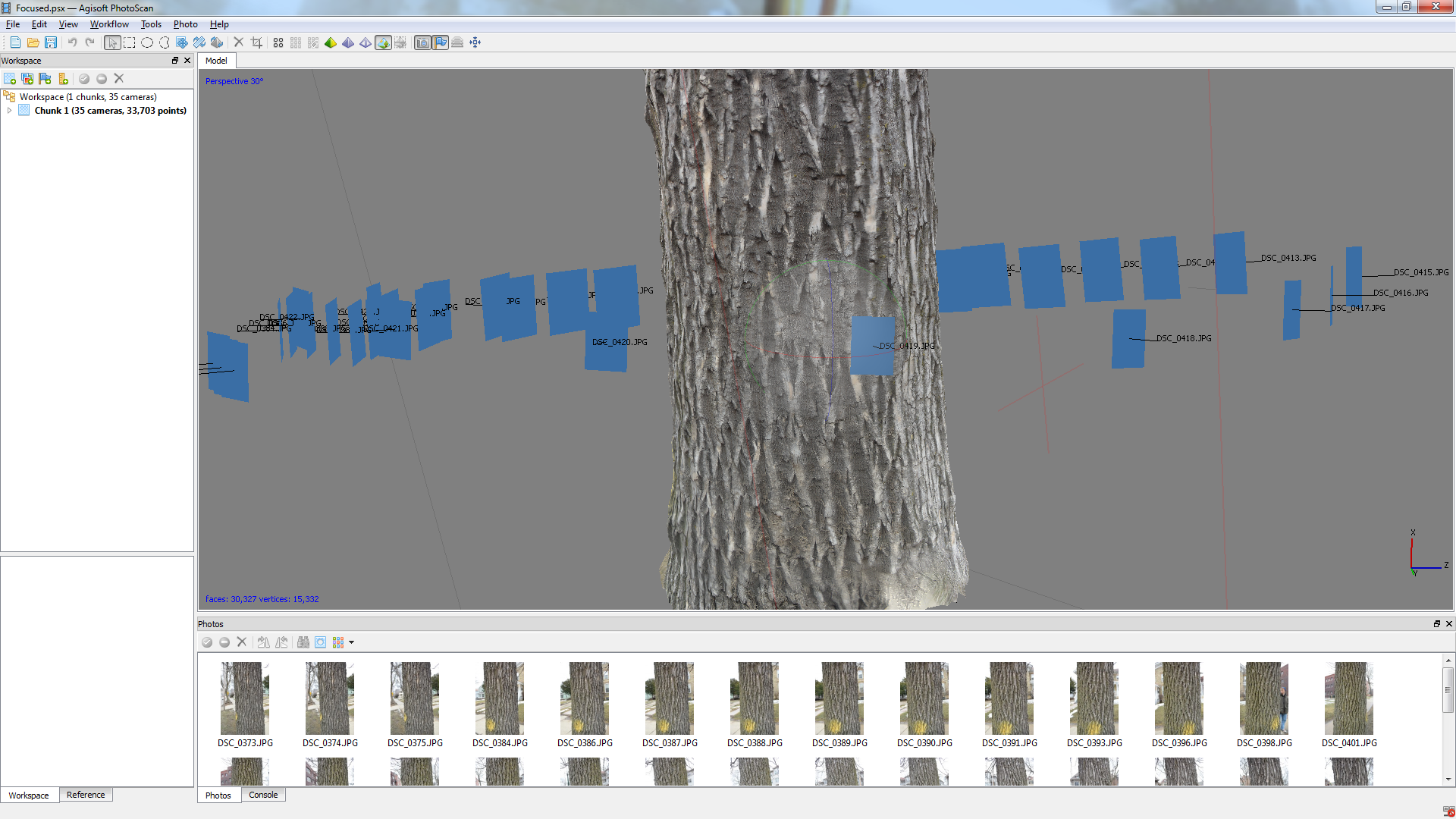

Outdoor lighting, or any environment with a lot of ambient light, works best. The fewer shadows and reflections on the modeling subject, the better.

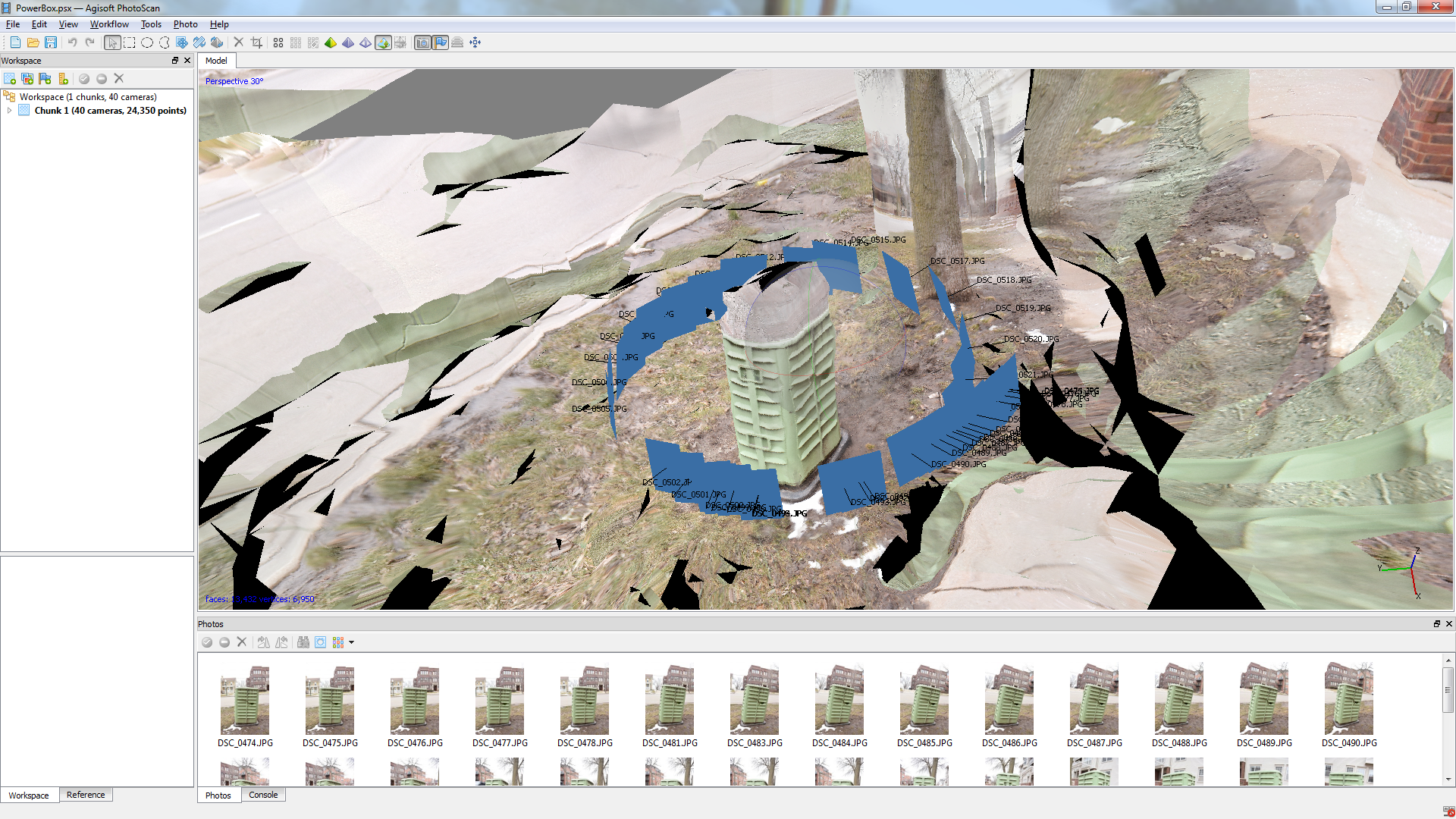

Things can still go wrong, though, as in this case, when we tried to model a simple power box. We rushed this capture to see how rigorous we needed to be in our photo collection, and it still turned out alright. One simple solution would be to mask each image before aligning the photos, so that the background doesn’t interfere with the model.

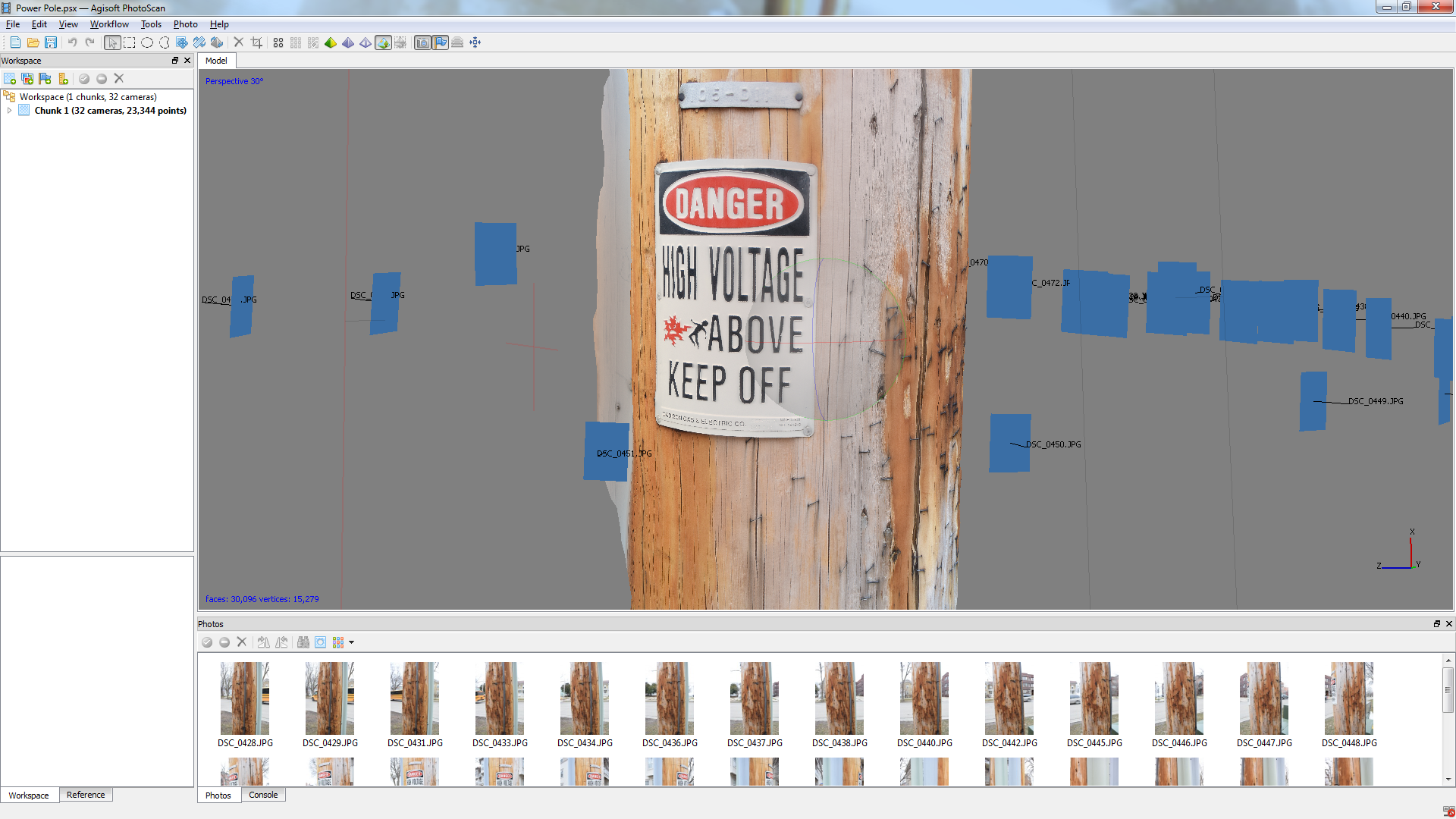

Texturing seems to work well with Photoscan, as seen in this power pole.

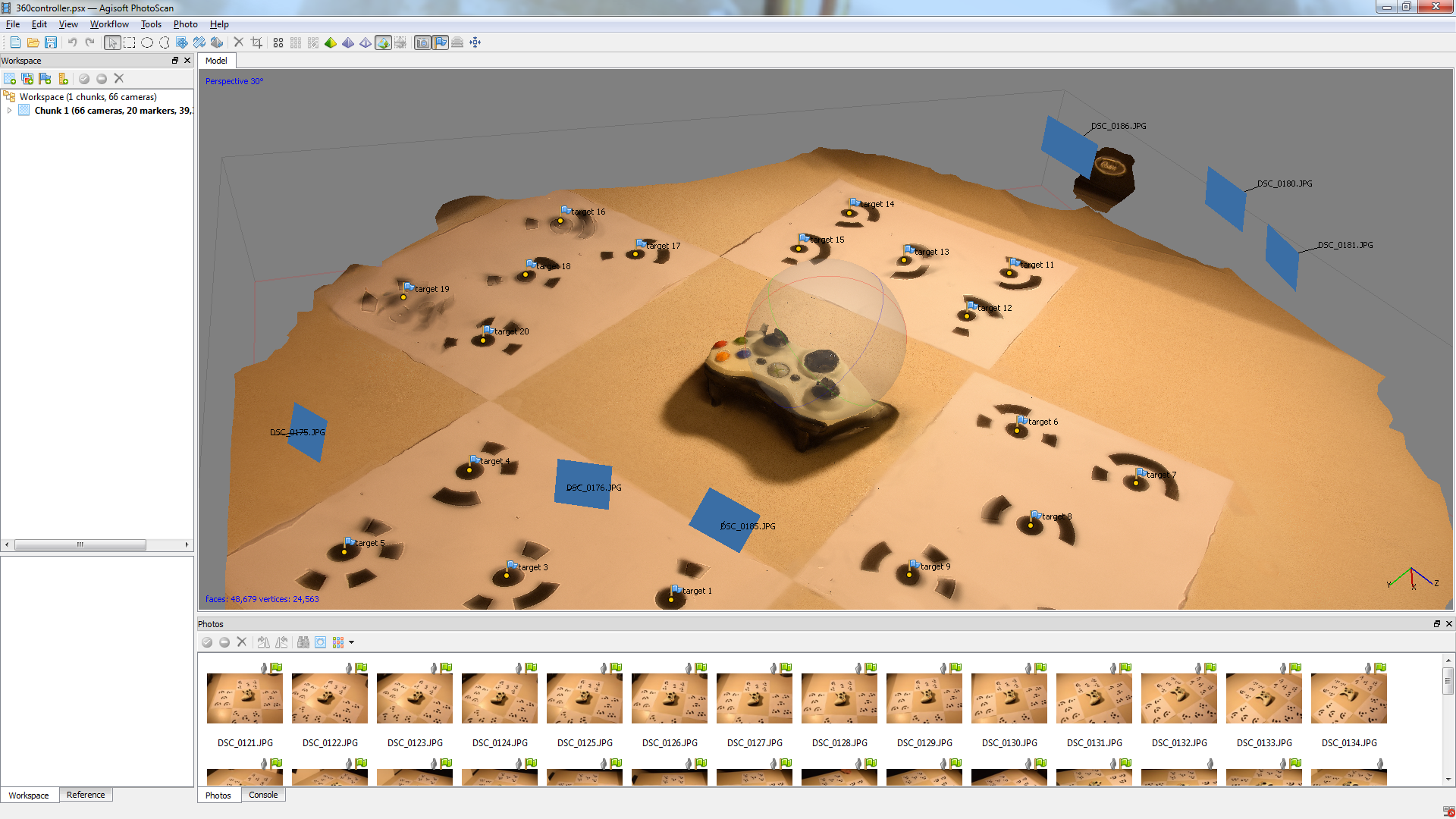

We’ve tried a few indoor models, and found that we need a better lighting setup (light boxes, as opposed to generic LED bulbs/halogen lights, to increase diffusion). We also need to find a way to prop up our samples to allow below-horizon shots of the object, which we didn’t do on the controller example. We also tried a figurine model, but we had issues with it. It was a small object, which leads us to believe that smaller objects require a more sophisticated setup. Bryce is very familiar with the camera (and knows a lot about optimal photography) at this point, and is able to adjust ISO sensitivity, shutter speed, and various other settings to optimize our data collection, so we are unsure why smaller objects are still difficult to model.

Next Steps

Our next goal is to investigate better lighting techniques, and see how we can construct a model with limited camera angles. If we are to model an object over time with only four cameras, for example, we need to see how the model turns out with such limited perspectives. If anything, we may need to create a “one-sided model”, where it is 3D from one side, but has no mesh construction on the other.

In addition, we plan to look into how to synchronize cameras for simultaneous capture. There is software/an app for this camera, and we plan to see what limitations it has. If the software cannot handle such a task, we will then look into how we can use post-processing to align and extract frames from each camera’s perspective, and create models from those.
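
As a rough sketch of what that post-processing fallback might look like, suppose each camera records video and some shared event (a clap or a flash, say) is visible in every recording. If we note the frame where that event appears in each video, we can work out which frame from each camera corresponds to the same moment. Everything here is an assumption for illustration: the function names, the camera labels, the frame rates, and the idea of using a sync event at all. We haven’t tested this against our actual camera.

```python
def synced_frames(event_frames, fps, seconds_after_event):
    """Return, per camera, the frame index closest to a shared moment in time.

    event_frames: dict of camera name -> frame index where the sync event
                  (hypothetical clap/flash) appears in that camera's video.
    fps:          dict of camera name -> frames per second of that video.
    seconds_after_event: how long after the sync event we want to sample.
    """
    return {
        cam: event_frames[cam] + round(seconds_after_event * fps[cam])
        for cam in event_frames
    }


def frame_sets(event_frames, fps, interval, count):
    """Build `count` sets of matching frames, spaced `interval` seconds apart."""
    return [
        synced_frames(event_frames, fps, step * interval)
        for step in range(count)
    ]


# Hypothetical example: two cameras with different frame rates.
event_frames = {"cam_a": 12, "cam_b": 47}
fps = {"cam_a": 30.0, "cam_b": 60.0}

for frames in frame_sets(event_frames, fps, interval=2.0, count=3):
    print(frames)
```

Each dictionary printed is one set of frames that could be extracted and fed into Photoscan as a single “moment” to model. The match is only accurate to within one frame, which may or may not be good enough for a subject that changes quickly.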