For a while I was trying to build visual stimuli in the Rift, but they always seemed off. The sense of depth was wrong, it was difficult to fuse the two images over more than one small region at a time, and there were lots of candidate explanations:

- a miscalculation in the virtual eye position, resulting in the wrong binocular disparity

- a miscalibration of the physical Rift (the lens depth is deliberately adjustable, and there are multiple choices for lens cups; our DK1 also had a bad habit of letting the screen pop out of place up to maybe an inch on the right side)

- lack of multisampling causing a lack of sub-pixel information, which may be of particular importance considering the DK1’s low resolution

- incorrect chromatic aberration correction causing visual acuity to suffer away from image and lens center (which could have been separate, competing problems in the case of miscalibration)

- something wrong in the distortion shader, causing subtle, stereo-cue-destroying misalignments

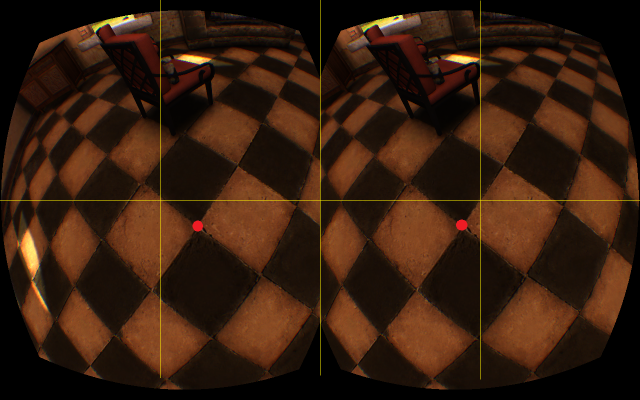

Here’s two images I used to test. In both images, I tried to center my view on on a single “corner” point of a grid pattern, marked with a red dot; image center is marked with yellow axes.

First, from the official OculusWorldDemo:

So, that’s roughly where we want our eye-center red dots to be, relative to image center.

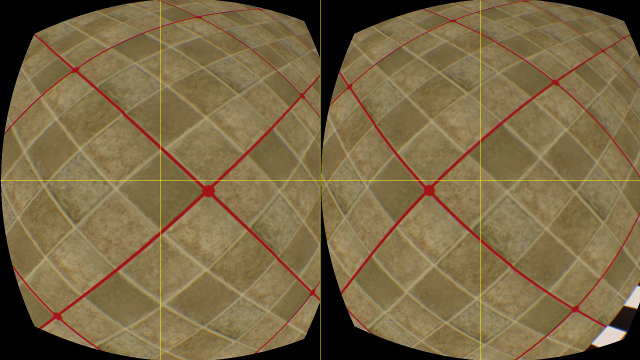

And from our code:

This matches what I was experiencing with the undistorted images in the Rift: things were in almost the right places, but the differences between the two images seemed exaggerated in ways that weren’t immediately coherent.

The key difference is in the shape of each eye’s image. In the image from my code, the shape of the right eye’s image is “flipped” relative to the official demo’s; or rather, our code hadn’t flipped the right eye’s coordinate system relative to the left’s.

This is most apparent at the hard right edge. I’d previously written this off as a mismatch in screen resolution between the PC and the Rift causing the image to be cut off, but no: it turns out that deep within OculusRoomTiny (the blueprint for our Rift integration), the center offset for the right eye was being inverted before being passed to the shader. This happened well away from the rest of the rendering code, so it was easy to miss.
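To make the sign flip concrete, here is a minimal sketch of the idea rather than the actual OculusRoomTiny code. The names `Eye`, `Viewport`, `lensCenterX`, and `lensCenterXUnmirrored` are hypothetical, and the convention that the offset is given in [-1, 1] viewport units for the left eye is an assumption made for illustration.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical types for illustration; these are not the Oculus SDK's own.
enum class Eye { Left, Right };

// One eye's viewport, as a fraction of the full framebuffer width (0..1).
struct Viewport {
    float x;  // left edge
    float w;  // width
};

// Horizontal lens (distortion) center in framebuffer coordinates.
// xCenterOffset is the lens offset from the viewport center in [-1, 1]
// viewport units, defined for the LEFT eye (assumed convention).
float lensCenterX(const Viewport& vp, Eye eye, float xCenterOffset) {
    assert(vp.w > 0.0f);
    assert(std::fabs(xCenterOffset) <= 1.0f);
    // The eyes are mirror images, so the right eye uses the negated offset.
    const float signedOffset =
        (eye == Eye::Left) ? xCenterOffset : -xCenterOffset;
    // Map [-1, 1] across the viewport onto [vp.x, vp.x + vp.w].
    return vp.x + 0.5f * vp.w * (1.0f + signedOffset);
}

// The mistake described above: the same offset applied to both eyes.
float lensCenterXUnmirrored(const Viewport& vp, float xCenterOffset) {
    return vp.x + 0.5f * vp.w * (1.0f + xCenterOffset);
}
```

With a side-by-side layout (left viewport `{0.0f, 0.5f}`, right viewport `{0.5f, 0.5f}`) and an illustrative offset of 0.15, the mirrored version puts the lens centers at 0.2875 and 0.7125, symmetric about the middle of the screen. The unmirrored version puts the right eye's center at 0.7875, pushed toward the outer edge instead of toward the nose.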

I changed our code to match, and the difference was striking. Full stereo fusion came naturally, and general visual acuity and awareness of the scene were improved. So was awareness of a whole host of other flaws: the lack of multisampling and the screen door effect were much more apparent, tracking errors more annoying, and errors in recreations of realistic scenes far more noticeable. It’s interesting how thoroughly the distortion misalignment had dominated those other visual artifacts.

Related — the misaligned distortion may have been causing vertical disparity, which I hear is the main problem when using toe-in to create stereo pairs. Vertical disparity is accused of decreasing visual acuity; perhaps this takes the form of inattention, rather than blurriness, which would explain our suddenly becoming more attentive to other artifacts when the distortion shader was fixed. Maybe more in a future post.