This week was spent on getting point splatting to work in our OOCViewer.

Right now, normals are calculated for each voxel independently. This can be done either at runtime or in a pre-processing step. In the runtime case, normals are cached on disk after calculation so they can be reused. This also makes ‘mixed’ scenes possible, in which some voxels have normals and others don’t:
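A minimal sketch of the compute-or-load logic, written in Python for brevity. It assumes a hypothetical layout: the cache sits next to the `.pcd` file with a `.normals` suffix and holds one uncompressed record of four 32-bit floats (normal plus splat radius) per point. `cache_path`, `has_normals`, `load_or_compute_normals` and the `compute` callback are illustrative names, not actual OOCViewer code.

```python
import struct
from pathlib import Path
from typing import Callable, List, Tuple

# Hypothetical record layout: (nx, ny, nz, radius) per point.
Record = Tuple[float, float, float, float]
# Four little-endian 32-bit floats per point, stored uncompressed.
_RECORD = struct.Struct("<4f")


def cache_path(pcd_path: Path) -> Path:
    """Hypothetical naming scheme: 'voxel_0.pcd' -> 'voxel_0.pcd.normals'."""
    return pcd_path.with_name(pcd_path.name + ".normals")


def has_normals(pcd_path: Path) -> bool:
    """True if this voxel already has cached normal data on disk."""
    return cache_path(pcd_path).exists()


def load_or_compute_normals(
    pcd_path: Path, compute: Callable[[Path], List[Record]]
) -> List[Record]:
    """Load a voxel's normals from its cache, or compute and cache them on a miss."""
    cache = cache_path(pcd_path)
    if cache.exists():
        # Cache hit: decode the fixed-size records written earlier.
        return list(_RECORD.iter_unpack(cache.read_bytes()))
    # Cache miss: run the expensive estimation once, then persist the result.
    records = compute(pcd_path)
    cache.write_bytes(b"".join(_RECORD.pack(*r) for r in records))
    return records
```

A check like `has_normals` is what would make mixed scenes cheap: a renderer could draw a voxel as splats when its cache exists and fall back to plain points otherwise.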

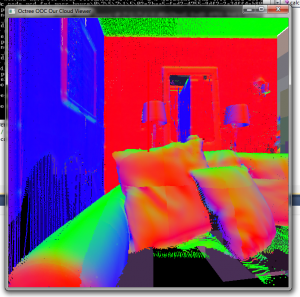

Online calculation of normals. Some voxels have normal data, others don’t. The voxels in the background on the right have not been loaded yet.

Calculation time depends mostly on the number of points in a voxel. PCL’s `estimateNormals` method turned out to be faster than the naive normal estimation approach (especially the multi-threaded OMP variant), so we used it. In a second pass, a k-nearest-neighbour search is performed for each point in the point cloud, and the average distance to these neighbours is used as the starting radius for the splat.
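The second pass can be sketched as follows. This is a brute-force O(n²) version for clarity, assuming points are plain `(x, y, z)` tuples and `k` is a free parameter; normal estimation itself is left to PCL, and `initial_splat_radii` is an illustrative name.

```python
import heapq
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def initial_splat_radii(points: Sequence[Point], k: int) -> List[float]:
    """Mean distance from each point to its k nearest neighbours (itself excluded).

    Brute force, O(n^2): fine for illustration, far too slow for real voxels.
    """
    if k < 1 or len(points) <= k:
        raise ValueError("need 1 <= k < number of points")
    radii = []
    for i, p in enumerate(points):
        # Distances to every other point; nsmallest keeps only the k closest.
        nearest = heapq.nsmallest(
            k, (math.dist(p, q) for j, q in enumerate(points) if j != i)
        )
        radii.append(sum(nearest) / k)
    return radii
```

In practice the neighbour query would go through a k-d tree such as PCL’s `pcl::KdTreeFLANN::nearestKSearch`. Two details differ from the sketch: that call returns squared distances, so they need a square root before averaging, and querying with a point from the cloud itself typically returns that point at distance 0, so one would ask for `k + 1` neighbours and drop the first.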

The drawback is increased storage size: on average, each .pcd file now has an accompanying normal cache file that is 1.5 times its size. Normal data is currently not compressed. Another option would be to store normal and radius data as 4 signed chars (32 bits total) and normalize the values in the shaders.
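The 4-byte option could look roughly like this: each component is clamped to [-1, 1], quantized to a signed byte in [-127, 127], and decoded with `max(q / 127, -1)`, the usual signed-normalized convention. Because the radius is not naturally in that range, this sketch assumes it is first divided by a hypothetical per-voxel `max_radius`. `pack_normal_radius` and `unpack_normal_radius` are illustrative names.

```python
import struct
from typing import Tuple


def _to_snorm8(v: float) -> int:
    """Clamp v to [-1, 1] and quantize it to a signed byte in [-127, 127]."""
    return round(max(-1.0, min(1.0, v)) * 127)


def _from_snorm8(q: int) -> float:
    """Signed-normalized decode: map a byte back to [-1, 1]."""
    return max(q / 127.0, -1.0)


def pack_normal_radius(
    nx: float, ny: float, nz: float, radius: float, max_radius: float
) -> bytes:
    """Pack a unit normal and a splat radius into 4 signed chars (32 bits).

    Assumption: the radius is divided by a hypothetical per-voxel max_radius
    so that it lands in [0, 1] like the normal components.
    """
    components = (nx, ny, nz, radius / max_radius)
    return struct.pack("<4b", *(_to_snorm8(c) for c in components))


def unpack_normal_radius(
    data: bytes, max_radius: float
) -> Tuple[float, float, float, float]:
    """Inverse of pack_normal_radius, up to quantization error."""
    qx, qy, qz, qr = struct.unpack("<4b", data)
    return (
        _from_snorm8(qx),
        _from_snorm8(qy),
        _from_snorm8(qz),
        _from_snorm8(qr) * max_radius,
    )
```

Four bytes per point instead of sixteen for four 32-bit floats would cut the cache to a quarter of an uncompressed float layout. Assuming an OpenGL renderer, the bytes could be uploaded as a normalized `GL_BYTE` vertex attribute so the division happens on the GPU; the decoded normal should be renormalized in the shader, since quantization changes its length slightly.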

Pre-calculation time is fairly high because there are many small files, and a lot of time is spent opening and closing them. On the other hand, this only has to be done once.

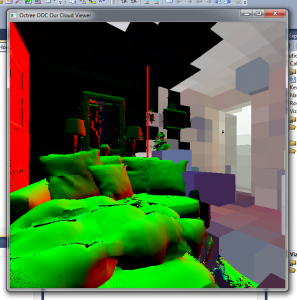

There are some sampling problems with the normals like in this image:

As a side note: merging two branches is harder than it should be. Maybe we could organize the git branches a bit better?