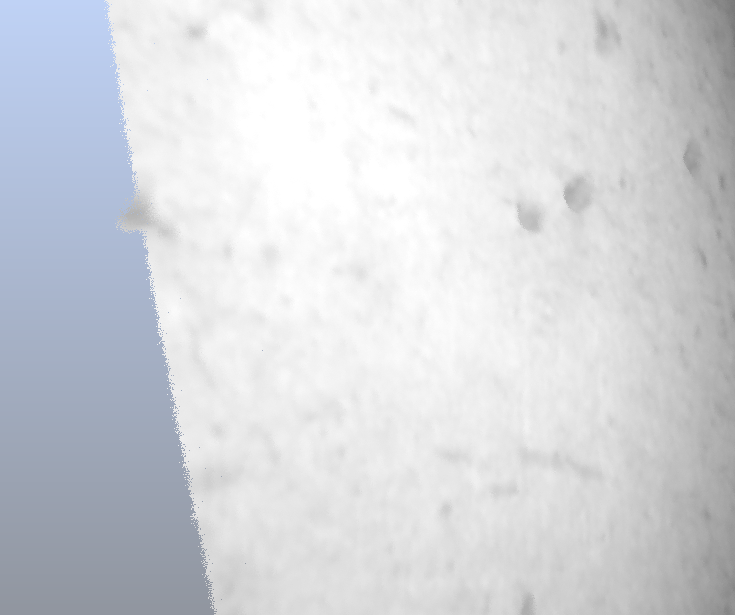

I scanned the concrete pillar in my office to see whether we get resolution high enough to investigate small details: in this case the air bubbles and inclusions in the concrete, and in the proposed project bullet holes and dents.

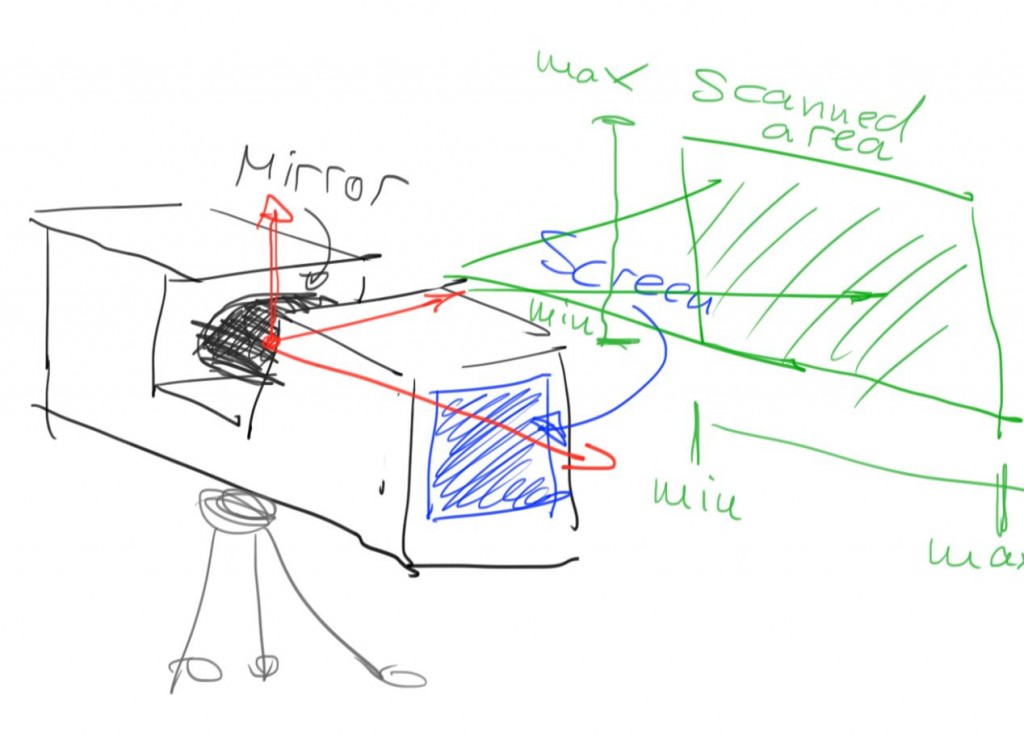

The distance between scanner and surface was about 1 foot, and only a single scan was taken. In the scanner software I selected a small scan window. Unfortunately, the scanner does not say which coordinate system the window is based on, so I had to eyeball it. For future reference, this is how I think it is laid out:

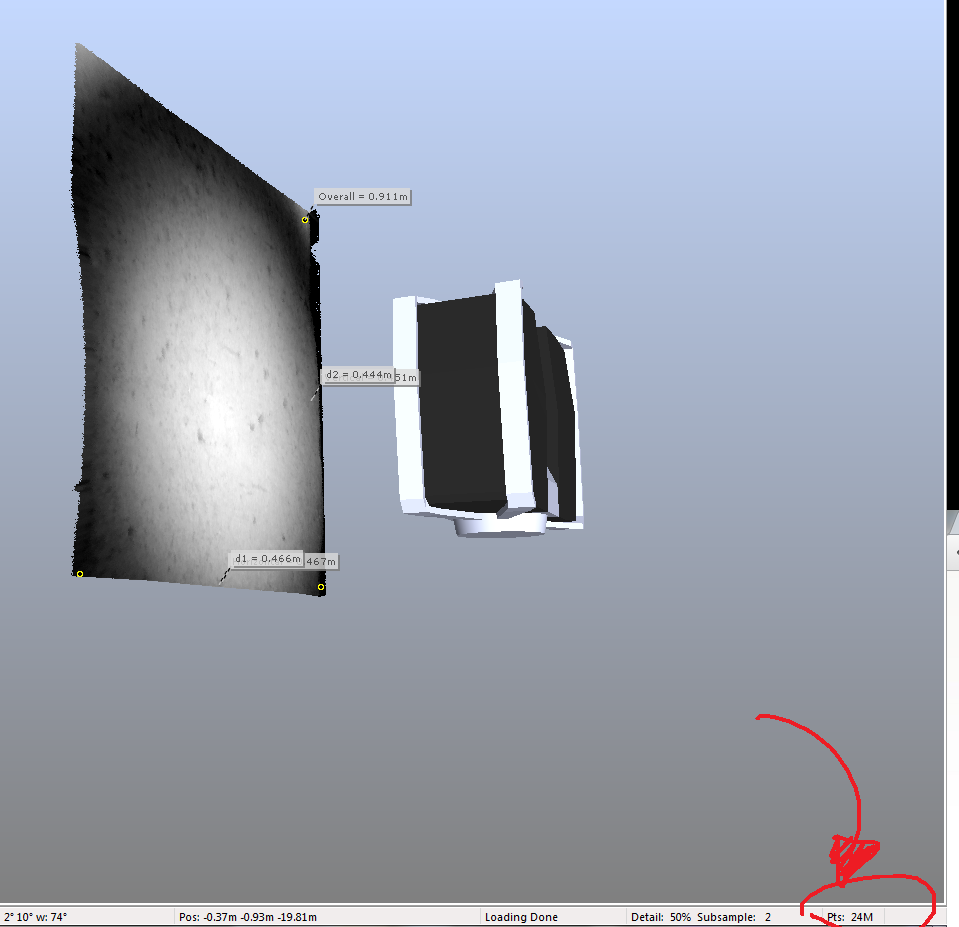

The scan was set to the highest resolution and quality settings (4x supersampling?) and took about an hour, which is really only practical for an area this small. Imported into SCENE, it shows up as an area of about one square foot containing about 24 million points:

The scan dataset is about 700 MB in size. Taking the scanned area as roughly 400 mm × 400 mm, we get a theoretical resolution of 24M / (400 mm × 400 mm) = 150 pts/mm². That seems rather high. Note that with multiple scans and interleaved scan points this number would increase further.
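As a quick sanity check on that figure, here is a minimal sketch of the arithmetic. The function names are my own, and the mean point spacing assumes the points are spread evenly over the patch, which a real scan will only approximate.

```python
import math

def point_density(num_points, width_mm, height_mm):
    """Points per square millimetre over a rectangular patch."""
    return num_points / (width_mm * height_mm)

def mean_spacing_mm(density_per_mm2):
    """Average distance between neighbouring points,
    assuming (hypothetically) an even, grid-like distribution."""
    return 1.0 / math.sqrt(density_per_mm2)

# Numbers from the scan above: about 24 million points on about 400 mm x 400 mm
density = point_density(24_000_000, 400, 400)
spacing = mean_spacing_mm(density)

print(f"{density:.0f} pts/mm^2, mean spacing about {spacing:.3f} mm")
```

If the patch is closer to a true square foot (304.8 mm a side), the same function gives roughly 258 pts/mm², so the estimate is quite sensitive to how the area is eyeballed.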

On a similar note, SCENE breaks down at these small scales (I think it is designed more for surveying) and has problems displaying the data, even after setting the near plane to 0.01. Camera movement is also far too coarse to be useful at these scales. This is as close as we can get:

The next step is exporting this data and displaying it with our software. It may be worth starting a small PCL close-up viewer, as the current octree generation works with voxel sizes between 0.25 m and 0.3 m and would therefore put all points in a single voxel. An alternative would be to scale up the scan data by multiplying the coordinates of all points by a fixed factor, e.g. 100. That would produce an octree hierarchy, and we could use our out-of-core viewer for this data as well (plus all the other VR displays).
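To illustrate the scaling idea, here is a rough sketch in plain Python. It is not our octree code: the helper names are hypothetical, the two points stand in for the corners of a roughly one-foot patch rather than real scan data, and I am assuming the octree keeps halving the bounding cube until voxels are no larger than the 0.3 m leaf size.

```python
import math

def scale_points(points, factor):
    """Multiply every coordinate of every point by a fixed factor."""
    return [(x * factor, y * factor, z * factor) for x, y, z in points]

def max_extent(points):
    """Longest side of the axis-aligned bounding box."""
    xs, ys, zs = zip(*points)
    return max(max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))

def octree_levels(extent, leaf_size):
    """Number of halvings needed until voxels are no larger than leaf_size
    (assumed subdivision rule, not taken from our generator)."""
    if extent <= leaf_size:
        return 0  # everything fits in a single voxel
    return math.ceil(math.log2(extent / leaf_size))

# Hypothetical corner points of a 0.3 m patch, in metres
patch = [(0.0, 0.0, 0.0), (0.3, 0.3, 0.01)]
scaled = scale_points(patch, 100.0)

levels_before = octree_levels(max_extent(patch), 0.3)
levels_after = octree_levels(max_extent(scaled), 0.3)
print(levels_before, levels_after)
```

Under these assumptions the unscaled patch gets no subdivision at all, while the scaled copy gets several levels. Any measurement taken in the viewer would then have to be divided by the same factor to get back to real-world units.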