Oculus Rift DK2 and SDK 0.4.2 bring some exciting improvements, and some small problems.

The SDK has switched to a new C API, which probably means restructuring our existing Rift code.

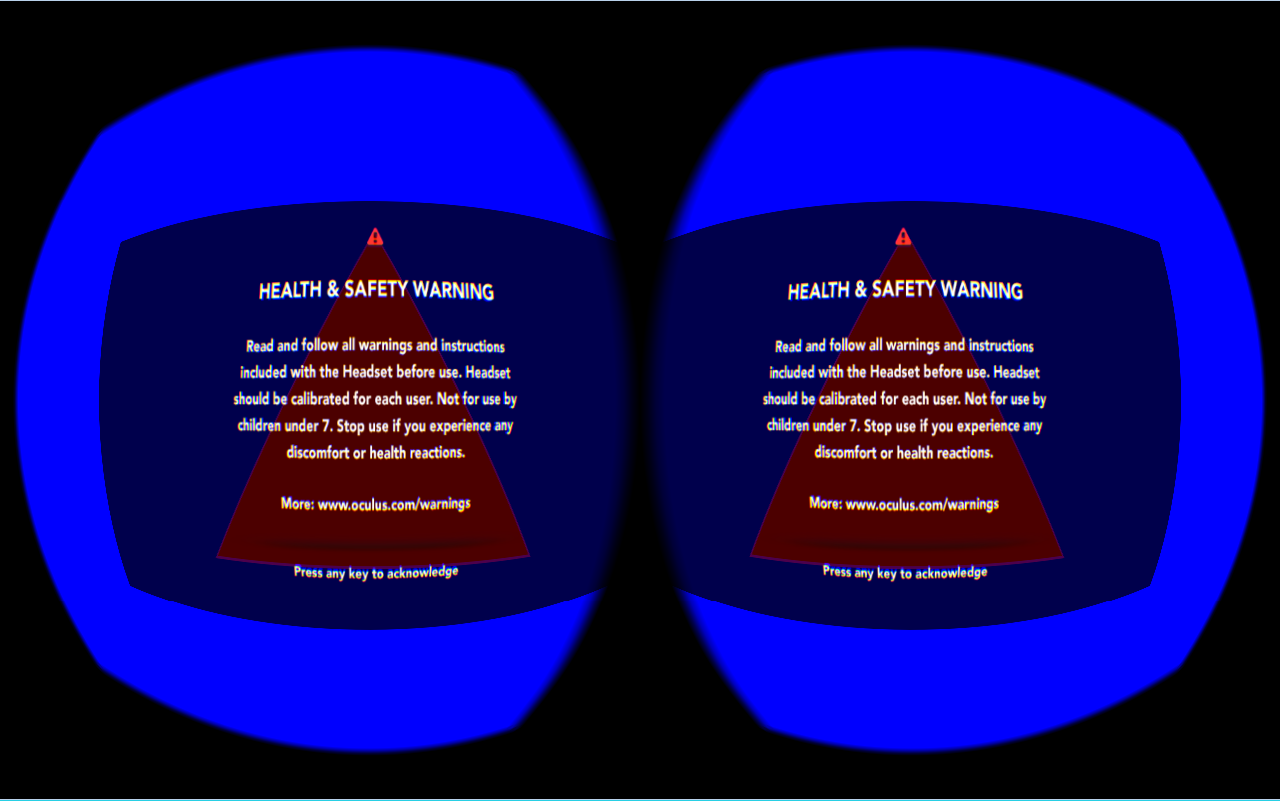

It also shows this at the start of every app:

… which introduces roughly a 4-second delay to the start of any application. That’s a problem for a developer, who might run their app dozens or hundreds of times in a few hours. It’s only an issue when using libOVR’s internal rendering engine (though I assume Oculus frowns on distributing software without something similar).

It looks like there’s some intention to allow it to be disabled at runtime, via:

ovrHmd_EnableHSWDisplaySDKRender( hmd, false );

That would be a good candidate to trigger from a _DEBUG preprocessor define or something similar . . . but the function isn’t currently exposed.
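If that call were exposed, gating it on a debug build might look roughly like the sketch below. Note that `FakeHmd` and `setHswDisplayEnabled` are hypothetical stand-ins invented for illustration (the real `ovrHmd_EnableHSWDisplaySDKRender` isn't reachable from application code), so this only shows the preprocessor pattern, not a working LibOVR call.

```cpp
// Placeholder for the SDK's HMD handle; NOT a real LibOVR type.
struct FakeHmd {
    bool hswDisplayEnabled = true;  // assumption: the warning is on by default
};

// Hypothetical stand-in for the unexposed ovrHmd_EnableHSWDisplaySDKRender().
void setHswDisplayEnabled(FakeHmd& hmd, bool enabled) {
    hmd.hswDisplayEnabled = enabled;
}

// Suppress the warning only when _DEBUG is defined (MSVC defines it when
// building against the debug runtime); every other build keeps the warning.
void configureHswDisplay(FakeHmd& hmd) {
#if defined(_DEBUG)
    setHswDisplayEnabled(hmd, false);
#else
    setHswDisplayEnabled(hmd, true);
#endif
}
```

Calling `configureHswDisplay(hmd)` once after creating the HMD would keep the warning in release builds while skipping it during day-to-day debugging.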

The next easiest way seems to be toggling a #define at line 64 of /CAPI/CAPI_HSWDisplay.cpp:

    #if !defined(HSWDISPLAY_DEFAULT_ENABLED)
        #define HSWDISPLAY_DEFAULT_ENABLED 0 // this used to be a 1
    #endif

… then recompiling LibOVR. That isn’t so bad: VS project files are provided, and they worked with only minor tinkering (I had to add the DirectX SDK executables directory to the “VC++ Directories” section of the project properties).
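Because the define sits inside an `#if !defined(...)` guard, it should also be possible to leave the source file alone and set the macro from the build instead. That still means recompiling LibOVR, and it's an untested assumption for the LibOVR project files specifically. The standalone sketch below just demonstrates how such a guard behaves; `hswEnabledByDefault` is an illustrative helper, not part of the SDK.

```cpp
// Same guard shape as the one quoted from CAPI_HSWDisplay.cpp: the default
// only applies when the build has not already defined the macro.
#if !defined(HSWDISPLAY_DEFAULT_ENABLED)
    #define HSWDISPLAY_DEFAULT_ENABLED 1
#endif

// Illustrative helper (not part of LibOVR): reports the compiled-in default.
constexpr bool hswEnabledByDefault() {
    return HSWDISPLAY_DEFAULT_ENABLED != 0;
}
```

Built as-is, the helper returns true. Built with `/DHSWDISPLAY_DEFAULT_ENABLED=0` (MSVC) or `-DHSWDISPLAY_DEFAULT_ENABLED=0` (GCC/Clang), the guard is skipped and it returns false. In Visual Studio the same definition can go under C/C++ > Preprocessor > Preprocessor Definitions in the project properties.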

Recompiling the SDK works for what we’re doing, and it’s not hard, and we might need to modify libOVR eventually anyway . . . but it’s still a little silly.

Now that libOVR is building alongside some sample code, there’s some spelunking to do through the OculusWorldDemo to find how the new API suggests we modify eye parameters. When I dug deeper into the new C API, it looked like there may be other new (C++?) classes inside . . . and that’s probably deeper than we’re meant to access. For now I’m investigating some shallower functions skimmed from OculusWorldDemo.

(Note that this was actually tested with 0.4.1; I still have to install the DirectX SDK on this other machine before testing against 0.4.2. I’ll probably remove this note once that’s done.)