I have always been fascinated by computational design and generative art. The strange realization that these lines, curves, and shapes are drawing themselves, and that they act intelligently based on a set of rules and on interactive events with the user, made me feel a little uneasy. Mostly, that is because as an architectural designer I was pretty much only taught to point, click, and draw, and I couldn't help but feel a little inadequate about the way I do things. Needless to say, designers who are used to creating with their hands and minds have their own processes and techniques, and the end results can be just as beautiful as any sophisticated algorithm-based composition. But I still felt jealous. I wanted to try coding, and I wanted my code to do things that are simply too difficult for me to do by hand.

This semester I will be learning Processing, a programming language designed for media design (images, animation, sound, data visualization, visual compositions, etc.). It can create stunning interactive visual compositions that rival Picasso in their abstractness and randomness. But the real deal is that it lets designers in all fields implement algorithms to generate art in a straightforward scripting environment. Because it is built on top of Java, a language many programmers learn first in school, I wanted to use this tool as a foundation for learning other high-level programming languages later, like Python, JavaScript, and C#, which can help you do amazing things in 3D modeling software.

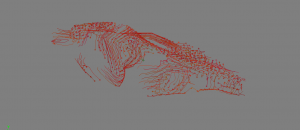

But learning Processing is just one part of it. I needed to fulfill a more specific goal. How can I use Processing to help me design architecture? Also, how can I make my project fit within the context of the Living Environment Lab? Well, programming languages are great for simulations. After all, almost all phenomena can be described in terms of a set of rules, and rules can be coded. For example, physics engines for games simulate physical interaction between objects based on a set of rules. Could I simulate an environment and a group of entities interacting with it according to guidelines? For instance: this thing will move away as soon as it comes within 50 pixels of another entity, or the group of entities spends most of its time in the center, or to the right, and so forth. The way we interact with our living environments really does follow a set of rules. What if I could create a floor plan whose form is shaped by a simulation of the interaction between the function of the space and the users living in it? That's how I came across the idea of simulating with 'autonomous agents', independent entities that behave according to rules in the code, and their application in the design of architectural form and space. My goal this semester is to use Processing to create a visual composition in which a number of agents and their rule-based interactions generate form.
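To make the "move away within 50 pixels" rule a bit more concrete, here is a minimal sketch of how it might look in code. It is plain Java rather than an actual Processing sketch, so it only updates positions and draws nothing. The names `SeparationSketch`, `Agent`, `distance`, and `step`, as well as the speed of 2 pixels per step, are my own assumptions for illustration and not anything Processing provides.

```java
// Hypothetical illustration of one agent rule; not part of Processing itself.
public class SeparationSketch {

    // An agent is reduced to a position in pixel coordinates.
    static class Agent {
        double x, y;
        Agent(double x, double y) { this.x = x; this.y = y; }
    }

    // Straight-line distance between two agents, in pixels.
    static double distance(Agent a, Agent b) {
        return Math.hypot(a.x - b.x, a.y - b.y);
    }

    // One simulation step: every agent that has a neighbor closer than
    // `radius` moves `speed` pixels directly away from those neighbors.
    static void step(Agent[] agents, double radius, double speed) {
        int n = agents.length;
        double[] dx = new double[n];
        double[] dy = new double[n];

        // First pass: add up the "away from neighbor" directions per agent,
        // without moving anyone yet, so all agents see the same positions.
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < n; j++) {
                if (i == j) continue;
                double d = distance(agents[i], agents[j]);
                if (d > 0 && d < radius) {
                    dx[i] += (agents[i].x - agents[j].x) / d;
                    dy[i] += (agents[i].y - agents[j].y) / d;
                }
            }
        }

        // Second pass: move each crowded agent along its summed direction.
        for (int i = 0; i < n; i++) {
            double len = Math.hypot(dx[i], dy[i]);
            if (len > 0) {
                agents[i].x += speed * dx[i] / len;
                agents[i].y += speed * dy[i] / len;
            }
        }
    }

    public static void main(String[] args) {
        // Two agents start 20 pixels apart; a third is far from both.
        Agent[] agents = {
            new Agent(100, 100), new Agent(120, 100), new Agent(400, 300)
        };
        for (int frame = 0; frame < 20; frame++) {
            step(agents, 50, 2);
        }
        for (Agent a : agents) {
            System.out.println(a.x + ", " + a.y);
        }
    }
}
```

In a real Processing sketch, something like `step` would presumably be called once per frame, with each agent drawn at its new position afterwards. Other rules, such as a pull toward the center of the canvas, could be added to the same per-agent direction before the agents move.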

More on autonomous agents and agent-based modeling will be covered throughout the semester.