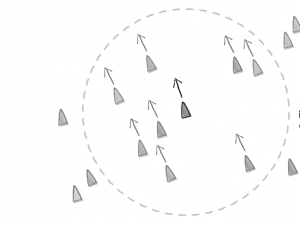

For the second composition, I had to do quite a lot of thinking. Rather than having agents respond to steering forces from other agents, I wanted to give them the ability to react to their previous positions, or the trail they were leaving behind. If there’s anything that becomes obvious with the regular flocking algorithms, it is that in most cases the movement is very erratic. This happens because steering vectors act on a given agent as long as their source is within a predetermined radius, whatever the direction. This is equivalent to saying that an agent has eyes everywhere.

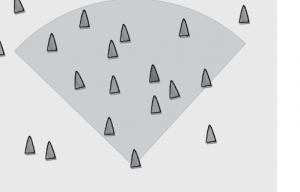

Rather than using this paradigm, it is convenient to add an angle of vision to the agent’s behavioral methods:

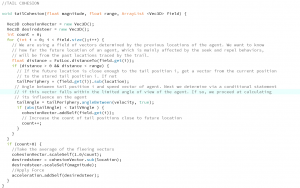

But before we get to how this was done in the behavioral methods, there is an important difference between this algorithm and the previous one: the tail drives the movement of the agent. This is done by first extrapolating a future location vector based on the agent’s current velocity.
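As a rough illustration of that extrapolation step, here is a minimal Python sketch. It is not the code from the original sketch: the function name `future_location`, the 2D tuples, and the `look_ahead` distance are all assumptions made for the example.

```python
def future_location(position, velocity, look_ahead=25.0):
    """Project the agent's position forward along its current heading.

    `look_ahead` is a hypothetical tuning parameter: how far ahead,
    in canvas units, the agent predicts its own position.
    """
    vx, vy = velocity
    speed = (vx * vx + vy * vy) ** 0.5
    if speed == 0.0:
        return position  # no heading to extrapolate along
    # Scale the unit heading by the look-ahead distance and add it to the position.
    return (position[0] + vx / speed * look_ahead,
            position[1] + vy / speed * look_ahead)


predicted = future_location((0.0, 0.0), (3.0, 4.0), look_ahead=10.0)
```

Normalizing the velocity first means the prediction distance stays the same regardless of how fast the agent is moving; multiplying the raw velocity by a number of frames would be an equally reasonable choice.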

The other important distinction of this flocking algorithm is that it uses a kind of pseudo path-following behavior directed by the trail position vectors. Basically, the agent keeps following the path it was set on when its speed was randomly selected at initialization. In practice, this gives us almost straight paths unless an attractor or repeller is nearby, in which case the steering force slowly makes the agent change course.

To implement the angle of vision, I had to change the methods for separation and cohesion to include a calculation for the angle between the agent’s velocity and the direction to each trail position.
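A cohesion method with an angle-of-vision test might look roughly like the sketch below. This is a reconstruction under assumptions, not the original code: the names `angle_between` and `cohesion`, the `radius` and `half_angle` defaults, and the use of plain 2D tuples are all made up for illustration.

```python
import math


def angle_between(a, b):
    """Unsigned angle in radians between 2D vectors a and b."""
    na = math.hypot(a[0], a[1])
    nb = math.hypot(b[0], b[1])
    if na == 0.0 or nb == 0.0:
        return 0.0  # a zero vector has no direction; treat it as "in view"
    cos_theta = (a[0] * b[0] + a[1] * b[1]) / (na * nb)
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, cos_theta)))


def cohesion(position, velocity, trail_points,
             radius=50.0, half_angle=math.radians(60)):
    """Steer toward the average of trail points that are near AND in view.

    `radius` and `half_angle` are hypothetical parameters: a point counts
    only if it is within `radius` and within `half_angle` of the heading.
    """
    sum_x = sum_y = 0.0
    count = 0
    for px, py in trail_points:
        to_point = (px - position[0], py - position[1])
        dist = math.hypot(to_point[0], to_point[1])
        if dist == 0.0 or dist > radius:
            continue  # the classic radius test
        if angle_between(velocity, to_point) > half_angle:
            continue  # outside the cone of vision: the agent cannot see it
        sum_x += px
        sum_y += py
        count += 1
    if count == 0:
        return (0.0, 0.0)  # nothing visible, no steering
    # Vector from the agent to the centroid of the visible points.
    return (sum_x / count - position[0], sum_y / count - position[1])


steer = cohesion((0.0, 0.0), (1.0, 0.0),
                 [(10.0, 0.0), (-10.0, 0.0), (0.0, 10.0)])
```

In the usage line, the agent heads along the positive x axis, so only the point directly ahead passes both tests; the points behind and to the side are ignored even though they are within the radius.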

The separation method uses the same principle.

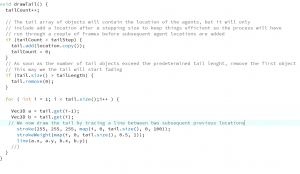

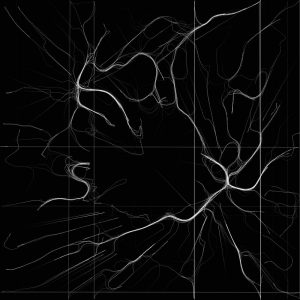

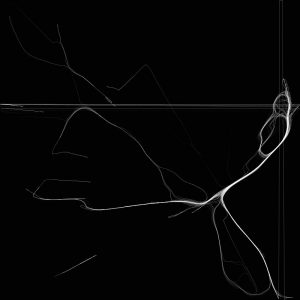

Lastly, I needed to include a method for tracing the tail. For some reason, I am getting artifacts in the composition that I haven’t been able to correct: straight lines appear across the sketch.
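For reference, a tail can be traced by storing recent positions in a bounded buffer, as in the sketch below. The `Trail` class, `max_points`, and `max_jump` are hypothetical names, not the original method. The `max_jump` filter reflects only a guess about the artifact: if agents wrap around the canvas edges (an assumption; nothing above confirms it), joining the last point before the wrap to the first point after it would draw a straight line across the whole sketch.

```python
import math
from collections import deque


class Trail:
    """Fixed-length history of an agent's positions."""

    def __init__(self, max_points=100):
        # A deque with maxlen silently drops the oldest point when full.
        self.points = deque(maxlen=max_points)

    def add(self, point):
        self.points.append(point)

    def segments(self, max_jump=50.0):
        """Return consecutive point pairs to draw as line segments.

        Pairs farther apart than `max_jump` are skipped, so a sudden
        teleport (for example an edge wrap) leaves a gap, not a line.
        """
        pts = list(self.points)
        segs = []
        for a, b in zip(pts, pts[1:]):
            if math.hypot(b[0] - a[0], b[1] - a[1]) <= max_jump:
                segs.append((a, b))
        return segs


trail = Trail()
for p in [(0, 0), (5, 0), (495, 0), (500, 0)]:
    trail.add(p)
drawable = trail.segments(max_jump=50.0)
```

If the lines come from somewhere else, such as trails shared between agents or a buffer that is never cleared on reset, this filter would hide the symptom without fixing the cause.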

The result is a really slick visualization of movement that comes fairly close to the way we navigate architectural spaces: we mostly walk in straight paths and occasionally make turns.