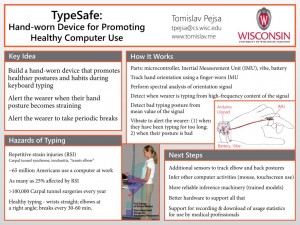

TypeSafe is a hand-worn device designed to improve the wearer’s computer usage habits by discouraging uncomfortable wrist postures and encouraging regular rest breaks during prolonged keyboard typing. The idea is to help the wearer avoid long-term health problems that can result from excessive and unhealthy computer usage, such as carpal tunnel syndrome.

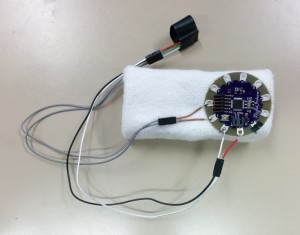

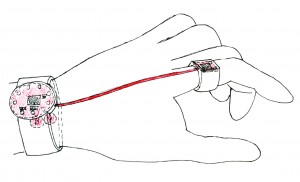

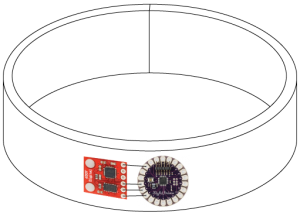

The device consists of a finger-worn inertial measurement unit (IMU) and a wristband containing an Arduino microcontroller, a vibrating component, and a battery. The IMU tracks the orientation of the wearer’s hand. The Arduino program analyzes the orientation data and infers the wearer’s hand posture and typing activity. When the hand posture is inferred to be "bad" (uncomfortable), the device alerts the wearer by vibrating. The device also keeps track of how long the wearer has been typing and vibrates to let the wearer know when they should take a break.
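The break-reminder behavior boils down to a small amount of bookkeeping: accumulate typing time, reset it after a long enough pause, and signal when the limit is reached. The Python sketch below shows one way this could work, assuming a per-sample flag that says whether the wearer is currently typing. The class name `BreakTimer`, the 30-minute typing limit, and the 5-minute pause that counts as a break are hypothetical choices for illustration, not values from the prototype (whose firmware is an Arduino sketch, not Python).

```python
from dataclasses import dataclass


@dataclass
class BreakTimer:
    """Hypothetical break-reminder logic; the thresholds are illustrative assumptions."""
    max_typing_s: float = 30 * 60   # assumed: remind after 30 min of typing
    rest_reset_s: float = 5 * 60    # assumed: a 5 min pause counts as a break
    typing_s: float = 0.0           # accumulated typing time since the last break
    idle_s: float = 0.0             # length of the current pause

    def update(self, typing: bool, dt: float) -> bool:
        """Advance the timer by dt seconds.

        Returns True when the wristband should vibrate to suggest a break,
        i.e. the wearer is typing and has exceeded the typing limit.
        """
        if typing:
            self.typing_s += dt
            self.idle_s = 0.0
        else:
            self.idle_s += dt
            if self.idle_s >= self.rest_reset_s:
                self.typing_s = 0.0  # a long enough pause resets the counter
        return typing and self.typing_s >= self.max_typing_s
```

With this design a short pause does not reset the counter, so resuming typing after a brief interruption triggers the reminder again right away; only a sufficiently long pause clears it.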

Both hand posture and typing activity are inferred by analyzing the orientation signal. The current posture is classified as bad when the orientation departs significantly from the neutral (healthy) hand posture, while typing activity is inferred from the high-frequency content of the orientation signal.
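The two inference rules can be illustrated with a short Python sketch. It assumes the orientation is available as pitch and roll angles in degrees and that a neutral posture has been captured beforehand. The names `posture_is_bad` and `TypingDetector`, the 20° tolerance, the window length, and the energy threshold are all hypothetical. As a rough stand-in for "high-frequency content", the detector uses the variance of one angle over a short sliding window, i.e. the energy left after subtracting the window mean; this is a simplification, not necessarily what the prototype does.

```python
import math
from collections import deque

NEUTRAL = (0.0, 0.0)       # assumed neutral (pitch, roll) in degrees, e.g. from calibration
POSTURE_LIMIT_DEG = 20.0   # assumed tolerance before posture counts as "bad"


def posture_is_bad(pitch: float, roll: float,
                   neutral=NEUTRAL, limit: float = POSTURE_LIMIT_DEG) -> bool:
    """Flag posture as bad when orientation departs too far from neutral."""
    deviation = math.hypot(pitch - neutral[0], roll - neutral[1])
    return deviation > limit


class TypingDetector:
    """Infers typing from rapid fluctuations of one orientation angle.

    Keeps a short sliding window of samples and compares the mean squared
    deviation from the window mean (the window variance) against a threshold.
    Slow posture changes contribute little to it over a short window, while
    the small jitter of typing raises it.
    """

    def __init__(self, window: int = 16, energy_threshold: float = 0.5):
        self.samples = deque(maxlen=window)
        self.energy_threshold = energy_threshold

    def update(self, angle: float) -> bool:
        self.samples.append(angle)
        if len(self.samples) < self.samples.maxlen:
            return False  # not enough data yet
        mean = sum(self.samples) / len(self.samples)
        energy = sum((s - mean) ** 2 for s in self.samples) / len(self.samples)
        return energy > self.energy_threshold
```

For example, a steady angle of 10° yields zero energy (not typing), while samples alternating between 9° and 11° yield an energy of 1.0, which exceeds the assumed threshold of 0.5.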

The device currently exists as a fairly crude prototype. Although the prototype supports most of the planned features, their implementation is still very basic and not robust. The original project plan foresaw several iterations on the prototype, during which I would have collected and analyzed IMU data more systematically during everyday computer usage and developed more sophisticated inference machinery for postures and activities. However, lack of time prevented these steps.

A technical hurdle that proved quite costly in terms of time was getting the IMU to work with the chosen microcontroller (Arduino LilyPad) and obtaining reliable orientation data. The solutions turned out to be relatively simple: changing the configuration of the microcontroller’s SDA and SCL pins, and making sure the connections between the microcontroller and the IMU were properly soldered. However, diagnosing and fixing these issues took long enough that less than two weeks remained for the rest of the project: data analysis, development of inference models, and user testing.

Given more time, I would make several improvements to the device:

– Use better sensors for posture tracking. The IMU seems quite prone to drift, which makes it difficult to reliably track absolute posture. Flex sensors (https://www.sparkfun.com/products/10264) may be a better, and cheaper, alternative for this purpose.

– Introduce additional sensors for tracking postures of the elbows, shoulders, and the back, as these are also prone to repetitive strain during computer usage.

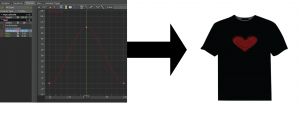

– Collect and analyze sensor data during real computer usage, and use it to train models for posture and activity classification. This poses an interesting challenge, because these models must be fast and compact enough to run on a microcontroller with limited memory and computing power.
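One model family that fits the memory constraint reasonably well is a small decision tree stored as parallel integer arrays, which on a microcontroller could live in flash and be evaluated with a simple loop and integer comparisons. The Python sketch below shows the idea. The tree itself is hand-written and hypothetical (feature 0 as pitch deviation and feature 1 as roll deviation in whole degrees, class 1 meaning "bad posture"); it is not trained on real data. A tree trained offline, for example with scikit-learn's `DecisionTreeClassifier`, whose fitted `tree_` attribute stores nodes in similar parallel arrays, could in principle be exported into this form.

```python
from typing import Sequence

# Hypothetical hand-written tree, for illustration only (not trained).
# Node i tests features[FEATURE[i]] <= THRESHOLD[i] and goes LEFT or RIGHT.
# A feature index of -1 marks a leaf, whose class label is stored in THRESHOLD.
FEATURE = [0, 1, -1, -1, -1]
THRESHOLD = [20, 5, 0, 1, 1]   # integer units, e.g. whole degrees of deviation
LEFT = [1, 2, -1, -1, -1]
RIGHT = [4, 3, -1, -1, -1]


def predict(features: Sequence[int]) -> int:
    """Walk the tree from the root and return the leaf's class (0 = ok, 1 = bad)."""
    node = 0
    while FEATURE[node] != -1:
        if features[FEATURE[node]] <= THRESHOLD[node]:
            node = LEFT[node]
        else:
            node = RIGHT[node]
    return THRESHOLD[node]
```

For instance, a pitch deviation of 10 and a roll deviation of 3 lead to the leaf labeled 0, while a pitch deviation of 30 goes straight to a leaf labeled 1. Evaluation cost grows only with tree depth, and the whole model here is 20 small integers.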