- What each individual in the group worked on over the last week

- All four of us met in the lab this week (11/30/2016, 7:00 am – 9:00 am). Before the meeting, each of us worked on our own submodule. In the lab we combined the submodules, integrated them, and viewed the result through the Oculus Rift.

- Submodules worked on:

- Bixi – Adding more text to the video.

- Ameya – Automatic appearance and disappearance of objects based on text.

- Zhicheng – Moving objects in a straight line.

- Shruthi – Mapping of objects to words encountered in streaming text.

- A description of the accomplishments made

- We successfully automated the appearance of the subset of objects that are mentioned in the text.

- The subset is formed by parsing the input text and building a sub-dictionary from the superset dictionary of pre-created objects.

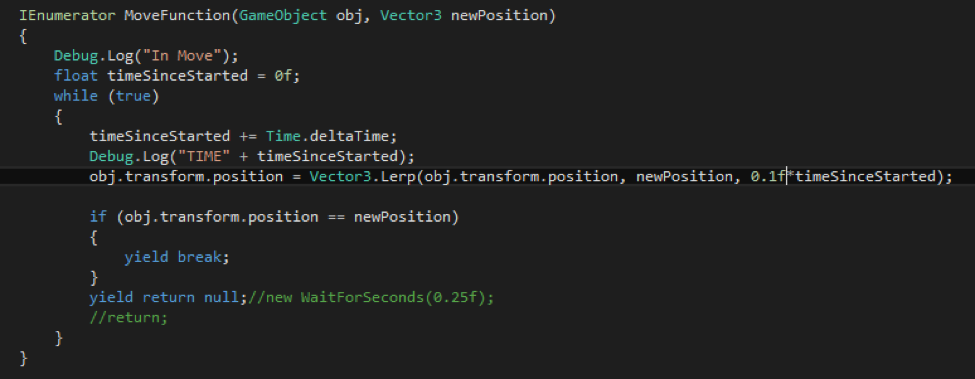

- We were also able to successfully move objects by defining our own method MoveFunction().
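The sub-dictionary step described above can be sketched roughly as follows. This is a minimal, engine-independent illustration in Python, not our actual project code: the names `OBJECT_DICTIONARY` and `build_sub_dictionary` and the object identifiers are hypothetical, and matching is assumed to be a simple lowercase whole-word lookup.

```python
import re

# Hypothetical superset dictionary: word -> identifier of a pre-created object.
OBJECT_DICTIONARY = {
    "ball": "BallObject",
    "box": "BoxObject",
    "car": "CarObject",
}


def build_sub_dictionary(text, superset):
    """Return the entries of `superset` whose key occurs as a word in `text`."""
    # Assumption: words are matched case-insensitively, letters only.
    words = set(re.findall(r"[a-z]+", text.lower()))
    return {word: obj for word, obj in superset.items() if word in words}


sub_dictionary = build_sub_dictionary("The ball moves up", OBJECT_DICTIONARY)
print(sub_dictionary)
```

Only the objects in the resulting sub-dictionary would then be made visible in the scene.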

- A description of the problems encountered:

- Moving the object was not trivial at first.

- We had to set the timing and position correctly to get smooth movement of the ball object.

- Mapping words in the input text to objects currently works, but the mapping mechanism does not scale well as the text gets longer.

- Requiring a pre-instantiated range of objects in the main object dictionary is neither practical nor scalable.

- We also worked on improving the experience by placing stationary text at 3 different places in the video. Adjusting the size and placement noticeably improves how it looks. Last week we mentioned further improvements (see plans for next week), but we wanted to get started on the automation part and have postponed them for now.
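To illustrate the timing and position issue noted above for the ball, here is a minimal sketch of straight-line movement by linear interpolation. It is an assumption about one common way to get smooth motion, not a copy of our MoveFunction(): the helper `interpolate_position`, the 1-second duration, and the 0.25-second frame step are all hypothetical.

```python
def interpolate_position(start, end, elapsed, duration):
    """Linearly interpolate from `start` to `end`; progress is clamped to [0, 1]."""
    t = max(0.0, min(1.0, elapsed / duration))
    return tuple(s + (e - s) * t for s, e in zip(start, end))


# Hypothetical example: the ball moves 2 units up over 1 second,
# sampled every 0.25 seconds.
start = (0.0, 0.0, 0.0)
end = (0.0, 2.0, 0.0)
positions = [interpolate_position(start, end, i * 0.25, 1.0) for i in range(5)]

for position in positions:
    print(position)
```

Driving the position from elapsed time rather than from a fixed per-frame offset keeps the speed constant even when frames arrive at uneven intervals.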

- Plans for the upcoming week

- We intend to explore dynamic creation and instantiation of objects, rather than the static approach we use now.

- Create multiple objects and have them appear simultaneously, so that they co-exist in a scene at a given instant.

- Create multiple MoveFunction() methods, one for each object.

- Move a few objects while keeping the others stationary, all relative to the frame the camera is positioned to view.

- This is carried over from last week. We still wish to experiment with 2 alternative ways of displaying the text:

- Display text and video in separate, alternating frames.

- Move the text with the camera. Allow the user to zoom in/out or move it using the controller.
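The dynamic creation and per-object movement planned for the upcoming week could look roughly like the sketch below. It is only an exploration under stated assumptions: `SceneObject`, `FACTORIES`, `create_objects`, and `move_up` are hypothetical names, and the real implementation would create objects through the rendering engine rather than plain Python classes.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SceneObject:
    name: str
    position: Vec3
    # Per-object move function; None means the object stays stationary.
    move: Optional[Callable[[Vec3, float], Vec3]] = None

    def update(self, dt):
        """Advance the object by `dt` seconds using its own move function."""
        if self.move is not None:
            self.position = self.move(self.position, dt)


def move_up(position, dt, speed=1.0):
    """Hypothetical move function: translate along +y at `speed` units/second."""
    x, y, z = position
    return (x, y + speed * dt, z)


# Hypothetical factories: objects are created on demand, not pre-instantiated.
FACTORIES: Dict[str, Callable[[], SceneObject]] = {
    "ball": lambda: SceneObject("ball", (0.0, 0.0, 0.0), move_up),
    "box": lambda: SceneObject("box", (1.0, 0.0, 0.0)),
}


def create_objects(words):
    """Create one new scene object for each word that has a factory."""
    return [FACTORIES[word]() for word in words if word in FACTORIES]


scene = create_objects(["the", "ball", "box"])
for obj in scene:
    obj.update(0.5)  # the ball moves, the box stays where it is
    print(obj.name, obj.position)
```

Because each object carries its own move function, several objects can co-exist in the scene while only some of them move.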

- At least one piece of media related to your work (image, video, audio, etc)

Automatic text-to-animation mapping for the input text “The ball moves up”