- What each individual in the group worked on over the last week

- Ameya and Zhicheng: worked on capturing 360-degree images using the Photo Sphere mode in Google Camera, importing them into Unity, and exploring the features available for navigating the scene depicted in an image.

- Bixi and Shruthi: worked on downloading a couple of 360-degree videos from YouTube, importing a video into Unity, and superimposing text onto successive frames of the 360-degree video.

- A description of the accomplishments made

- We successfully mapped a 360-degree image, captured using the Photo Sphere option in Google Camera, onto the interior of a sphere in Unity, as shown in Figure 1.

- We placed a camera inside the sphere and were able to view the scene in the 360-degree image by moving the camera along its various axes in Unity, as shown in Figure 2.

- We were also able to superimpose text onto each frame of a 360-degree video using Apple's iMovie application. The media clip is embedded under point 5.
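
Viewing a photosphere from a camera at the centre of a sphere amounts to looking up, for each view direction, the matching pixel in the panorama. The sketch below illustrates that lookup in plain Python rather than Unity code. It assumes the image is stored in equirectangular projection, with +z as forward, +y as up, and the image centre corresponding to the forward direction; the function name `direction_to_equirect_uv` and these conventions are our own assumptions for illustration, not something Unity prescribes.

```python
import math


def direction_to_equirect_uv(x, y, z):
    """Map a 3D view direction to (u, v) in [0, 1] on an equirectangular image.

    Assumed convention (hypothetical, for illustration only):
    +z is forward, +y is up, +x is right; u = 0.5 is straight ahead,
    v = 0 is the top row of the image and v = 1 the bottom row.
    """
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0:
        raise ValueError("direction must be non-zero")
    # Normalise so the direction lies on the unit sphere.
    x, y, z = x / length, y / length, z / length
    # Longitude: angle around the vertical axis, 0 when looking forward.
    lon = math.atan2(x, z)
    # Latitude: angle above the horizon, clamped to guard against rounding.
    lat = math.asin(max(-1.0, min(1.0, y)))
    u = 0.5 + lon / (2.0 * math.pi)
    v = 0.5 - lat / math.pi
    return u, v
```

Under these assumptions, looking straight ahead samples the centre of the image, and looking straight up samples the top row.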

- A description of the problems encountered (this section may be short for this week as we are still very early)

- We are not able to import the video into Unity. Importing it into the project's Assets folder takes too long, and the Unity application subsequently hangs. After restarting the system, we tried copying the video into the Assets sub-folder of the project folder via the command line. After that, Unity failed to recognize the project as a valid project. We think this is due to some metadata corruption or inconsistency.

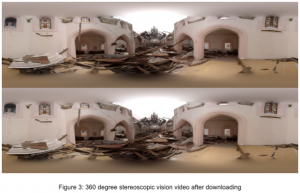

- Before downloading, the 3D video appears in YouTube as shown in Figure 4. After downloading, the video looks as shown in Figure 3. We assume YouTube's video framework performs some processing to turn a video that looks like Figure 3 into what is shown in Figure 4. We would like to understand the details of this transformation.

- Plans for the upcoming week

- As an initial step, we want to experiment with the Rift and evaluate the experience we get from the existing photosphere implementation in Unity.

- We would also like to experiment with the Vive and choose the better of the two.

- Next, we intend to focus on implementing stereo vision in Unity. Figure 3 depicts a snapshot of one such stereo video. By combining the two views in the snapshot, stereo vision can be achieved.

- We wish to solve the video import issue so that we can move forward and extend the two steps above to every frame of the video, in effect achieving a 3D experience in the Rift.
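
As a starting point for the stereo step, one possible approach is to split each video frame into its two views before sending one to each eye. The sketch below is plain Python, not Unity code, and rests on assumptions we have not yet verified: that the downloaded frames pack the two views either top-bottom or side-by-side, and that the top (or left) half belongs to the left eye. The function name `split_stereo_frame` and the `layout` parameter are hypothetical.

```python
def split_stereo_frame(frame, layout="top-bottom"):
    """Split one packed stereo frame into (left_eye, right_eye) views.

    `frame` is a list of pixel rows (each row a list of pixels).
    Assumption (unverified): the top half, or the left half for
    side-by-side layouts, is the left-eye view.
    """
    height = len(frame)
    if height == 0:
        raise ValueError("frame must contain at least one row")
    width = len(frame[0])
    if layout == "top-bottom":
        if height % 2 != 0:
            raise ValueError("top-bottom layout needs an even number of rows")
        half = height // 2
        # Upper rows form one view, lower rows the other.
        return frame[:half], frame[half:]
    if layout == "side-by-side":
        if width % 2 != 0:
            raise ValueError("side-by-side layout needs an even row width")
        half = width // 2
        # Left columns form one view, right columns the other.
        return [row[:half] for row in frame], [row[half:] for row in frame]
    raise ValueError("unknown layout: " + layout)
```

Applying this to every frame would yield two image sequences, which could then be mapped onto separate spheres (one per eye) in the same way as the single photosphere.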

- At least one piece of media related to your work (image, video, audio, etc)