Team Exploring Interfaces – Alex, Dave, Nicky, Ryan

Exploring Interfaces in Virtual Reality

Keyboard and mouse are the standard for 2D, non-immersive interfaces. Do they work in 3D immersive interfaces, or are thumbsticks, wands, or hand trackers better? Or maybe: what is each one best at? And what kinds of things do we even want to do in VR?

Step 1: Explore the Current State of the Art

Step 2: Mock Up Tasks, Input Methods, and Interfaces

Step 3: Test, with people

Example Interfaces:

Four interfaces, clockwise from top left: Leap Motion Dragonfly AR demo, Motorcar VR window manager, Heavy Rain ARI, Iron Man poking at things with his eyes.

Note that the top two actually exist, and the bottom two are from fiction.

Example Complex Tasks:

What do we mean by “Mock Up”?

A small program that combines: atomic task, input method, interface mapping, evaluation.

In the complex task of 3D modeling, one atomic task might be selecting a point. Input methods might be a mouse or motion tracked hands. Interface mappings might be moving the points directly, or giving interface “handles” to make precise movements easier to describe. We might evaluate speed, accuracy, or comfort.

So we ask you to move points using a few different input methods, and see which works best. Which makes you tired? Which makes you faster? Which takes less concentration?
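To make the four pieces concrete, here is a rough sketch of what one mock-up might record and how we could compare input methods afterwards. It is plain Python rather than Unity code, and everything in it is an assumption for illustration: the `Trial` fields, the method and mapping labels, and the 1 to 5 comfort scale are all hypothetical, not a design we have settled on.

```python
from collections import defaultdict
from dataclasses import dataclass
from math import dist
from statistics import mean

Point = tuple[float, float, float]


@dataclass
class Trial:
    """One attempt at the atomic task 'move a point to a target'."""
    input_method: str  # hypothetical label, e.g. "mouse" or "tracked_hands"
    mapping: str       # hypothetical label, e.g. "direct" or "handles"
    target: Point      # where the point was supposed to end up
    placed: Point      # where the participant actually left it
    seconds: float     # time taken (speed)
    comfort: int       # self-reported, 1 (bad) to 5 (good); scale is an assumption

    @property
    def error(self) -> float:
        """Accuracy: straight-line distance between target and placed point."""
        return dist(self.target, self.placed)


def summarize(trials):
    """Average speed, accuracy, and comfort per (input method, mapping) pair."""
    groups = defaultdict(list)
    for t in trials:
        groups[(t.input_method, t.mapping)].append(t)
    return {
        key: {
            "mean_seconds": mean(t.seconds for t in ts),
            "mean_error": mean(t.error for t in ts),
            "mean_comfort": mean(t.comfort for t in ts),
        }
        for key, ts in groups.items()
    }
```

Feeding `summarize` a list of trials gives one row per input method and mapping, which is enough to start answering "which is faster" and "which is more accurate". Fatigue and concentration would need their own measures, which this sketch does not attempt.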

Skills:

- we can code and things

What we can almost surely do:

- make at least one mock-up in Unity for the Oculus

- use “easy” input methods: keyboard, mouse, xbox controller, head tracking

What we can probably do:

- Some kind of survey of the state of the art, with some demos (compile motorcar?)

- More input methods:

  - leap motion

  - kinect

  - a wand (wiimote?)

  - passive haptics + hand tracking (if the interface is poking a sphere, actually have a physical sphere)

- More tasks:

  - describe a rotation

  - navigate menus

  - position windows

  - navigate in space

What we can maybe do:

- integrate our findings with an existing interface

- make more interesting input methods (what can we rig with a kinect and some props?)

- some kind of demo that’s fun to play with

Equipment:

- Oculus Rift

- Maybe the CAVE?

- Unity

- Whatever input devices we can get working

- Maybe Blender for modeling?

Next Steps:

- start exploring

- brainstorm possible mock ups

- start building the Unity infrastructure we need

Links:

Motorcar:

https://github.com/evil0sheep/motorcar

Heavy Rain ARI:

Leap Dragonfly interface thing:

Magic Leap UI mock up:

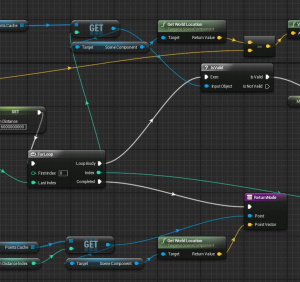

Unreal Engine Blueprints:

https://docs.unrealengine.com/latest/INT/Engine/Blueprints/index.html

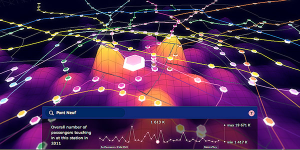

Paris Metro data visualization:

http://infosthetics.com/archives/2013/03/metropolitain_exploring_the_paris_metro_in_3d.html