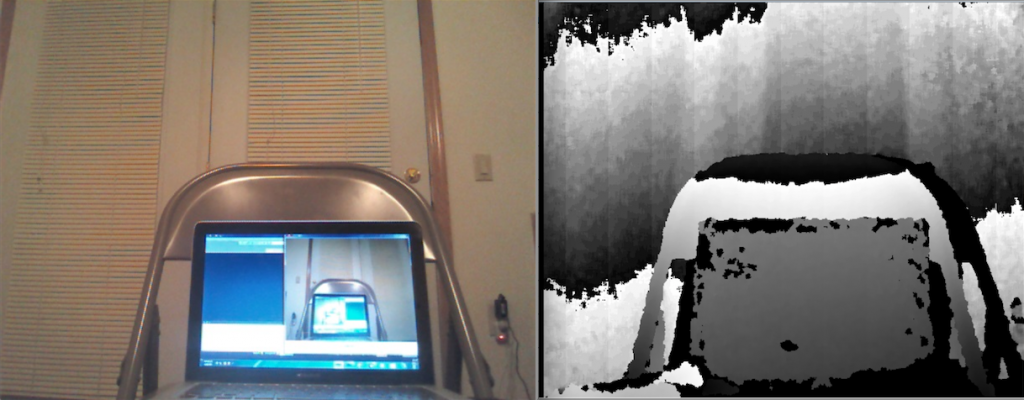

For my independent study project, I am interested in adding the Leap Motion hand-tracking controller as an additional input device for the ZSpace. The ZSpace is a holographic display that lets the user manipulate an object in a virtual environment with a stylus, as if the object were right in front of them. By integrating the Leap Motion, I hope to offer a more natural way to manipulate the object using both hands.

The specific hand gestures are still being worked out, but I envision that the Leap Motion could also let the user draw objects directly with the index finger.
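A minimal sketch of how index-finger drawing might turn tracked fingertip positions into strokes, under stated assumptions. The input format is hypothetical: each frame is a fingertip position `(x, y, z)` plus a `drawing_active` flag that some not-yet-chosen gesture would set. This is not the Leap Motion SDK's API, and reading real tracking data is left out. The `build_strokes` function and the `min_step` threshold are illustrative names only; the threshold is in whatever unit the tracker reports.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]


def build_strokes(
    frames: Iterable[Tuple[Point, bool]], min_step: float = 2.0
) -> List[List[Point]]:
    """Group index-fingertip samples into strokes (hypothetical input format).

    A new stroke starts whenever drawing_active switches on. While it stays on,
    a sample is appended only if it lies at least min_step away from the last
    kept point, which drops jitter from a nearly stationary finger.
    """
    strokes: List[List[Point]] = []
    was_drawing = False
    for tip, drawing_active in frames:
        if drawing_active:
            if not was_drawing:
                # The finger just started drawing: open a new stroke.
                strokes.append([tip])
            elif math.dist(tip, strokes[-1][-1]) >= min_step:
                # Far enough from the previous point: extend the current stroke.
                strokes[-1].append(tip)
        was_drawing = drawing_active
    return strokes


if __name__ == "__main__":
    frames = [
        ((0, 0, 0), True),
        ((1, 0, 0), True),   # too close to (0, 0, 0), skipped
        ((3, 0, 0), True),
        ((5, 0, 0), False),  # drawing paused
        ((10, 0, 0), True),  # starts a second stroke
        ((10, 0, 5), True),
    ]
    print(build_strokes(frames))
```

Running the example prints two strokes, `[[(0, 0, 0), (3, 0, 0)], [(10, 0, 0), (10, 0, 5)]]`. Filtering by distance rather than keeping every frame is one simple way to keep a drawn polyline manageable; a real implementation might also smooth the points before rendering them on the ZSpace.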

Leap Motion

ZSpace