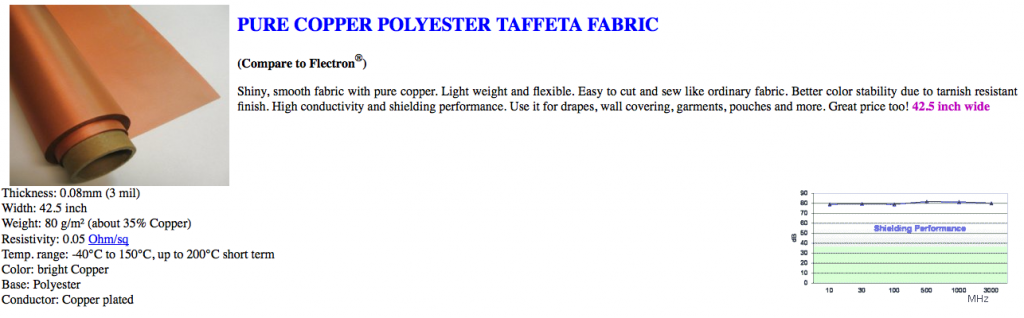

My first round of testing focused on etching conductive fabric to create circuits. I'm working with a sample of copper-coated taffeta from LessEMF.

Working off of this tutorial, I began by screen printing a design onto my taffeta with a resist, in this case Vaseline. I then soaked the taffeta in a vinegar and salt solution, which should etch away the unprotected copper coating and leave behind the bare polyester fabric. The tutorial suggested a minimum etching time of 12 hours; however, I saw no visible effect after that time, so I left the taffeta in the etching solution for several days.

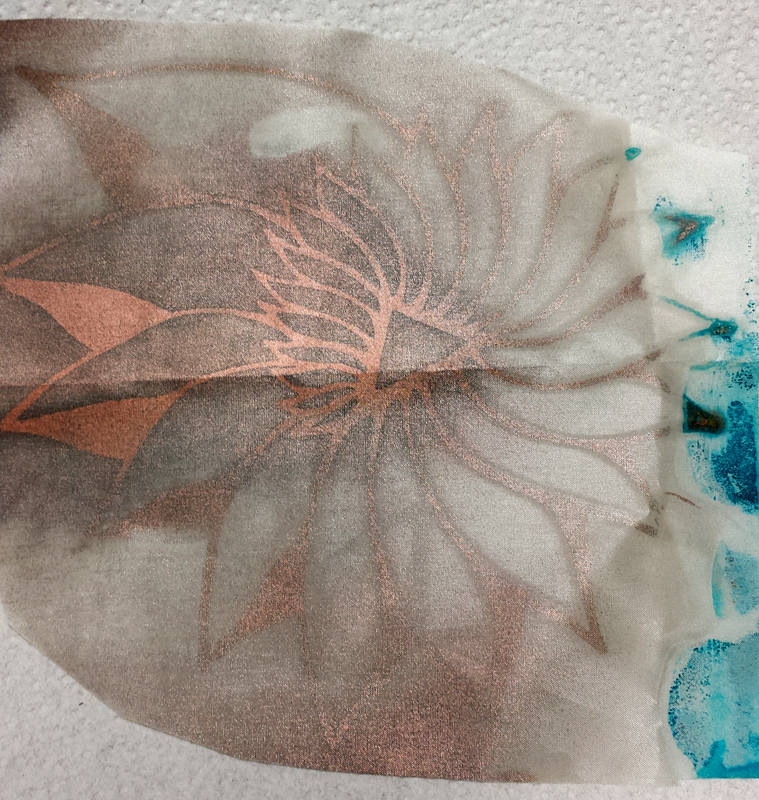

Here is my initial outcome:

As you can see, the fabric did etch, but the result is less than perfect. There are a few variables I am refining for my next test:

1. Solution mix – The tutorial calls for a ratio of 100 ml vinegar to 7 ml salt, but I misremembered it and mixed a solution of 100 ml vinegar to 70 ml salt, ten times the intended amount of salt. Once I realized my error, I removed some of the excess salt, but I am uncertain what the makeup of my final solution was.

2. Salt type – For the initial experiment, I used pickling salt because it was what I had on hand. For the next round I will be using standard iodized salt, in case the iodine content factors into the etch.

3. Container – The first test was executed in a basic Tupperware bowl, which I quickly realized is not the ideal container given the delicate nature of the Vaseline resist. Subsequent experiments will be conducted in a shallow pan.

4. Resist thickness – It may be beneficial to apply the Vaseline more liberally when printing, in order to better protect the copper from the etching solution.
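To avoid repeating the mixing mistake in a larger shallow pan, it can help to work out the quantities before pouring. The sketch below assumes the tutorial's 100:7 vinegar-to-salt ratio is by volume, as quoted above, and uses a hypothetical 20 cm × 30 cm pan filled 1 cm deep; the helper names are illustrative only. Keep in mind that different salts pack differently, so a volume of pickling salt and the same volume of iodized table salt may not weigh the same.

```python
# Ratio from the tutorial as quoted in this post: 100 ml vinegar to 7 ml salt.
VINEGAR_PARTS = 100.0
SALT_PARTS = 7.0


def salt_for(vinegar_ml: float) -> float:
    """Volume of salt (ml) that keeps the 100:7 vinegar-to-salt ratio."""
    return vinegar_ml * SALT_PARTS / VINEGAR_PARTS


def pan_fill_ml(length_cm: float, width_cm: float, depth_cm: float) -> float:
    """Liquid volume needed to fill a rectangular pan to a given depth.

    1 cm^3 equals 1 ml, so the volume is simply length * width * depth.
    """
    return length_cm * width_cm * depth_cm


if __name__ == "__main__":
    # Hypothetical shallow pan: 20 cm x 30 cm, filled 1 cm deep.
    vinegar = pan_fill_ml(20, 30, 1)
    print(f"vinegar: {vinegar:.0f} ml, salt: {salt_for(vinegar):.0f} ml")
    # The first mix used 70 ml of salt per 100 ml of vinegar instead of 7 ml.
    print(f"salt overshoot in first test: {70 / salt_for(100):.0f}x")
```

For the hypothetical pan this prints 600 ml of vinegar and 42 ml of salt, and confirms that the first mix had 10x the intended salt.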

Next steps:

I am going to put together a second round of tests, applying what I have learned from the initial experiment. Once I get a clean etch, I am going to experiment with a few different dye formulations to see if it is possible to color the white taffeta without affecting the conductivity of the copper plating. I'd also like to use a multimeter to measure the resistance across my etched design and start getting an idea of any potential size limitations.
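One way to turn multimeter readings into a sense of size limits is to compare them with the ideal resistance of a rectangular trace, R = R_s × L / W, where R_s is the fabric's sheet resistance in ohms per square. The sketch below assumes a hypothetical R_s of 0.05 Ω/sq (a placeholder, not a measured or published value for this taffeta), and the function names and the "2x" threshold are illustrative assumptions. A reading far above the estimate for a narrow trace would hint that the etch ate into or broke that trace.

```python
# Hypothetical placeholder: substitute the sheet resistance measured (or listed)
# for your own fabric. This is NOT a LessEMF specification.
SHEET_OHMS_PER_SQ = 0.05


def trace_resistance(sheet_ohms_per_sq: float, length_mm: float, width_mm: float) -> float:
    """Ideal end-to-end resistance of a uniform rectangular trace.

    A trace L long and W wide covers L / W "squares", each adding the
    sheet resistance, so R = R_s * L / W. Units of L and W cancel.
    """
    if width_mm <= 0 or length_mm < 0:
        raise ValueError("width must be > 0 and length must be >= 0")
    return sheet_ohms_per_sq * length_mm / width_mm


def looks_damaged(measured_ohms: float, expected_ohms: float, factor: float = 2.0) -> bool:
    """Flag a trace whose reading is well above the ideal estimate.

    The default factor of 2 is an arbitrary, illustrative threshold.
    """
    return measured_ohms > expected_ohms * factor


if __name__ == "__main__":
    # Expected resistance of 100 mm long traces at several widths.
    for width in (1, 2, 5, 10):
        r = trace_resistance(SHEET_OHMS_PER_SQ, 100, width)
        print(f"{width:>2} mm wide: {r:.2f} ohms")
```

Because values like these are small, the multimeter's own probe and lead resistance can be a noticeable share of each reading; touching the probes together first and subtracting that value gives a fairer comparison.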