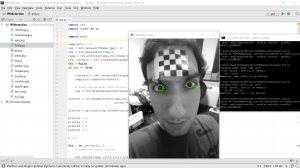

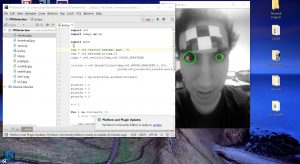

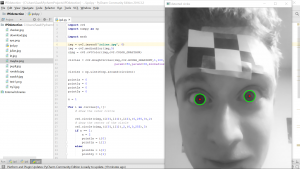

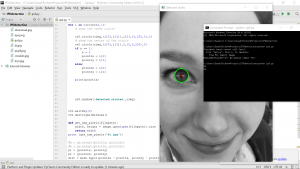

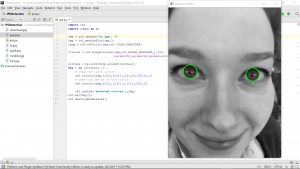

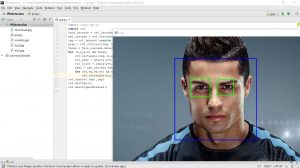

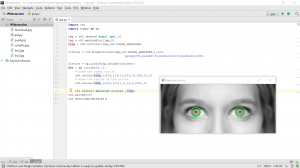

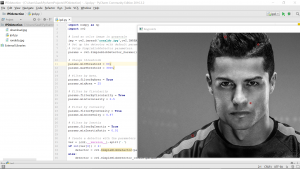

The goal of my final project was to create an IPD (inter-pupillary distance) calculator, i.e., a program that detects the two pupils of a subject or research participant and calculates the distance between them in millimeters. Pupil detection is done through circle detection with Hough Circles, and the pixel-to-millimeter conversion comes from a simple camera calibration using mouse input. For the most part I have accomplished this goal, with some minor consistency issues.
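The pipeline described above can be sketched roughly as follows. This is a minimal sketch, not the project's actual code. It assumes calibration means clicking two points a known real-world distance apart (for example, two marks on a ruler held in the plane of the face). The helper names (`mm_per_pixel`, `ipd_mm`, `collect_two_clicks`, `detect_pupils`) and every `cv2.HoughCircles` parameter value are hypothetical placeholders that would need tuning for real images.

```python
import math


def mm_per_pixel(p1, p2, known_mm):
    """Scale factor (mm per pixel) from two clicked points known_mm apart."""
    pixel_dist = math.dist(p1, p2)
    if pixel_dist == 0:
        raise ValueError("calibration points must be distinct")
    return known_mm / pixel_dist


def ipd_mm(left_center, right_center, scale):
    """Distance between two pupil centres (in pixels) converted to millimetres."""
    return math.dist(left_center, right_center) * scale


def collect_two_clicks(image, window="calibrate"):
    """Let the user left-click two calibration points; Esc aborts early."""
    import cv2  # imported lazily so the pure helpers above work without OpenCV
    clicks = []

    def on_mouse(event, x, y, flags, param):
        if event == cv2.EVENT_LBUTTONDOWN and len(clicks) < 2:
            clicks.append((x, y))

    cv2.namedWindow(window)
    cv2.setMouseCallback(window, on_mouse)
    while len(clicks) < 2:
        cv2.imshow(window, image)
        if cv2.waitKey(20) & 0xFF == 27:  # Esc key
            break
    cv2.destroyWindow(window)
    return clicks


def detect_pupils(gray):
    """Return up to two circle centres, left to right, from a uint8 grayscale image."""
    import cv2
    blurred = cv2.medianBlur(gray, 5)  # reduce noise before the Hough transform
    circles = cv2.HoughCircles(
        blurred,
        cv2.HOUGH_GRADIENT,
        dp=1,
        minDist=50,    # hypothetical: the two pupils should be well separated
        param1=100,    # upper threshold for the internal Canny edge detector
        param2=30,     # accumulator threshold; lower values find more (possibly false) circles
        minRadius=5,
        maxRadius=40,
    )
    if circles is None:
        return []
    # circles has shape (1, N, 3) holding (x, y, radius); keep the first two detections
    found = sorted(circles[0][:2].tolist(), key=lambda c: c[0])
    return [(x, y) for x, y, _r in found]
```

For example, if the two calibration clicks are 100 px apart on a 10 mm span, the scale is 0.1 mm/px. Pupil centres 620 px apart would then give an IPD of about 62 mm. Because the conversion is a single linear scale, it is only valid for points at roughly the same distance from the camera as the calibration object.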

Other accomplishments were learning how to use the OpenCV library (and code libraries in general) and the Python language. I came into this project with negligible knowledge of Python's syntax and rules, and by the end of the semester I feel I have a much better grasp of the language. Finally, this was the first project that forced me to learn and use the command line, since working with Python meant installing packages and running scripts from a terminal.

2) Describe your overall feelings about the project. Are you happy, content, frustrated, etc with the results?

3) What were some of the largest hurdles you encountered over the semester and how did you approach these challenges.

As mentioned in answer 2 and in many of my blog posts, the biggest hurdle I encountered was inconsistent results. A temporary (although admittedly not great) solution has been to adjust the parameters of my methods on a per-image basis as needed.
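One way to make that per-image tuning faster is to expose the `HoughCircles` parameters as OpenCV trackbars and adjust them interactively while watching the detections update. This is a sketch of an assumed approach, not something the project is stated to use. The names `DEFAULTS`, `params_from_positions`, and `tune` are hypothetical, and the default values are illustrative guesses. Note that this makes tuning quicker but does not remove the underlying inconsistency.

```python
# Hypothetical starting values for the tunable HoughCircles parameters.
DEFAULTS = {"param1": 100, "param2": 30, "minRadius": 5, "maxRadius": 40}


def params_from_positions(positions):
    """Clamp raw trackbar positions to values HoughCircles will accept."""
    p = dict(DEFAULTS)
    p.update(positions)
    p["param1"] = max(1, p["param1"])          # Canny threshold must be positive
    p["param2"] = max(1, p["param2"])          # accumulator threshold must be positive
    p["minRadius"] = max(0, p["minRadius"])
    p["maxRadius"] = max(p["minRadius"], p["maxRadius"])  # keep maxRadius >= minRadius
    return p


def tune(gray, window="tune"):
    """Show live detections on a uint8 grayscale image; press Esc to accept the values."""
    import cv2  # imported lazily so params_from_positions works without OpenCV
    cv2.namedWindow(window)
    for name, value in DEFAULTS.items():
        cv2.createTrackbar(name, window, value, 255, lambda _v: None)
    while True:
        raw = {name: cv2.getTrackbarPos(name, window) for name in DEFAULTS}
        p = params_from_positions(raw)
        circles = cv2.HoughCircles(gray, cv2.HOUGH_GRADIENT, dp=1, minDist=50, **p)
        shown = cv2.cvtColor(gray, cv2.COLOR_GRAY2BGR)
        if circles is not None:
            for x, y, r in circles[0]:
                cv2.circle(shown, (int(x), int(y)), int(r), (0, 255, 0), 2)
        cv2.imshow(window, shown)
        if cv2.waitKey(30) & 0xFF == 27:  # Esc key
            break
    cv2.destroyWindow(window)
    return p
```

The values returned by `tune` could be saved alongside each image. That way, the per-image settings are at least recorded and reproducible rather than edited by hand in the code each time.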

Other big hurdles were finding and understanding documentation and applying it to my project. Most of the OpenCV resources available were written for C++, and the Python resources I found often targeted Python 2 rather than Python 3. As a result, much of the online help I found had to be adapted before it would work in my Python 3 code.

One scary hurdle was having to urgently factory-reset my computer a few weeks ago after an onslaught of viruses, which forced me to reinstall OpenCV, NumPy, PyCharm, and other tools. Fortunately, all my code was backed up, so none of it was lost.