Brief entry; I’m still kind of beat from quals.

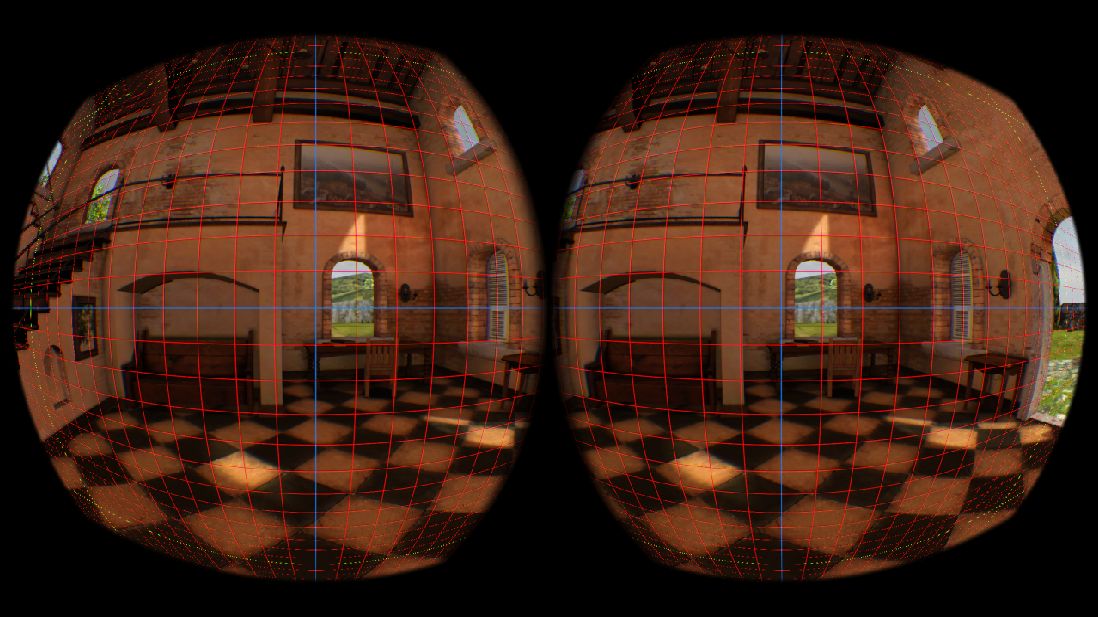

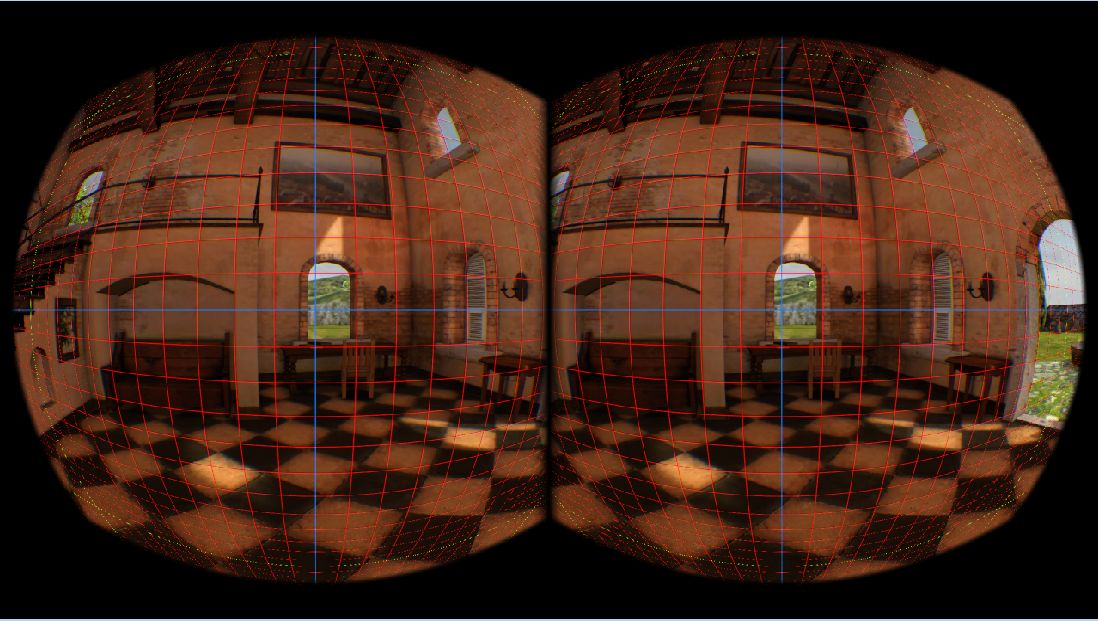

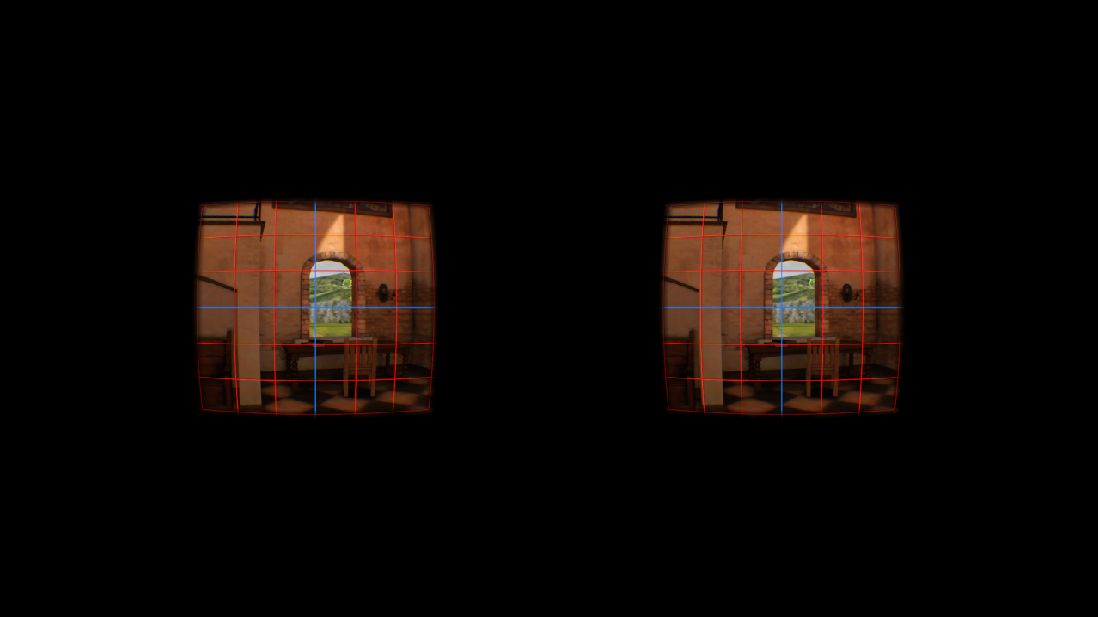

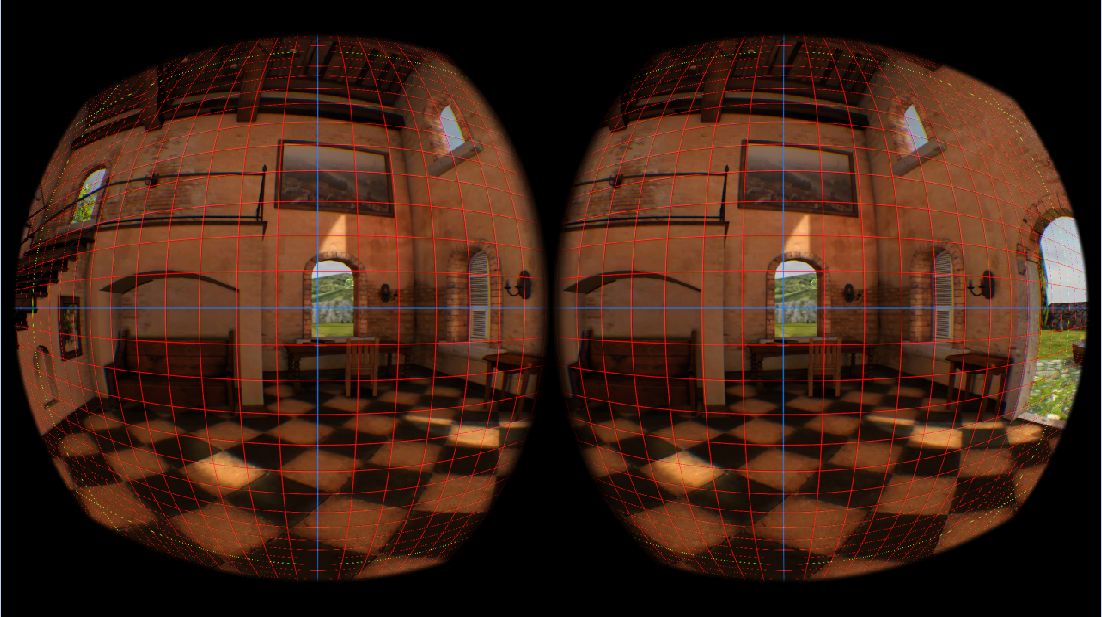

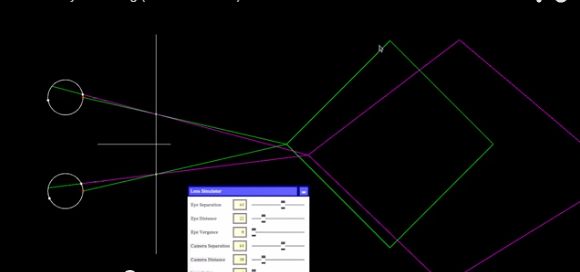

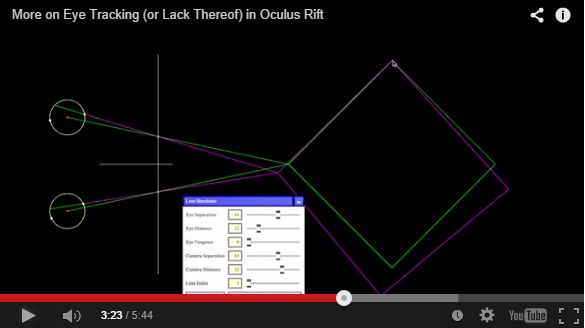

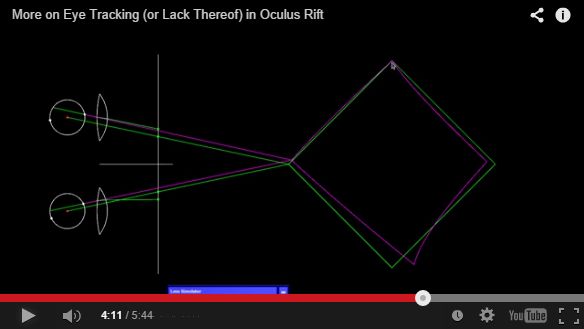

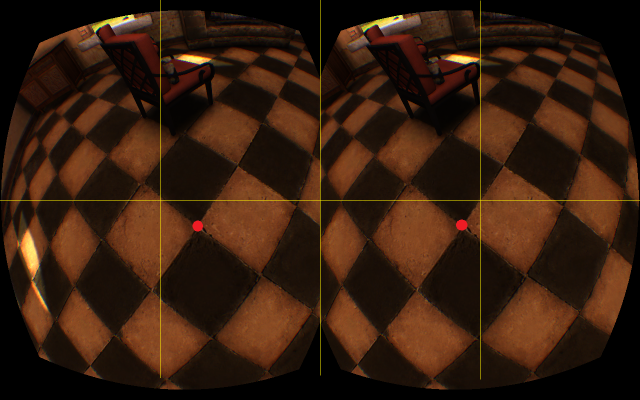

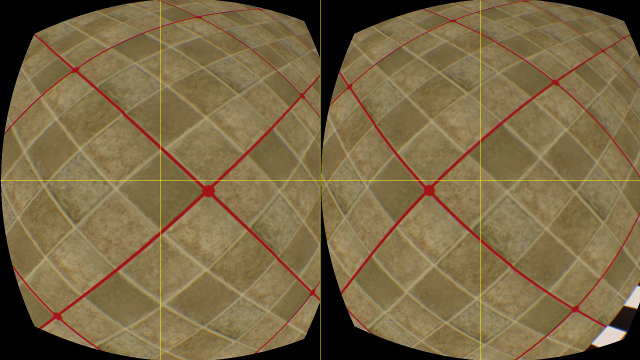

(Maybe I’ll flesh this entry out into a description of the Rift’s particular rendering peculiarities sometime; there seem to be roughly three stages: virtual-to-screen, screen-to-lens, and lens-to-eye. But for now:)

Deep in the 0.4.2 Oculus Rift SDK lurk functions for setting display render properties. One of these functions has some illustrative comments.

    FovPort CalculateFovFromEyePosition ( float eyeReliefInMeters,
                                          float offsetToRightInMeters,
                                          float offsetDownwardsInMeters,
                                          float lensDiameterInMeters,
                                          float extraEyeRotationInRadians /*= 0.0f*/ )

The function returns an FovPort, which describes a viewport’s field of view as the tangents of the angles between the viewing vector and the edges of the field of view: four values, one each for up, down, left, and right. The intent is summed up in another comment:

    // 2D view of things:
    //       |-|            <--- offsetToRightInMeters (in this case, it is negative)
    // |=======C=======|    <--- lens surface (C=center)
    //  \    |       _/
    //   \   R     _/
    //    \  |   _/
    //     \ | _/
    //      \|/
    //       O  <--- center of pupil

    // (technically the lens is round rather than square, so it's not correct to
    // separate vertical and horizontal like this, but it's close enough

This shows an asymmetric view frustum determined by the eye’s position relative to the lens. It seems to describe the physical field of view through the lens onto the screen. It’s unclear what other rendering properties this might influence (a comment in a calling function implies render target size, and the distortion shader should be expected to depend on it too), but I’ve confirmed that it affects the projection matrix.
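To make the geometry concrete, here is a minimal sketch of how the diagram's distances could turn into the four tangents, and how those tangents could then feed an off-axis projection. This is my reading of the comment, not the SDK's code: `FovTangents`, `fovFromEyePosition`, `ProjectionTerms`, and `projectionTerms` are hypothetical names, and the sign conventions (positive offsets mean the eye sits right of and below the lens center, NDC y points up) are assumptions.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for the SDK's FovPort: tangents of the angles between
// the viewing vector and each edge of the field of view.
struct FovTangents {
    float up, down, left, right;
};

// Assumed geometry, read off the 2D diagram: the pupil sits eyeRelief behind
// the lens plane, shifted by (offsetRight, offsetDown) from the lens center.
// Each tangent is (lateral distance to that lens edge) / (eye relief).
FovTangents fovFromEyePosition(float eyeRelief, float offsetRight,
                               float offsetDown, float lensDiameter) {
    assert(eyeRelief > 0.0f && lensDiameter > 0.0f);
    const float half = 0.5f * lensDiameter;
    FovTangents fov;
    fov.up    = (half + offsetDown)  / eyeRelief;
    fov.down  = (half - offsetDown)  / eyeRelief;
    fov.left  = (half + offsetRight) / eyeRelief;
    fov.right = (half - offsetRight) / eyeRelief;
    return fov;
}

// Scale and offset terms of an off-axis projection, so that
//   x_ndc = scaleX * (x / depth) + offsetX
//   y_ndc = scaleY * (y / depth) + offsetY
// map the four frustum edges to -1 and +1.
struct ProjectionTerms {
    float scaleX, offsetX, scaleY, offsetY;
};

ProjectionTerms projectionTerms(const FovTangents& fov) {
    const float width  = fov.left + fov.right;
    const float height = fov.up + fov.down;
    assert(width > 0.0f && height > 0.0f);
    return { 2.0f / width,  (fov.left - fov.right) / width,
             2.0f / height, (fov.down - fov.up) / height };
}

// Full horizontal field of view in degrees, recovered from the tangents.
float horizontalFovDegrees(const FovTangents& fov) {
    return (std::atan(fov.left) + std::atan(fov.right)) * 180.0f / 3.14159265f;
}
```

With made-up numbers (10 mm eye relief, eye 2 mm left of center, 40 mm lens), `fovFromEyePosition(0.010f, -0.002f, 0.0f, 0.040f)` gives a left tangent of about 1.8 and a right tangent of about 2.2, roughly 126.5 degrees horizontally. The nonzero `offsetX` is what makes the frustum asymmetric, matching the lopsided triangle in the diagram.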

But then it gets a bit weird:

    // That's the basic looking-straight-ahead eye position relative to the lens.
    // But if you look left, the pupil moves left as the eyeball rotates, which
    // means you can see more to the right than this geometry suggests.
    // So add in the bounds for the extra movement of the pupil.

    // Beyond 30 degrees does not increase FOV because the pupil starts moving backwards more than sideways.

    // The rotation of the eye is a bit more complex than a simple circle. The center of rotation
    // at 13.5mm from cornea is slightly further back than the actual center of the eye.
    // Additionally the rotation contains a small lateral component as the muscles pull the eye

Which is where we see extraEyeRotationInRadians put to use; this may imply an interest in eye tracking.
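As a rough illustration of what those comments describe, the sketch below swings the pupil around a fixed center of rotation and widens one edge tangent accordingly. It is an assumption-laden reading rather than the SDK's implementation: the function names are hypothetical, the 13.5 mm figure from the comment is treated as the pupil's radius of rotation, the 30 degree figure is applied as a hard clamp, and the "small lateral component" is left out because the quoted comment gives no magnitude for it.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Numbers taken from the SDK comment; using 13.5 mm as the pupil's radius of
// rotation is an assumption of this sketch.
const float kRotationRadiusMeters = 0.0135f;
const float kMaxUsefulRotation = 30.0f * 3.14159265f / 180.0f;

// How far the pupil moves when the eye rotates away from straight ahead.
struct PupilShift {
    float sideways;   // lateral movement, in meters
    float backwards;  // movement away from the lens, in meters
};

PupilShift pupilShiftForRotation(float rotationRadians) {
    // Clamp to [0, 30 degrees], following the comment's cap.
    const float angle =
        std::min(kMaxUsefulRotation, std::max(0.0f, rotationRadians));
    return { kRotationRadiusMeters * std::sin(angle),
             kRotationRadiusMeters * (1.0f - std::cos(angle)) };
}

// Tangent toward one lens edge, widened to cover the rotated pupil position.
// edgeDistance is the lateral distance from the straight-ahead pupil to that
// lens edge. Looking away from an edge moves the pupil away from it, which
// opens up the view past that edge.
float widenedEdgeTangent(float edgeDistance, float eyeRelief,
                         float rotationRadians) {
    assert(eyeRelief > 0.0f);
    const PupilShift shift = pupilShiftForRotation(rotationRadians);
    const float straightAhead = edgeDistance / eyeRelief;
    const float rotated = (edgeDistance + shift.sideways) /
                          (eyeRelief + shift.backwards);
    // Keep whichever bound is wider so rotation never shrinks the FOV.
    return std::max(straightAhead, rotated);
}
```

With a hypothetical 20 mm edge distance and 10 mm eye relief, the straight-ahead tangent is 2.0, and a 30 degree rotation pushes it to roughly 2.27. Any larger rotation returns the same value because of the clamp.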

Also, in the function that calls CalculateFovFromEyePosition:

    // Limit the eye-relief to 6 mm for FOV calculations since this just tends to spread off-screen
    // and get clamped anyways on DK1 (but in Unity it continues to spreads and causes
    // unnecessarily large render targets)

So, for FOV calculations at least, the eye is never treated as closer than 0.006 meters. They cite render target size concerns; are there also physical or visual arguments?

How close is even physically plausible? According to a single paper I just looked up at random (Thibaut et al., 2010): “eyelash length rarely exceeds 10 mm”. So at 6 mm, it seems there’s a good chance we’re within uncomfortable eyelash-touching territory; that is, closer than wearers are likely to want to be.
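Back on the render-target side of that clamp, a quick sketch shows why a floor on eye relief matters. Everything here except the 6 mm figure is an assumption: the helper names are hypothetical, the eye is taken as centered on the lens, and the sizing rule (a fixed pixel density per unit of tangent) is my guess at the general idea, not the SDK's actual formula.

```cpp
#include <algorithm>
#include <cassert>

// The 6 mm floor quoted in the SDK comment.
const float kMinEyeReliefMeters = 0.006f;

// Never let the FOV calculation see an eye relief below the floor.
float clampEyeRelief(float eyeReliefMeters) {
    return std::max(kMinEyeReliefMeters, eyeReliefMeters);
}

// Sum of the left and right tangents for a centered eye:
// (half + half) / eyeRelief = lensDiameter / eyeRelief.
float horizontalTangentSpan(float eyeReliefMeters, float lensDiameterMeters) {
    assert(eyeReliefMeters > 0.0f);
    return lensDiameterMeters / eyeReliefMeters;
}

// Hypothetical sizing rule: a fixed number of pixels per unit of tangent,
// rounded to the nearest pixel.
int renderTargetWidth(float tangentSpan, float pixelsPerTangent) {
    return static_cast<int>(tangentSpan * pixelsPerTangent + 0.5f);
}
```

Because the tangent span goes as one over eye relief, halving the relief doubles the width. With a made-up 40 mm lens and 300 pixels per unit tangent, 6 mm of relief asks for about 2000 pixels across while an unclamped 3 mm would ask for about 4000, most of which would land off-screen anyway.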

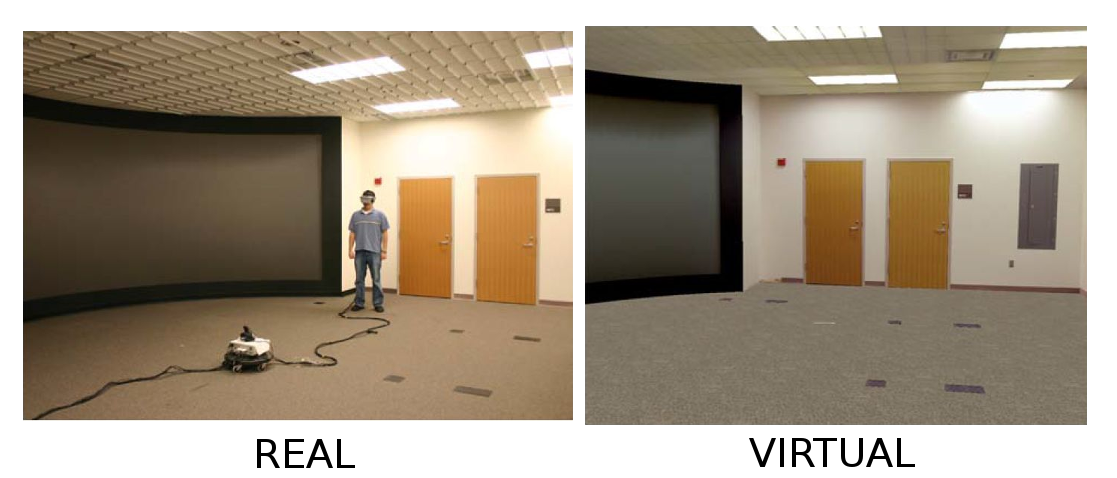

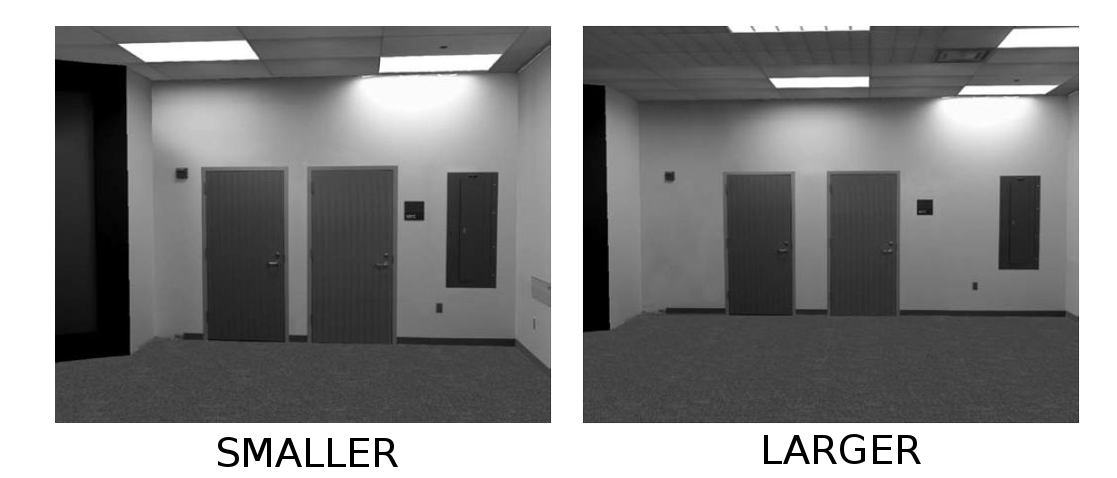

More directly relevant to the current work: what are the visual ramifications for lens-induced artifacts, for the rendered scene, and for any interplay between them? It looks like the Rift has aspirations to precisely calibrate its render to eye position, down to the lateral movement of the eye as it rotates. What happens if we de-sync the virtual and physical (or maybe: scene-to-screen and lens-to-eye) aspects of the calibration they’re so meticulously constructing?

More on that next week.

references:

Thibaut, S., De Becker, E., Caisey, L., Baras, D., Karatas, S., Jammayrac, O., … & Bernard, B. A. (2010). Human eyelash characterization. British Journal of Dermatology, 162(2), 304-310.