I’ve found a path by which the Oculus SDK generates the field of view (FOV):

CalculateFovFromHmdInfo calls CalculateFovFromEyePosition, then ClampToPhysicalScreenFov. (It also clamps eye relief to a max of 0.006 meters, which is thus far not reproduced in my code.)
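Here is a minimal Python sketch of that call order, including the 0.006 m eye-relief cap that my code doesn't yet reproduce. It is not the SDK's code. The names `fov_from_hmd_info`, `Fov` and `MAX_EYE_RELIEF_M` are hypothetical. The two SDK steps are passed in as plain functions so the sketch stands alone.

```python
from typing import Callable, NamedTuple


class Fov(NamedTuple):
    """Hypothetical container: tangents of the FOV half-angles from image center."""
    up: float
    down: float
    left: float
    right: float


# Eye-relief cap applied before the FOV calculation, per the description above.
MAX_EYE_RELIEF_M = 0.006


def fov_from_hmd_info(
    eye_relief_m: float,
    calculate: Callable[[float], Fov],
    clamp: Callable[[Fov], Fov],
) -> Fov:
    """Mirror the CalculateFovFromHmdInfo call order (a sketch, not SDK source)."""
    # 1. Cap eye relief at 0.006 m.
    capped_relief = min(eye_relief_m, MAX_EYE_RELIEF_M)
    # 2. Geometric FOV from eye position (stands in for CalculateFovFromEyePosition).
    fov = calculate(capped_relief)
    # 3. Limit to the physical screen FOV (stands in for ClampToPhysicalScreenFov).
    return clamp(fov)


# Usage with stub steps: one echoes the eye relief it receives, one does no clamping.
def echo_relief(er: float) -> Fov:
    return Fov(er, er, er, er)


def no_clamp(fov: Fov) -> Fov:
    return fov


print(fov_from_hmd_info(0.01, echo_relief, no_clamp))
# Fov(up=0.006, down=0.006, left=0.006, right=0.006)
```

One consequence of the cap: an eye relief of 0.01 m (the SDK default noted below) would enter the FOV calculation as 0.006 m.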

All of these functions are in OVR_Stereo.cpp/.h.

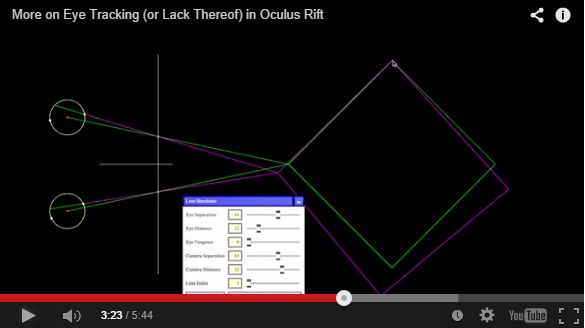

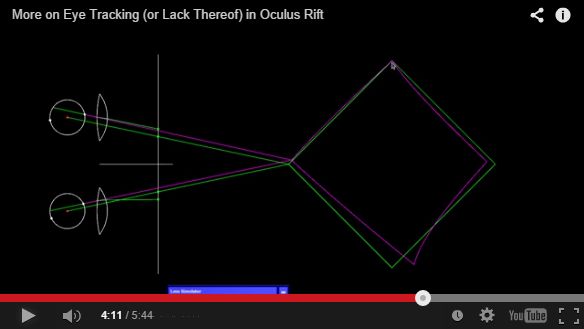

CalculateFovFromEyePosition calculates the FOV as four tangents measured from image center: up, down, left and right. Each is simply the offset from image center (lens radius plus the eye's offset from lens center) divided by the eye relief (the distance from the eye to the lens surface). It also applies the odd eye-rotation correction mentioned in an earlier post, and uses the maximum of the corrected and uncorrected tangents.
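Below is a Python sketch of the basic tangent calculation just described. It assumes the eye offset is split into horizontal and vertical components. It also assumes an offset adds to the tangent on one side and subtracts from the opposite side. For example, an eye sitting right of lens center sees more lens to its left. That sign convention is my assumption. The eye-rotation correction is left out entirely, and the function name and `Fov` container are hypothetical.

```python
from typing import NamedTuple


class Fov(NamedTuple):
    """Hypothetical container: tangents of the FOV half-angles from image center."""
    up: float
    down: float
    left: float
    right: float


def calculate_fov_from_eye_position(
    eye_relief_m: float,
    lens_radius_m: float,
    offset_right_m: float = 0.0,
    offset_down_m: float = 0.0,
) -> Fov:
    """Each tangent = (lens radius +/- eye offset from lens center) / eye relief.

    Sketch only: the sign convention is assumed, and the eye-rotation
    correction (max of corrected and uncorrected tangents) is not modeled.
    """
    return Fov(
        up=(lens_radius_m + offset_down_m) / eye_relief_m,
        down=(lens_radius_m - offset_down_m) / eye_relief_m,
        left=(lens_radius_m + offset_right_m) / eye_relief_m,
        right=(lens_radius_m - offset_right_m) / eye_relief_m,
    )


# Hypothetical numbers: 0.02 m lens radius, eye 0.002 m right of lens center,
# 0.01 m eye relief. Left tangent comes out near 2.2, right near 1.8.
print(calculate_fov_from_eye_position(0.01, 0.02, offset_right_m=0.002))
```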

ClampToPhysicalScreenFov estimates the physical screen FOV from the lens distortion (via GetPhysicalScreenFov). It then returns the minimum of the input FOV and that estimated physical FOV.
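The following is a sketch of the clamping step. It assumes the minimum is taken separately for each of the four tangents. It also assumes the physical screen FOV is simply handed in, since GetPhysicalScreenFov is not reproduced here. The function name is hypothetical and the physical values in the usage lines are made up.

```python
from typing import NamedTuple


class Fov(NamedTuple):
    """Hypothetical container: tangents of the FOV half-angles from image center."""
    up: float
    down: float
    left: float
    right: float


def clamp_to_physical_screen_fov(requested: Fov, physical: Fov) -> Fov:
    """Per-direction minimum of the requested FOV and the physical screen FOV."""
    return Fov(*(min(r, p) for r, p in zip(requested, physical)))


requested = Fov(up=2.0, down=2.0, left=2.2, right=1.8)
physical = Fov(up=1.3, down=1.3, left=1.1, right=2.0)  # hypothetical estimate
print(clamp_to_physical_screen_fov(requested, physical))
# Fov(up=1.3, down=1.3, left=1.1, right=1.8)
```

Because the minimum is taken per direction, the result can mix sides. In the example, three tangents come from the physical estimate and one comes from the requested FOV.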

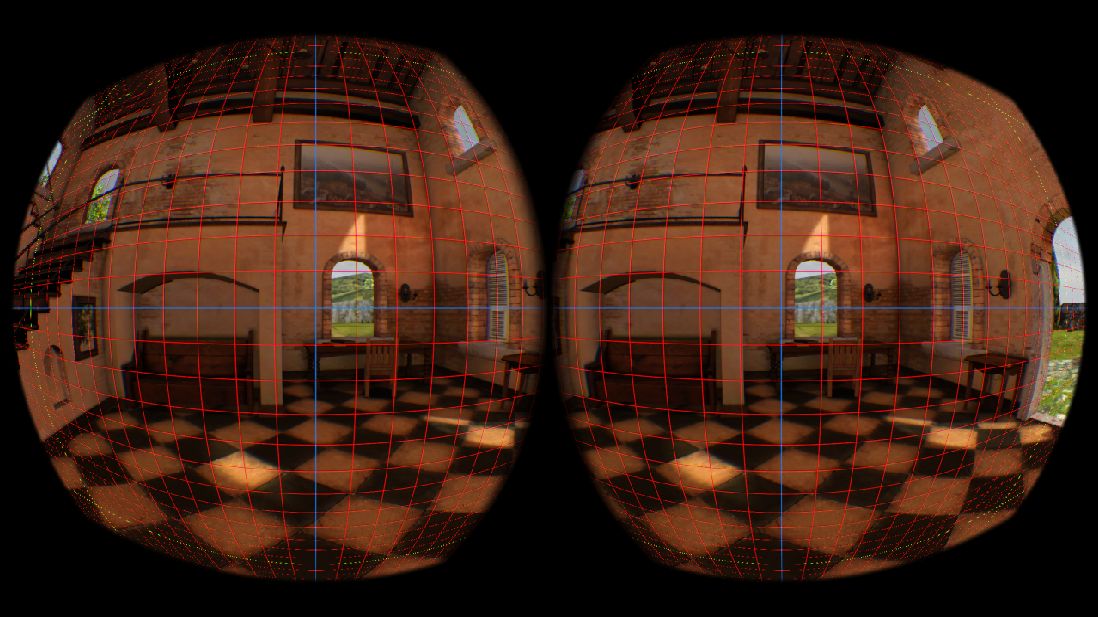

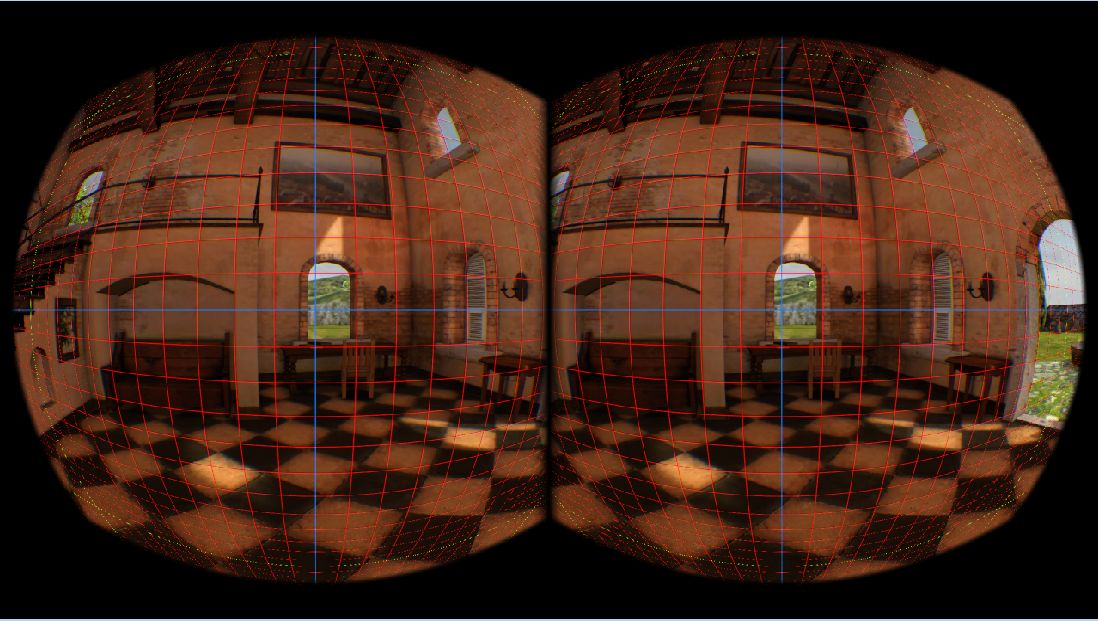

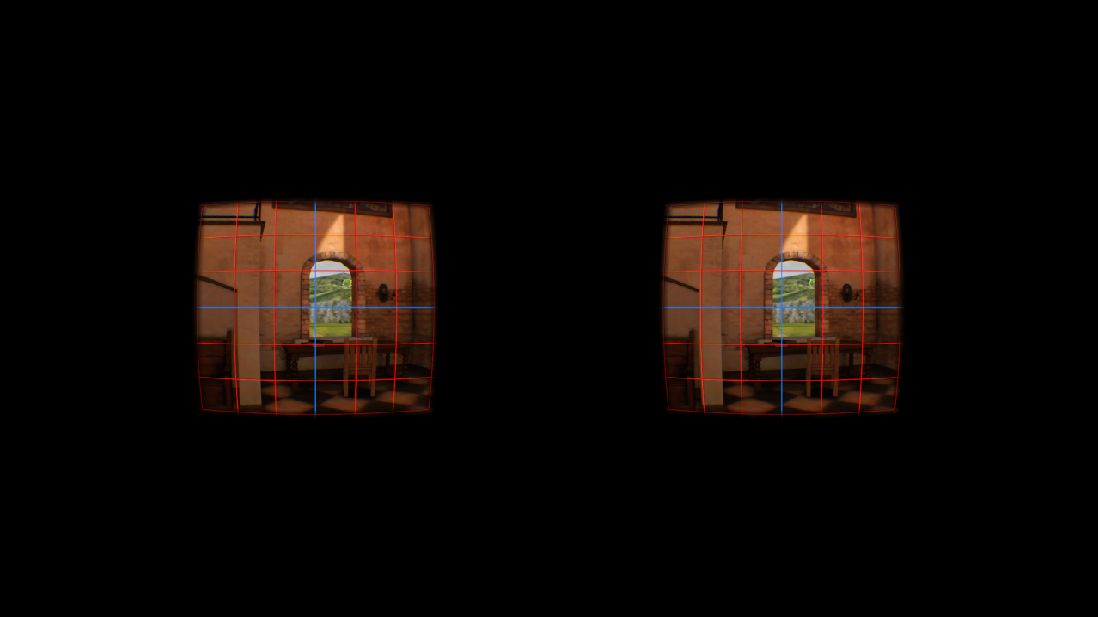

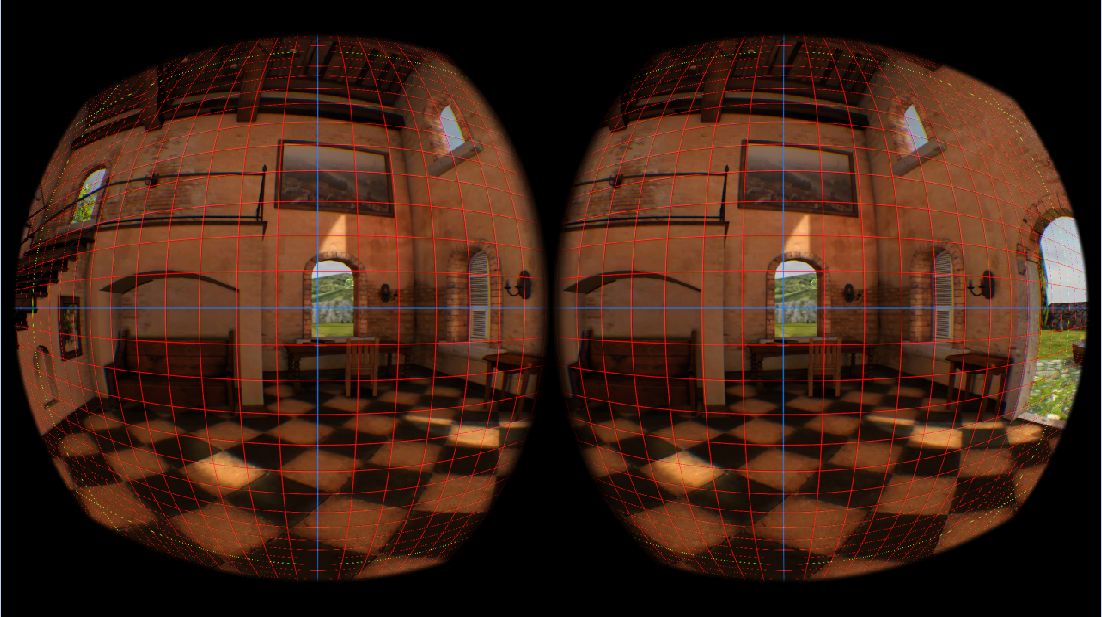

Last week’s images were made using CalculateFovFromEyePosition but not ClampToPhysicalScreenFov. Clamping makes my FOV match the default. However, it introduces odd distortions outside certain bounds for eye relief (ER) and for the offsets from image center, which I derive from interpupillary distance (IPD). I haven’t yet thought much about why, but here are some quick observations on when the distortions appear (all values in meters):

ER values below -0.0018 produce a flipped image. Flipping is expected for negative values, so we would expect it as soon as ER drops below 0; the surprise is that it waits so long.
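The expectation that the flip should start at 0 follows from the basic tangent formula. Ignore the eye-rotation correction, and write $r$ for lens radius, $d$ for the eye's offset from lens center and $e$ for eye relief (my notation). Each tangent is then

$$\tan\theta = \frac{r + d}{e}.$$

As long as the lens radius exceeds the eye offset ($r + d > 0$), every tangent takes the sign of $e$. All four therefore change sign together as $e$ crosses zero, and the formula is undefined at $e = 0$ itself.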

ER values above 0.019 cause a vertical stretch. The stretch is fairly uniform between top and bottom and grows at a modest rate, so with the current stimulus the onset seems fairly gradual.

Both ER bounds hold fairly well across all IPD values. The IPD bounds, however, depend on ER.

At negative ER values down to -0.0018, IPD doesn’t cause distortions (tested for IPD values >1 and <-0.7). The model has clearly entered some kind of odd state with negative ER. That is worth keeping in mind for future debugging and modeling, but we shouldn’t need negative ER directly.

At an ER of 0.0001, the image distorts for IPD outside the range 0.0125 to 0.1145.

At an ER of 0.01, the image distorts for IPD outside the range 0.034 to 0.094. (This ER is the Oculus SDK’s default.)

At an ER of 0.019, the image distorts for IPD outside the range 0.0535 to 0.075.

Large IPD values stretch the images for both eyes away from the nose, and small values stretch them toward it. The distortion is drastic and grows quickly as IPD moves away from the “safe” range.

These ranges may be a little restrictive for our purposes but should be workable. A further worry is that the distortions may indicate a flaw in the clamping method itself.

When designing experiments that depend on specific IPD and ER values, we’ll also need to know when clamping kicks in.
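To flag risky parameter combinations when planning experiments, here is a sketch that checks an (ER, IPD) pair against the distortion-free IPD ranges observed above. It only knows the three measured ER values. For an ER that falls between two of them, it uses the range measured at the next larger ER. That choice is conservative only under my assumption that the safe IPD range narrows steadily as ER grows. The three measurements show that trend, but nothing in between was tested. Range endpoints are treated as inclusive, which is also an assumption. The function name and table are hypothetical.

```python
import bisect
from typing import Optional

# (eye relief, lowest safe IPD, highest safe IPD), all in meters, as observed above.
OBSERVED_SAFE_IPD = [
    (0.0001, 0.0125, 0.1145),
    (0.01, 0.034, 0.094),
    (0.019, 0.0535, 0.075),
]


def ipd_within_observed_range(eye_relief_m: float, ipd_m: float) -> Optional[bool]:
    """True/False if the pair is inside/outside the observed safe IPD range;
    None if the eye relief lies outside the measured span of this table."""
    reliefs = [er for er, _, _ in OBSERVED_SAFE_IPD]
    if not reliefs[0] <= eye_relief_m <= reliefs[-1]:
        return None
    # First measured ER >= eye_relief_m, i.e. the narrower neighboring range
    # (assuming ranges shrink as ER grows).
    i = bisect.bisect_left(reliefs, eye_relief_m)
    _, low, high = OBSERVED_SAFE_IPD[i]
    return low <= ipd_m <= high


print(ipd_within_observed_range(0.01, 0.064))   # True
print(ipd_within_observed_range(0.019, 0.08))   # False
print(ipd_within_observed_range(0.03, 0.064))   # None
```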